Fusion system and method based on multiple cameras and an inertial measurement unit

Inertial measurement unit and multi-camera technology, applied in measurement devices, closed-circuit television systems, navigation through speed/acceleration measurement, and similar fields. It addresses problems such as unstable and unreliable results and weak image texture, with the effect of raising the perception level and improving modeling accuracy.

Examples

Embodiment 1

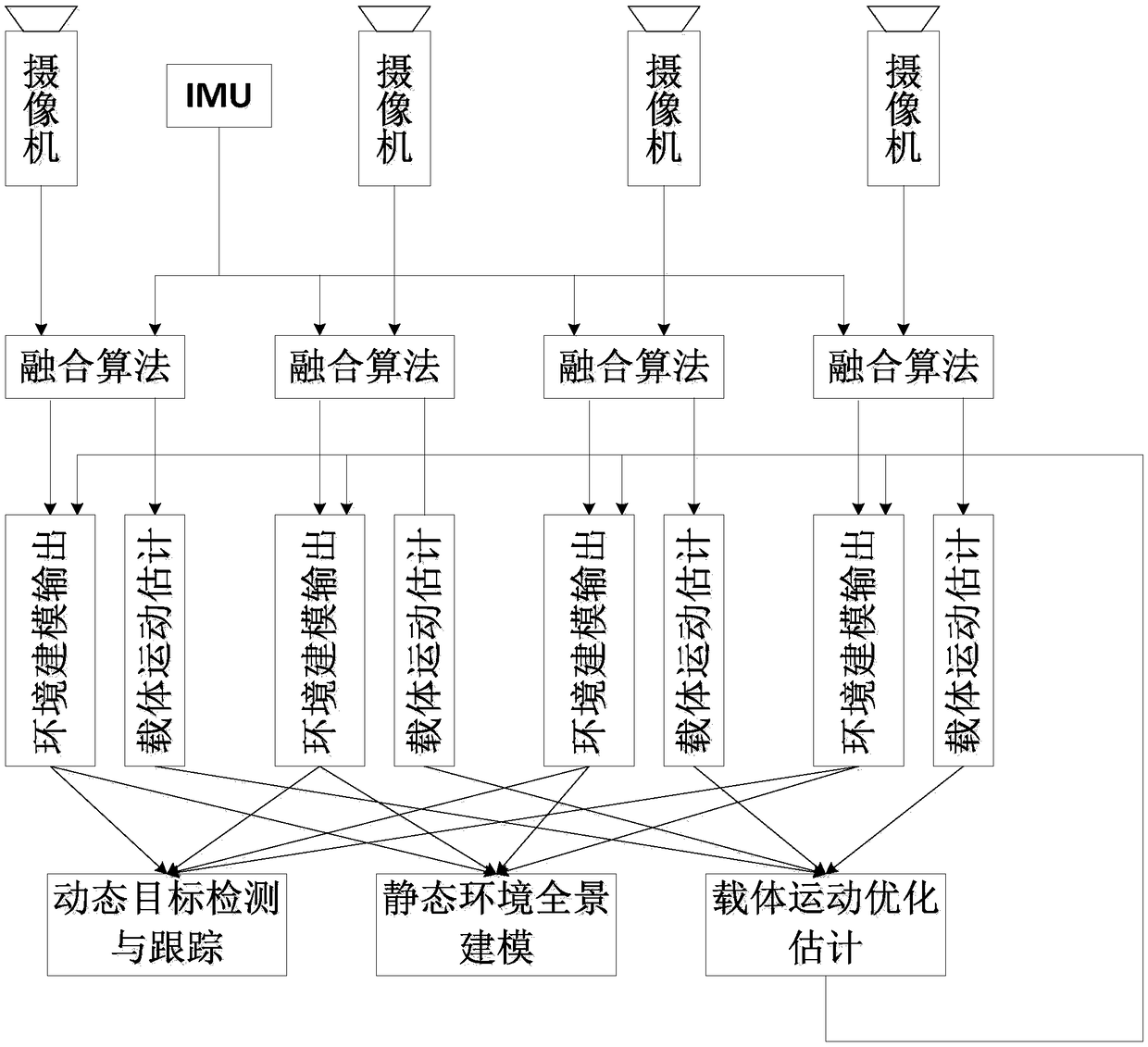

[0055] This embodiment of the present invention provides a system and method based on multi-camera and inertial measurement unit fusion. Multiple cameras obtain panoramic images around the moving carrier, and the inertial measurement unit obtains the acceleration and angular velocity of the moving carrier. The panoramic images and the acceleration and angular velocity information are fused to distinguish moving targets from the static background in the environment, yielding reliable environmental modeling results and thereby enabling the detection and tracking of moving targets.
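One way to picture how angular velocity can help separate moving targets from the static background is the minimal sketch below. It is not the method claimed in the patent, whose processing steps are not reproduced here. It assumes a yaw-only rotation of the carrier, a bias-free gyroscope, background points distant enough that translation parallax is negligible, and feature azimuths already expressed in the carrier frame. The function names `integrate_yaw`, `wrap`, and `classify_features`, and the 1° threshold, are hypothetical.

```python
import math

def integrate_yaw(gyro_z_rad_s, dt_s):
    """Integrate yaw-rate samples (rad/s), taken at a fixed period dt_s, into a yaw change (rad)."""
    return sum(w * dt_s for w in gyro_z_rad_s)

def wrap(angle):
    """Wrap an angle into the range [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))

def classify_features(az_prev, az_curr, yaw_change, threshold_rad=math.radians(1.0)):
    """Label each tracked feature as 'static' or 'moving'.

    A static, distant point seen from a carrier that yaws by +yaw_change
    appears to shift by -yaw_change in the carrier frame. Features whose
    observed azimuth departs from that prediction by more than the
    threshold are labeled 'moving' (assumption: pure yaw, no parallax).
    """
    labels = []
    for a0, a1 in zip(az_prev, az_curr):
        predicted = wrap(a0 - yaw_change)
        residual = abs(wrap(a1 - predicted))
        labels.append("moving" if residual > threshold_rad else "static")
    return labels

# Illustrative data: the carrier yaws at 0.1 rad/s for 10 samples at 100 Hz (about 0.01 rad).
yaw = integrate_yaw([0.1] * 10, 0.01)
prev_az = [0.50, -1.20, 2.00]
curr_az = [0.49, -1.21, 2.05]  # the third feature moves against the predicted shift
print(classify_features(prev_az, curr_az, yaw))
```

The first two features follow the shift predicted from the gyroscope and are labeled static; the third deviates by roughly 0.06 rad and is labeled moving. A real system would also have to handle full 3D rotation, carrier translation, and sensor noise.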

[0056] In one aspect of the present invention, a system based on multi-camera and inertial measurement unit fusion is provided.

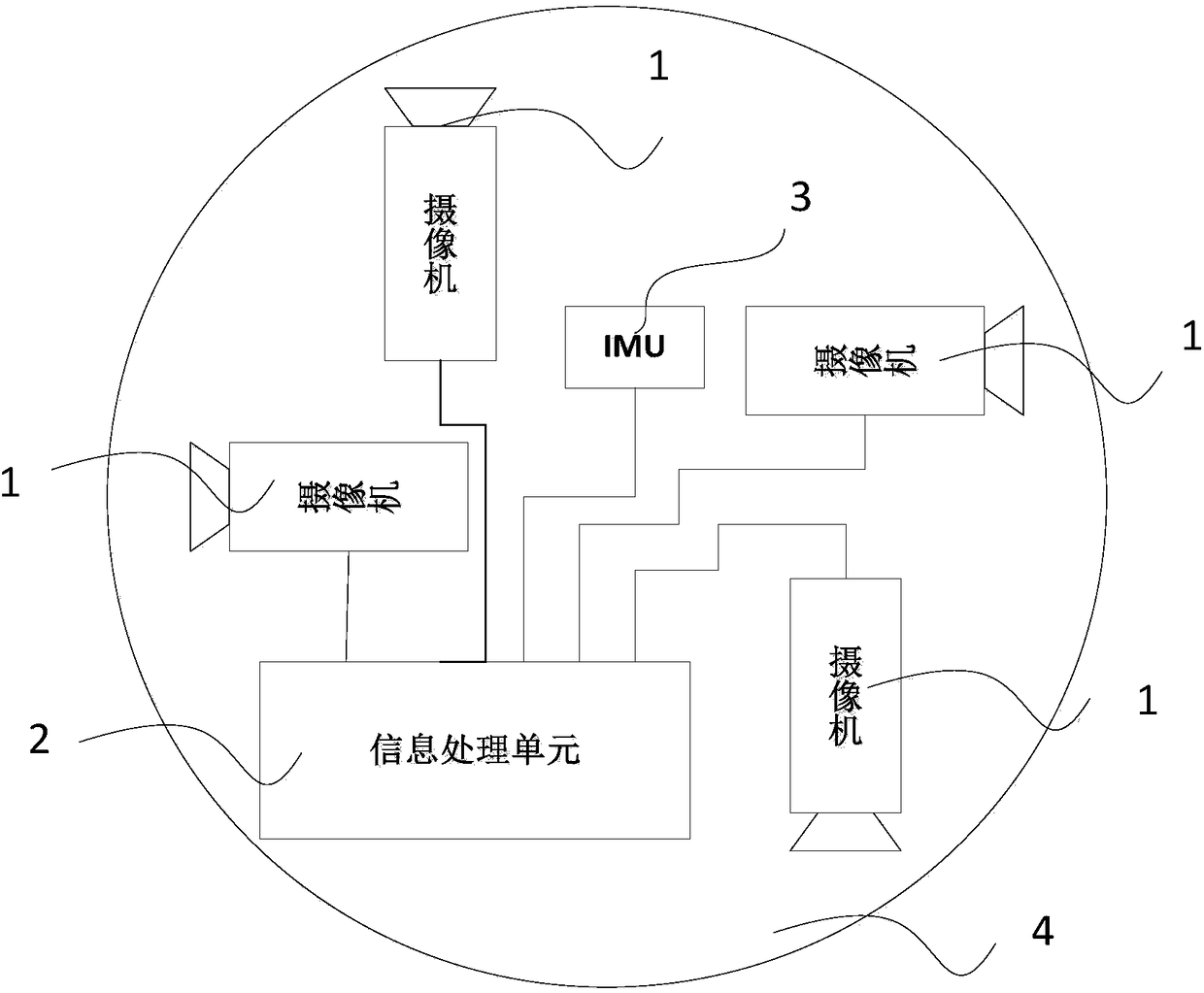

[0057] A schematic structural diagram of a system based on multi-camera and inertial measurement unit fusion provided by an embodiment of the present invention is shown in Figure 1. As shown, the system includes: an inertial measuremen...

Embodiment 2

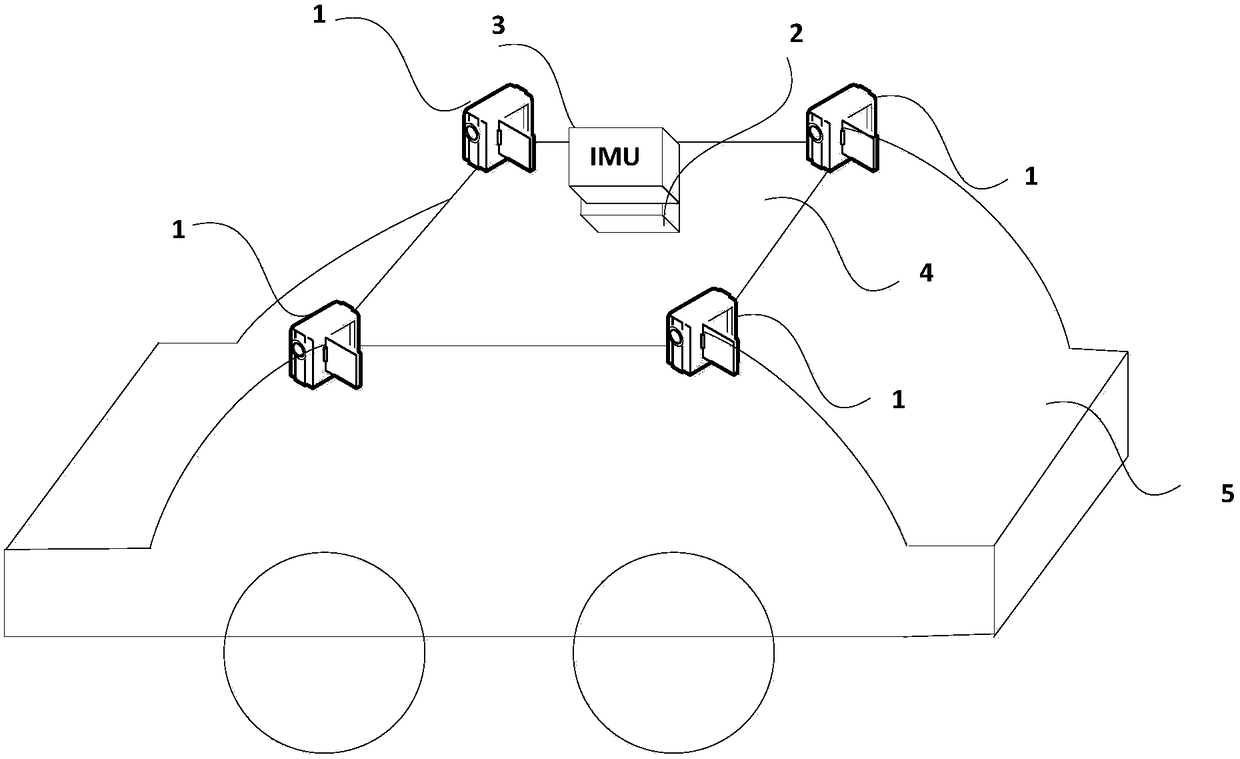

[0087] This embodiment provides a system and method based on multi-camera and inertial measurement unit fusion. Figure 2 is a schematic diagram of the system structure. As shown in Figure 2, the system includes: four horizontally placed cameras 1, each of which acquires images with a 100° field of view through the choice of lens focal length; an information processing unit 2, a high-performance computer responsible for information fusion processing; an inertial measurement unit 3, which measures the acceleration and angular velocity of the moving carrier; and a mounting bracket 4, which fixes the cameras and the IMU on top of the vehicle. The information processing unit is placed in a suitable space inside the vehicle, and the cameras and the IMU are connected by cables to the corresponding interfaces of the information processing unit.
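The camera arrangement can be checked with simple arithmetic. The sketch below assumes the four cameras are spaced evenly around the vertical axis (90° between adjacent optical axes), which the text does not state explicitly. Under that assumption, four 100° views sum to 400°, leaving about 10° of overlap between each adjacent pair. The function name `ring_coverage` is hypothetical.

```python
def ring_coverage(num_cameras: int, fov_deg: float) -> dict:
    """Horizontal coverage of cameras spaced evenly around a full circle (assumed layout)."""
    spacing = 360.0 / num_cameras  # angle between adjacent optical axes
    overlap = fov_deg - spacing    # > 0: adjacent views overlap; < 0: blind gap
    return {
        "spacing_deg": spacing,
        "overlap_deg": overlap,
        "full_coverage": overlap >= 0.0,
    }

# Four cameras with a 100 degree field of view, as in this embodiment.
print(ring_coverage(4, 100.0))
```

A positive overlap matters for panoramic stitching, since features seen by two adjacent cameras can be used to align their images.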

[0088] The data processing flow of the fusion processing method based on the above system is as fol...

Embodiment 3

[0098] This embodiment provides a system based on multi-camera and IMU fusion. As shown in Figure 4, three horizontally placed cameras 1 and an inertial measurement unit 3 are fixed on a helmet 4, and the information processing unit 2, a portable mobile device, is responsible for information fusion processing.
