Feature fusion-based RGB-D camera motion estimation method

A camera motion estimation technology based on feature fusion, applied in computing, image data processing, instruments, and related fields, that addresses problems such as sensitivity to lighting and high noise.

Embodiment Construction

[0060] Embodiments of the present invention will be further described below in conjunction with the accompanying drawings.

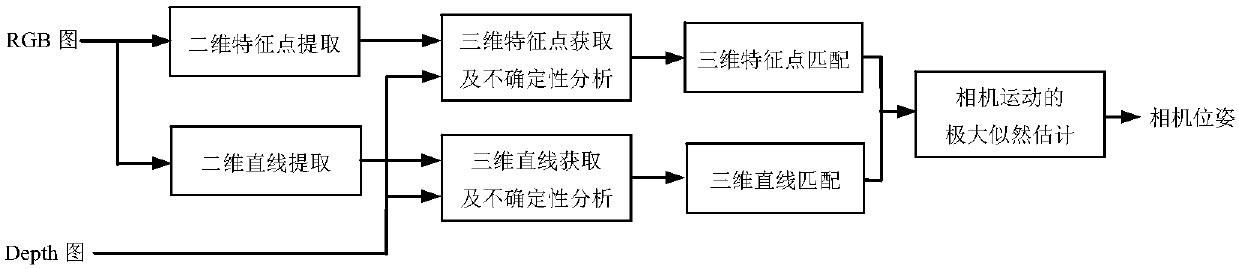

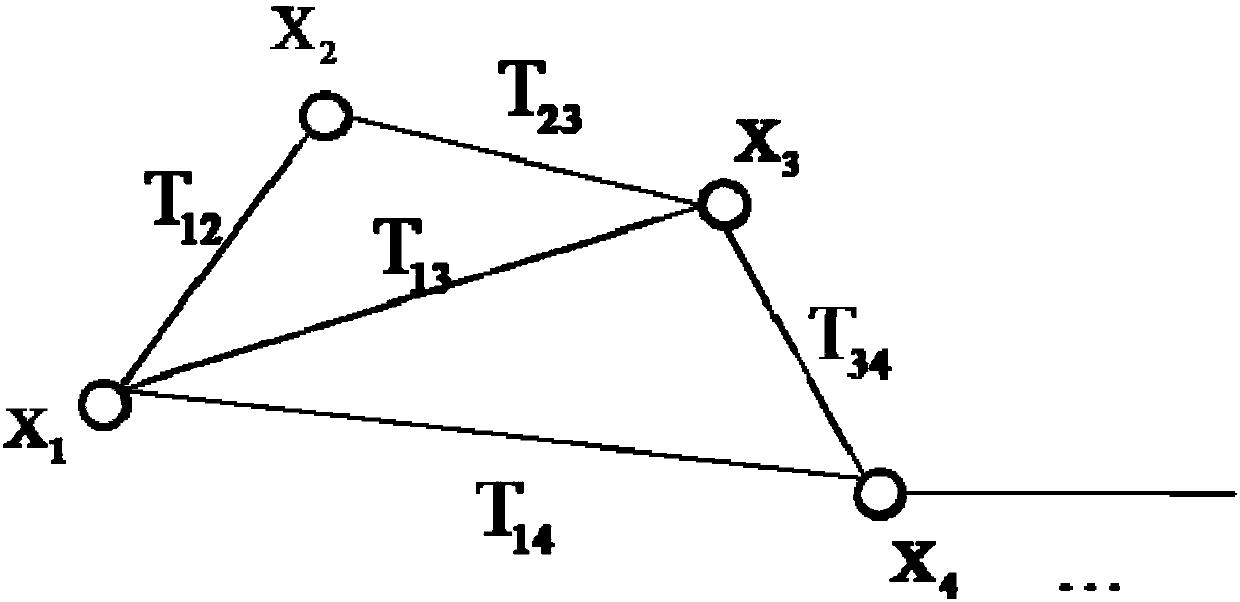

[0061] The present invention proposes an RGB-D camera motion estimation method based on point and line feature fusion. The block diagram of the whole system is shown in Figure 1, and the method includes the following steps:

[0062] S1. Two-dimensional feature extraction: the system extracts two-dimensional point features and two-dimensional line features separately from the input RGB image.

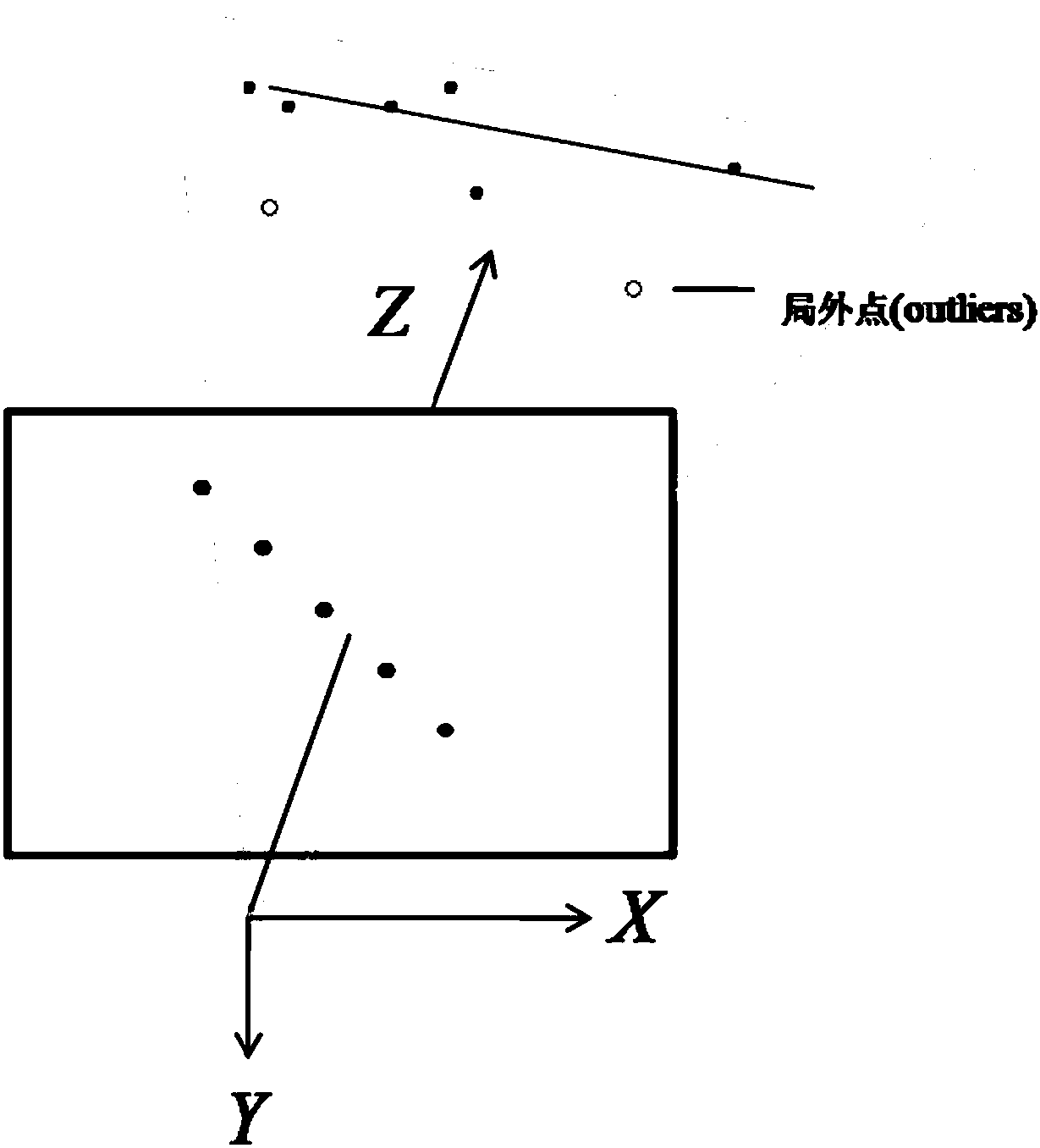

[0063] In the present invention, a group of two-dimensional point features is obtained through SURF (Speeded-Up Robust Features, a feature point detection algorithm), and at the same time, two-dimensional straight line features are obtained through the LSD (Line Segment Detector) line segment detection algorithm. For an RGB image, the feature set is $\{p_i, l_j \mid i = 1, 2, \ldots,\ j = 1, 2, \ldots\}$, where the two-dimensional point $p_i = [u_i, v_i]^T$, a two-dimensional straight line $[u_i, v_i]^T$ represents t...
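Step S1 can be illustrated with a minimal Python sketch. It is not the patent's implementation: the names `FeatureSet2D`, `extract_2d_features`, and `make_opencv_detectors` are hypothetical, and storing each line as a pair of endpoints is an assumption taken from the form in which OpenCV's LSD reports segments, since the line parameterization in the text above is cut off. The OpenCV helper assumes a build that includes the contrib `xfeatures2d` module with SURF enabled, and the Hessian threshold of 400 is only an illustrative default.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Point2D = Tuple[float, float]        # p_i = [u_i, v_i]^T in pixel coordinates
Segment2D = Tuple[Point2D, Point2D]  # assumed representation: two endpoints


@dataclass
class FeatureSet2D:
    """2D features of one RGB frame: points p_i and line segments l_j."""
    points: List[Point2D] = field(default_factory=list)
    lines: List[Segment2D] = field(default_factory=list)


def extract_2d_features(image, detect_points, detect_lines) -> FeatureSet2D:
    """Run a point detector and a line detector on the same image (step S1).

    detect_points(image) must yield (u, v) pairs;
    detect_lines(image) must yield (u1, v1, u2, v2) tuples.
    """
    points = [(float(u), float(v)) for u, v in detect_points(image)]
    lines = [((float(u1), float(v1)), (float(u2), float(v2)))
             for u1, v1, u2, v2 in detect_lines(image)]
    return FeatureSet2D(points, lines)


def make_opencv_detectors(hessian_threshold: float = 400.0):
    """Build SURF and LSD detector callables on top of OpenCV.

    Assumes an OpenCV build with the contrib xfeatures2d module (SURF)
    and with createLineSegmentDetector available.
    """
    import cv2  # imported lazily so the container above works without OpenCV

    surf = cv2.xfeatures2d.SURF_create(hessian_threshold)
    lsd = cv2.createLineSegmentDetector()

    def detect_points(gray):
        # Each keypoint carries its pixel location in kp.pt = (u, v).
        return [kp.pt for kp in surf.detect(gray, None)]

    def detect_lines(gray):
        # detect() returns (lines, widths, precisions, nfa); lines is N x 1 x 4.
        segments = lsd.detect(gray)[0]
        return [] if segments is None else [tuple(s[0]) for s in segments]

    return detect_points, detect_lines
```

With OpenCV installed, a call might look like `extract_2d_features(gray, *make_opencv_detectors())`, where `gray` is the grayscale version of the input RGB image. Passing the detectors in as callables keeps the feature container independent of any particular detector.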
