Large-scale resource scheduling system and large-scale resource scheduling method based on deep learning neural network

A neural network and deep learning technology, applied in the field of resource scheduling, that addresses problems such as the lack of distributed parallel execution capability, improving stability, scalability, and training efficiency.


Detailed Description of the Embodiments

[0021] To help those of ordinary skill in the art understand and implement the present invention, the invention is described in further detail below with reference to the accompanying drawings and embodiments. It should be understood that the embodiments described here serve only to illustrate and explain the present invention and are not intended to limit it.

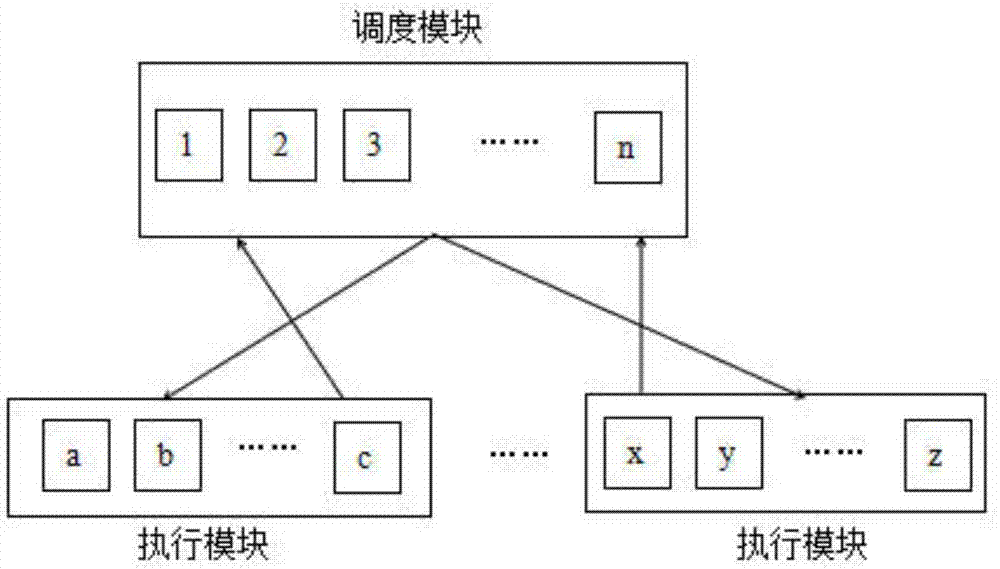

[0022] Referring to Figure 1 and Figure 2, the present invention provides a large-scale resource scheduling system for deep neural networks, which includes at least one scheduling control module and at least two execution modules. The scheduling control module is the core of the entire distributed resource scheduling system. Its tasks are receiving user requests, allocating and scheduling resources, and providing feedback on the status of parallel computation. The execution module is the body that runs the task computation. Its tasks are receiving task requests sent by the scheduling control module, ...
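
The division of roles in [0022] (one scheduling control module that accepts user requests, allocates work, and reports status, plus at least two execution modules that run the tasks) can be illustrated with a minimal in-process sketch. This is not the patented implementation. The class names `SchedulingController`, `ExecutionModule` and `TaskRequest`, the round-robin allocation policy, and the squaring "workload" are all hypothetical stand-ins. Threads also stand in for what would be separate networked nodes in a real distributed deployment.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class TaskRequest:
    task_id: str
    payload: int  # hypothetical toy unit of work


@dataclass
class ExecutionModule:
    """Running body of task computation: executes task requests sent by the controller."""
    name: str
    completed: List[str] = field(default_factory=list)

    def run(self, task: TaskRequest) -> Dict[str, object]:
        # Hypothetical workload: squaring the payload stands in for a training step.
        result = task.payload * task.payload
        self.completed.append(task.task_id)
        return {"task_id": task.task_id, "module": self.name,
                "result": result, "status": "done"}


class SchedulingController:
    """Core of the scheduling: accepts user requests, allocates them, reports status."""

    def __init__(self, modules: List[ExecutionModule]):
        # The described system has at least two execution modules.
        if len(modules) < 2:
            raise ValueError("at least two execution modules are required")
        self.modules = modules
        self.status: Dict[str, str] = {}

    def submit(self, tasks: List[TaskRequest]) -> List[Dict[str, object]]:
        # Allocation policy (assumption): round-robin over the execution modules.
        assignments = [(self.modules[i % len(self.modules)], t)
                       for i, t in enumerate(tasks)]
        for _, t in assignments:
            self.status[t.task_id] = "scheduled"
        # Run the assigned tasks concurrently on a pool sized to the module count.
        with ThreadPoolExecutor(max_workers=len(self.modules)) as pool:
            futures = [pool.submit(m.run, t) for m, t in assignments]
            results = [f.result() for f in futures]  # preserves submission order
        # Status feedback: record each task's final state for the user.
        for r in results:
            self.status[str(r["task_id"])] = str(r["status"])
        return results


if __name__ == "__main__":
    controller = SchedulingController([ExecutionModule("exec-0"), ExecutionModule("exec-1")])
    for r in controller.submit([TaskRequest(f"t{i}", i) for i in range(4)]):
        print(r)
```

Round-robin is chosen only because it is the simplest deterministic policy. A real scheduler would more likely weigh each execution module's current load or available accelerator memory when allocating resources.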
