Multi-scale convolution kernel method based on text-image generative adversarial network model

An image generation and network model technology, applied in the field of deep learning neural networks, which addresses the problem of slow feature learning and improves efficiency.


Embodiment

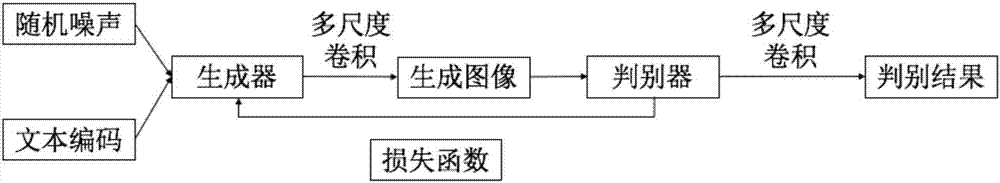

[0031] This embodiment discloses a multi-scale convolution kernel method based on a text-image generative adversarial network model, which comprises the following steps:

[0032] Step S1: construct a text-image generative adversarial network model in which the generator produces images and feeds them to the discriminator for network training.
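The adversarial training in Step S1 can be illustrated with the standard GAN losses. The following is a minimal sketch, not the patented method: it assumes the discriminator outputs a probability that an (image, text) pair is real, and that the generator uses the non-saturating loss from the original GAN formulation. The function names and the discriminator scores are hypothetical.

```python
import math

def bce(p: float, target: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy for a single probability p against a 0/1 target."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def discriminator_loss(d_real: float, d_fake: float) -> float:
    """D should score real (image, text) pairs as 1 and generated pairs as 0."""
    return bce(d_real, 1.0) + bce(d_fake, 0.0)

def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss: G wants D to score its images as real."""
    return bce(d_fake, 1.0)

# Hypothetical discriminator outputs for one training step.
d_real, d_fake = 0.9, 0.2
print(round(discriminator_loss(d_real, d_fake), 4))  # 0.3285
print(round(generator_loss(d_fake), 4))              # 1.6094
```

In a training loop the two losses are minimized in alternation: one update for the discriminator, then one for the generator.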

[0033] Step S2: use deep convolutional neural networks as the generator and the discriminator.
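Step S2 does not give layer settings. As an assumption, the sketch below uses DCGAN-style layers (4×4 kernels, stride 2, padding 1) and checks the spatial sizes with the standard output-size formulas for convolution and transposed convolution. The 4×4 starting map and the 64×64 image size are hypothetical choices.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output size of a standard convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1

def deconv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output size of a transposed convolution (no output padding, dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel

# Assumed generator: project noise + text code to a 4x4 map, then upsample 4 times.
g_sizes = [4]
for _ in range(4):
    g_sizes.append(deconv_out(g_sizes[-1], kernel=4, stride=2, padding=1))

# Assumed discriminator: the mirror image, downsampling a 64x64 input back to 4x4.
d_sizes = [64]
for _ in range(4):
    d_sizes.append(conv_out(d_sizes[-1], kernel=4, stride=2, padding=1))

print(g_sizes)  # [4, 8, 16, 32, 64]
print(d_sizes)  # [64, 32, 16, 8, 4]
```

Each stride-2 layer doubles (generator) or halves (discriminator) the spatial size, which keeps the two networks symmetric.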

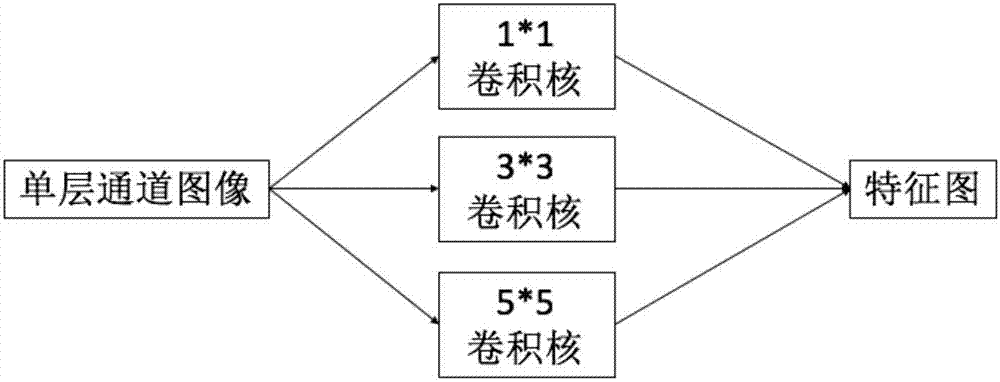

[0034] Different convolution kernels differ in their matrix values and in their numbers of rows and columns (that is, their size).
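A minimal sketch of this point, assuming plain "valid" cross-correlation (the operation CNN layers call convolution) on a toy image: two 3×3 kernels with different values respond to different edge directions, and a 5×5 kernel yields a smaller output map. The kernels and the image are illustrative, not taken from the patent.

```python
from typing import List

Matrix = List[List[float]]

def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + u][j + v] * kernel[u][v] for u in range(kh) for v in range(kw))
            for j in range(ow)
        ]
        for i in range(oh)
    ]

# 6x6 image with a vertical edge: left half dark (0), right half bright (1).
image = [[0.0] * 3 + [1.0] * 3 for _ in range(6)]

vertical = [[-1.0, 0.0, 1.0]] * 3                 # 3x3, responds to vertical edges
horizontal = [list(r) for r in zip(*vertical)]    # 3x3 transpose, horizontal edges
box5 = [[1.0 / 25] * 5 for _ in range(5)]         # 5x5 averaging (smoothing) kernel

v_map = conv2d_valid(image, vertical)
h_map = conv2d_valid(image, horizontal)
b_map = conv2d_valid(image, box5)
print(v_map[0])                      # [0.0, 3.0, 3.0, 0.0]
print(h_map[0])                      # [0.0, 0.0, 0.0, 0.0]
print(len(b_map), len(b_map[0]))     # 2 2
```

The vertical-edge kernel fires along the edge while the horizontal one stays silent, and the larger kernel shrinks the 6×6 input to 2×2 rather than 4×4.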

[0035] Construct multiple convolution kernels. When images are processed, different convolution kernels capture different features, so the network can learn different features of the generated images during training.
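The patent does not say how the multiple kernels are combined. One common arrangement, assumed here in the style of Inception modules, runs kernels of several sizes in parallel with zero "same" padding and stacks the resulting maps as channels. The uniform kernels and the `multi_scale_features` helper are hypothetical stand-ins for learned weights.

```python
from typing import List

Matrix = List[List[float]]

def conv2d_same(image: Matrix, kernel: Matrix) -> Matrix:
    """2D cross-correlation with zero padding so the output matches the input size.

    Assumes an odd-sized square kernel, so padding k // 2 keeps the size unchanged.
    """
    k = len(kernel)
    p = k // 2
    h, w = len(image), len(image[0])

    def px(i: int, j: int) -> float:
        # Zero padding outside the image borders.
        return image[i][j] if 0 <= i < h and 0 <= j < w else 0.0

    return [
        [
            sum(px(i + u - p, j + v - p) * kernel[u][v] for u in range(k) for v in range(k))
            for j in range(w)
        ]
        for i in range(h)
    ]

def multi_scale_features(image: Matrix, sizes=(3, 5, 7)) -> List[Matrix]:
    """Apply one kernel per scale in parallel and return the maps as channels.

    Hypothetical kernels: uniform averaging filters stand in for learned weights.
    """
    channels = []
    for k in sizes:
        kernel = [[1.0 / (k * k)] * k for _ in range(k)]
        channels.append(conv2d_same(image, kernel))
    return channels

# 8x8 image with a single bright pixel in the middle.
image = [[0.0] * 8 for _ in range(8)]
image[4][4] = 1.0

features = multi_scale_features(image)
print(len(features), len(features[0]), len(features[0][0]))  # 3 8 8
# Larger kernels spread the same pixel over a wider receptive field.
print([sum(1 for row in ch for x in row if x > 0) for ch in features])  # [9, 25, 49]
```

Because every branch keeps the 8×8 size, the maps can be stacked directly; the growing nonzero footprint shows each scale seeing a different neighborhood.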

[0036] Compared with the traditional generative adversarial network model, the network model of the present invention includes additional encoding operations for the text content, so tha...
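Because paragraph [0036] is truncated, the exact text-encoding operations are unknown. The sketch below only illustrates one generic way to condition a generator on text, as an assumption: average word embeddings from a toy, randomly initialized table and concatenate the result with a noise vector z. `EMBEDDINGS`, `encode_text`, and `generator_input` are hypothetical names.

```python
import random
from typing import Dict, List

# Hypothetical toy embedding table; a real model would learn these vectors
# or use a pretrained text encoder.
EMBED_DIM = 4
VOCAB = ["a", "red", "bird", "with", "black", "wings"]
_rng = random.Random(0)
EMBEDDINGS: Dict[str, List[float]] = {
    w: [_rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)] for w in VOCAB
}

def encode_text(text: str) -> List[float]:
    """Mean of the embeddings of known tokens (unknown tokens are skipped)."""
    vecs = [EMBEDDINGS[t] for t in text.lower().split() if t in EMBEDDINGS]
    if not vecs:
        return [0.0] * EMBED_DIM
    return [sum(col) / len(vecs) for col in zip(*vecs)]

def generator_input(text: str, noise_dim: int = 8, seed: int = 42) -> List[float]:
    """Concatenate the text code with a Gaussian noise vector z."""
    rng = random.Random(seed)
    z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    return encode_text(text) + z

x = generator_input("A red bird with black wings")
print(len(x))  # 12 = 4 text dims + 8 noise dims
```

Concatenation lets the same noise vector produce different images for different descriptions, which is the usual motivation for encoding the text before generation.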

