Electronic pet based on Kinect technology

An electronic pet based on Kinect technology, applied in fields such as biological models, user/computer interaction input/output, and instruments. It addresses problems of existing electronic pets such as single-mode feedback, the lack of a learning function, and monotony.

Examples

Embodiment 1

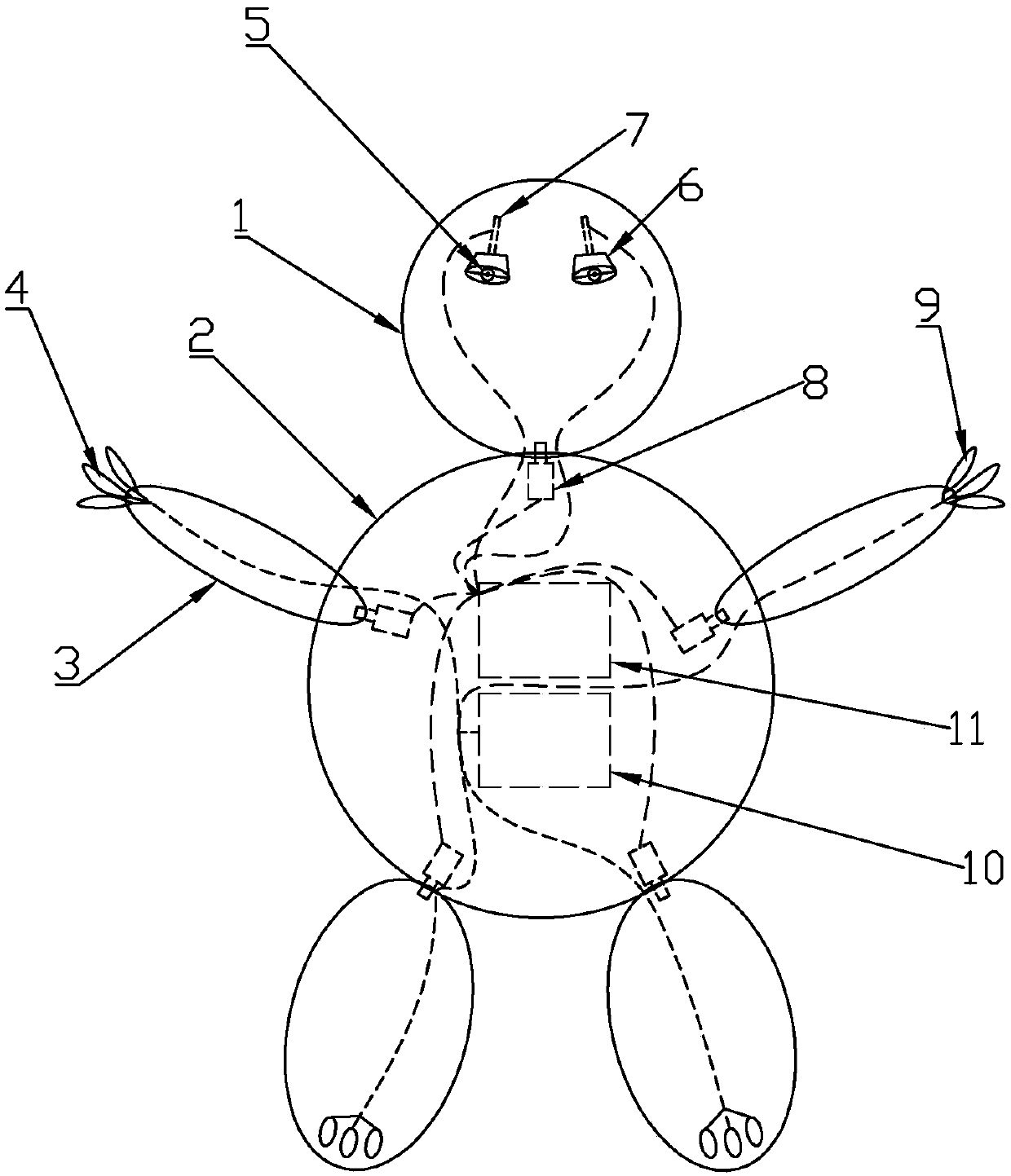

[0023] The present invention provides an electronic pet based on Kinect technology, as shown in Figures 1-2, comprising a logic layer, an image recognition layer, and a control layer. The image recognition layer captures image information through a camera and transmits it to the logic layer; the corresponding action command is then sent to the control layer, and the control layer sends movement signals to the limbs of the electronic pet to control their movement.
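The three-layer data flow in [0023] can be sketched in Python as follows. This is a minimal illustration only: the class names, the `ActionCommand` type, the list-of-lists frame representation, and the brightness rule inside `LogicLayer.decide` are all hypothetical and do not come from the patent text, which does not specify how the logic layer chooses a command.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical frame representation: rows of grayscale values (0-255).
Frame = List[List[int]]


@dataclass
class ActionCommand:
    """Hypothetical command passed from the logic layer to the control layer."""
    limb: str
    motion: str


class ImageRecognitionLayer:
    """Captures image information through a camera (here: any callable)."""

    def __init__(self, camera: Callable[[], Frame]) -> None:
        self.camera = camera

    def capture(self) -> Frame:
        return self.camera()


class LogicLayer:
    """Maps image information to an action command."""

    def decide(self, frame: Frame) -> ActionCommand:
        # Placeholder rule (an assumption, not the patent's logic):
        # a bright frame triggers a tail wag, a dark one a rest pose.
        pixel_count = len(frame) * len(frame[0])
        mean = sum(sum(row) for row in frame) / pixel_count
        motion = "wag" if mean > 127 else "rest"
        return ActionCommand(limb="tail", motion=motion)


class ControlLayer:
    """Sends movement signals to the limbs; here they are only recorded."""

    def __init__(self) -> None:
        self.sent: List[Tuple[str, str]] = []

    def actuate(self, command: ActionCommand) -> None:
        self.sent.append((command.limb, command.motion))


def step(recognition: ImageRecognitionLayer,
         logic: LogicLayer,
         control: ControlLayer) -> ActionCommand:
    """Run one pass: camera -> logic layer -> control layer."""
    frame = recognition.capture()
    command = logic.decide(frame)
    control.actuate(command)
    return command
```

Passing the camera as a plain callable keeps the sketch independent of any Kinect SDK; a real device wrapper could be substituted without changing the other two layers.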

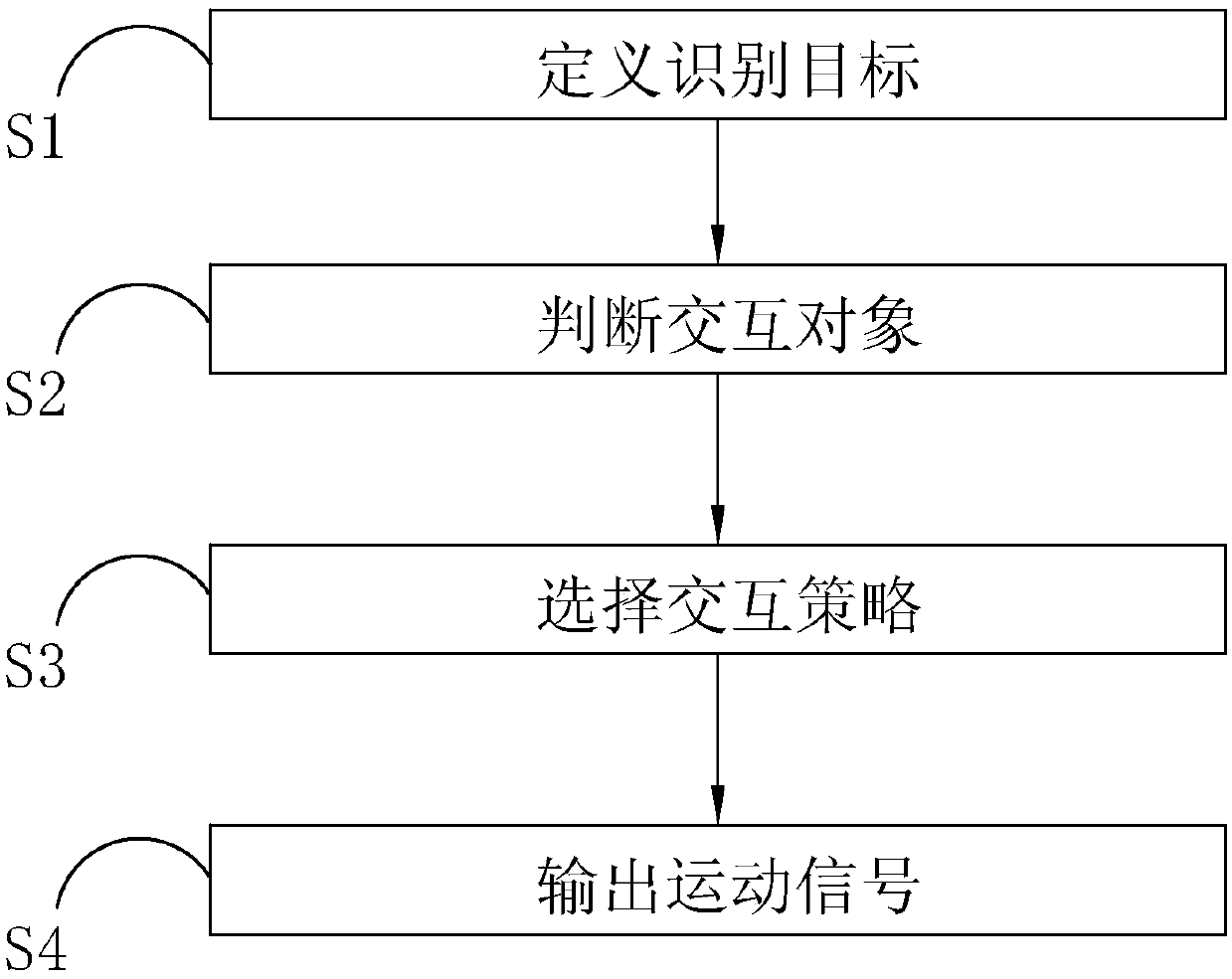

[0024] Further, in the above technical solution, the logic layer performs the following steps:

[0025] S1: Define the recognition target. The logic layer decomposes the image information into individual pixels and connects adjacent pixels that show changes in light intensity or color to form the recognition target;

[0026] S2: Judge the interactive object. The logic layer judges the movement status of the recognition target, defining the motionless recognition target as the ...
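One possible reading of S1 and of the movement check in S2 is sketched below in plain Python. It is an illustration under stated assumptions, not the patented method: the image is assumed to be a grayscale grid, only intensity changes are considered (color changes are omitted), "connecting adjacent pixels" is interpreted as 4-connected flood fill, and movement is judged by comparing centroids between two frames. The function names, the `threshold` value, and the `tolerance` value are all hypothetical.

```python
from collections import deque
from typing import List, Set, Tuple

Pixel = Tuple[int, int]  # (row, column)


def change_mask(img: List[List[int]], threshold: int = 30) -> List[List[bool]]:
    """Mark pixels whose intensity differs from a right or lower
    neighbour by more than `threshold` (both pixels of the pair are marked)."""
    h, w = len(img), len(img[0])
    mask = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for dy, dx in ((0, 1), (1, 0)):
                ny, nx = y + dy, x + dx
                if ny < h and nx < w and abs(img[y][x] - img[ny][nx]) > threshold:
                    mask[y][x] = True
                    mask[ny][nx] = True
    return mask


def connect_targets(mask: List[List[bool]]) -> List[Set[Pixel]]:
    """Group 4-connected marked pixels into candidate recognition targets."""
    h, w = len(mask), len(mask[0])
    seen: Set[Pixel] = set()
    targets: List[Set[Pixel]] = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or (y, x) in seen:
                continue
            component: Set[Pixel] = set()
            queue = deque([(y, x)])
            seen.add((y, x))
            while queue:  # breadth-first flood fill
                cy, cx = queue.popleft()
                component.add((cy, cx))
                for dy, dx in ((0, 1), (1, 0), (0, -1), (-1, 0)):
                    ny, nx = cy + dy, cx + dx
                    if (0 <= ny < h and 0 <= nx < w
                            and mask[ny][nx] and (ny, nx) not in seen):
                        seen.add((ny, nx))
                        queue.append((ny, nx))
            targets.append(component)
    return targets


def centroid(target: Set[Pixel]) -> Tuple[float, float]:
    """Mean (row, column) position of a target's pixels."""
    n = len(target)
    return (sum(y for y, _ in target) / n, sum(x for _, x in target) / n)


def is_moving(prev: Set[Pixel], curr: Set[Pixel], tolerance: float = 1.0) -> bool:
    """Treat a target as moving if its centroid shifts by more than
    `tolerance` pixels along either axis between two frames."""
    (py, px), (cy, cx) = centroid(prev), centroid(curr)
    return abs(py - cy) > tolerance or abs(px - cx) > tolerance
```

In practice a library routine for connected-component labelling would likely replace the hand-written flood fill; the plain-Python version is used here only to keep the example self-contained.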

Embodiment 2

[0035] This embodiment differs from Embodiment 1 in that, while still based on Kinect recognition technology, the program flow is changed: the program is simplified and optimized so that it runs faster.

[0036] The software design is divided into a logic layer and an identification layer. The logic layer is implemented mainly with the .NET Core framework; .NET is also the underlying framework used to control Kinect, and both are developed by Microsoft. .NET Core is a cross-platform runtime framework that Microsoft redeveloped based on the concepts of the earlier .NET Framework. Its main feature is cross-platform support, and it can support both Windows-exclusive UWP development and Xamarin cross-platform mobile development. Microsoft has also open-sourced it to the community, and it is currently developing rapidly.

[0037] The image recognition layer is implemented using the Python language. Using the Python language, we can also use Google's deep learning frame...
