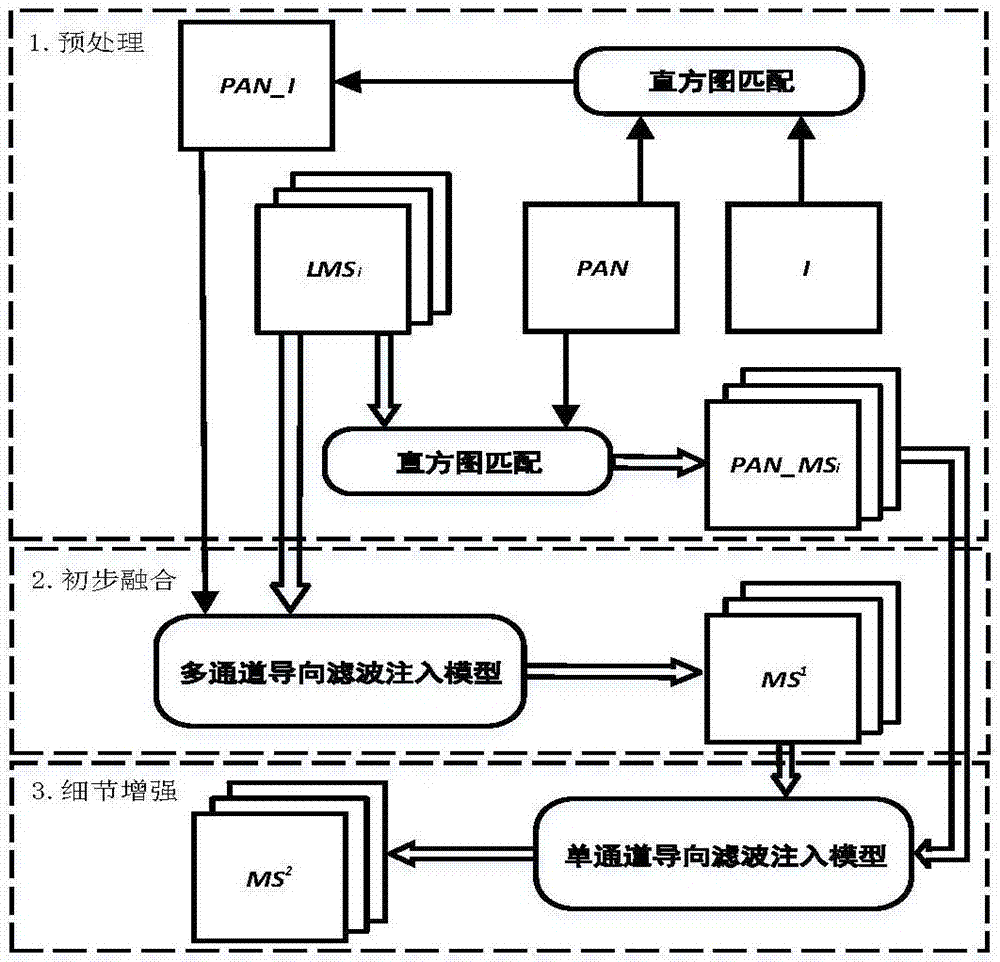

Guided filtering-based two-stage remote sensing image fusion method

A remote sensing image fusion technique based on guided filtering, applied in image enhancement, image data processing, instruments, and related fields. It addresses problems such as spatial and spectral distortion in fused images, spectral distortion introduced by component substitution methods, and methods that are not suitable for particular satellite sensors.

Embodiment Construction

[0018] The present invention is further described below with reference to the accompanying drawings and embodiments. The invention includes, but is not limited to, the following embodiments.

[0019] The present invention comprises the following steps:

[0020] Assume the original multispectral image (MS_i) contains N bands, where the subscript i = 1, ..., N denotes the i-th band of the multi-band image.

[0021] Step 1: upsampling the multispectral image

[0022] Bicubic interpolation is used to upsample the original multispectral image, and the upsampled image is denoted LMS_i.
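The upsampling in Step 1 can be sketched in plain Python as follows. This is a minimal illustration, not the patent's implementation: the kernel parameter `a = -0.5`, the border clamping, the pixel-centre alignment, and the function names `cubic_kernel` and `upsample_bicubic` are all assumptions, and the scale factor is left to the caller because the source does not state the resolution ratio.

```python
import math

def cubic_kernel(x, a=-0.5):
    """Keys cubic convolution kernel; a = -0.5 is an assumed, common choice."""
    x = abs(x)
    if x <= 1.0:
        return (a + 2.0) * x**3 - (a + 3.0) * x**2 + 1.0
    if x < 2.0:
        return a * x**3 - 5.0 * a * x**2 + 8.0 * a * x - 4.0 * a
    return 0.0

def upsample_bicubic(band, scale):
    """Upsample one band (a list of rows) by an integer factor `scale`."""
    rows, cols = len(band), len(band[0])
    out_rows, out_cols = rows * scale, cols * scale
    out = [[0.0] * out_cols for _ in range(out_rows)]
    for r in range(out_rows):
        # Map the output pixel centre back to source coordinates.
        src_r = (r + 0.5) / scale - 0.5
        r0 = math.floor(src_r)
        for c in range(out_cols):
            src_c = (c + 0.5) / scale - 0.5
            c0 = math.floor(src_c)
            value = 0.0
            # Weighted sum over the 4x4 neighbourhood around (src_r, src_c).
            for m in range(-1, 3):
                rr = min(max(r0 + m, 0), rows - 1)  # clamp at the border
                wr = cubic_kernel(src_r - (r0 + m))
                for n in range(-1, 3):
                    cc = min(max(c0 + n, 0), cols - 1)
                    wc = cubic_kernel(src_c - (c0 + n))
                    value += band[rr][cc] * wr * wc
            out[r][c] = value
    return out

def upsample_multispectral(ms_bands, scale):
    """Apply the same upsampling to every band MS_i to obtain LMS_i."""
    return [upsample_bicubic(band, scale) for band in ms_bands]
```

In practice a library routine (for example an image-resize function with cubic interpolation) would normally replace the explicit loops; the loop form is shown only to make the interpolation weights visible.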

[0023] Step 2: histogram matching

[0024] Calculate the intensity component INT, which can be estimated from a linear combination of the upsampled multispectral bands (LMS_i):

[0025]

[0026] where the linear combination coefficients are obtained by solving the following optimization model:

[0027]
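Paragraph [0024] describes INT as a linear combination of the upsampled bands. A minimal sketch of that pixel-wise weighted sum follows, assuming the coefficients are already known. The optimization model that produces them is not reproduced in this text, so the `weights` argument, its example values, and the function name `intensity_component` are hypothetical placeholders.

```python
def intensity_component(lms_bands, weights):
    """Pixel-wise weighted sum of the upsampled bands LMS_i.

    `weights` stands in for the linear combination coefficients; how they
    are estimated is not specified here and is left to the caller.
    """
    if len(lms_bands) != len(weights):
        raise ValueError("one weight per band is required")
    rows, cols = len(lms_bands[0]), len(lms_bands[0][0])
    intensity = [[0.0] * cols for _ in range(rows)]
    for band, w in zip(lms_bands, weights):
        for r in range(rows):
            for c in range(cols):
                intensity[r][c] += w * band[r][c]
    return intensity

# Two 2x2 bands with illustrative weights (not values from the source).
lms = [[[1.0, 2.0], [3.0, 4.0]], [[10.0, 20.0], [30.0, 40.0]]]
print(intensity_component(lms, [0.5, 0.5]))
# prints [[5.5, 11.0], [16.5, 22.0]]
```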

[002...
