Dynamic neural network model training method based on ensemble learning, and training device thereof

A dynamic neural network model training technology, applied in the fields of biological neural network models, neural learning methods, and neural architectures. It addresses three problems: the internal input of the system cannot be described effectively, the classification performance of the neural network cannot be guaranteed, and the design of convolution and pooling functions is complicated and difficult. It achieves the effects of saving training time, reducing design difficulty, and improving accuracy.

Examples

Embodiment 1

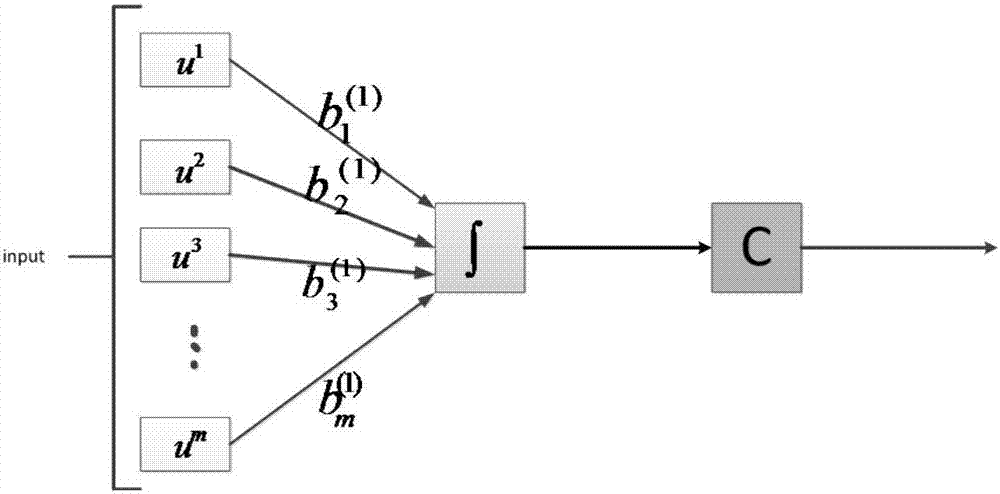

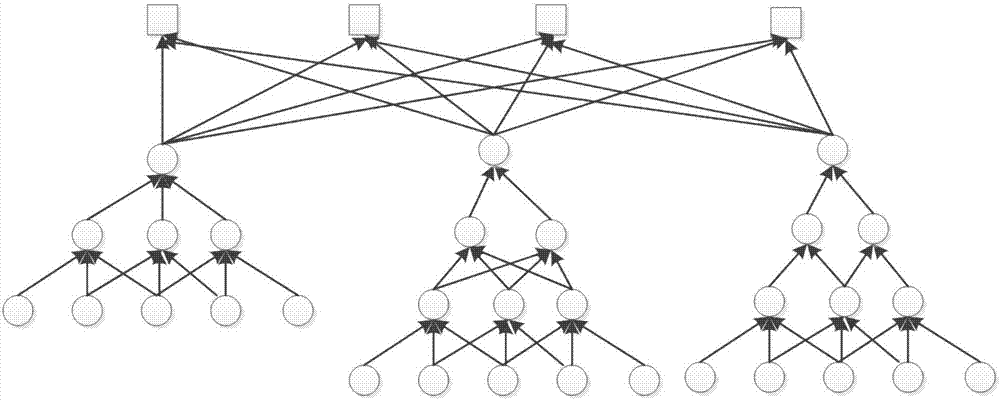

[0056] Based on the dynamic neurons described above, this embodiment provides an ensemble-learning-based training method for a dynamic neural network model, comprising the following steps:

[0057] Step 1: For the i-th sub-model (i = 1, 2, ..., k), the original data is used as the input to the neurons of the first layer; after processing by the dynamic neurons, the resulting output values are taken as the features of that layer;

[0058] Optionally, the original data can also be divided into a training set and a validation set: the training set is used to train the neural network model, and the validation set is used to evaluate model performance afterwards.
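The optional split in [0058] can be done with a seeded shuffle, as in the minimal sketch below. The 20% validation ratio, the seed, and the helper name `train_val_split` are illustrative assumptions; the description does not specify them.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_val_split(
    data: Sequence[T], val_ratio: float = 0.2, seed: int = 0
) -> Tuple[List[T], List[T]]:
    """Hypothetical helper: shuffle a copy of the data, then split it.

    Returns (training set, validation set). val_ratio and seed are
    illustrative defaults, not values taken from the patent.
    """
    if not 0.0 <= val_ratio < 1.0:
        raise ValueError("val_ratio must be in [0, 1)")
    items = list(data)                   # copy so the caller's data is untouched
    random.Random(seed).shuffle(items)   # seeded shuffle for reproducibility
    n_val = int(round(len(items) * val_ratio))
    return items[n_val:], items[:n_val]


samples = list(range(10))
train, val = train_val_split(samples, val_ratio=0.2, seed=42)
print(len(train), len(val))  # prints: 8 2
```

Seeding the shuffle keeps the validation set fixed across runs, so performance comparisons between sub-models are made on the same held-out samples.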

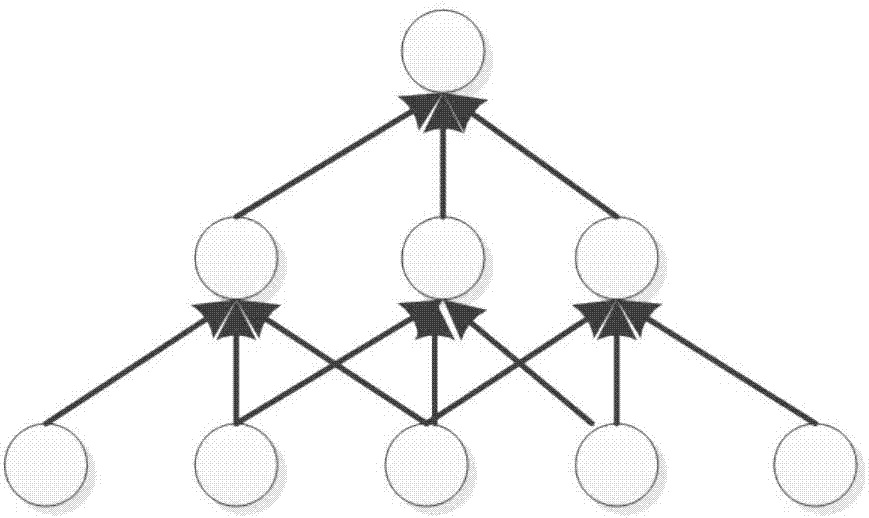

[0059] Step 2: Increase the number of neuron layers: use the output features of the previous layer as the input to the next layer of neurons to obtain the features of that layer, and repeat this step until the number of layers reaches a preset value r;
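Steps 1 and 2 can be sketched as follows for k sub-models with r layers each. The definition of the dynamic neuron itself is in a part of the description not reproduced here, so the neuron model below is a hypothetical stand-in: a first-order leaky integrator whose internal state evolves over the time steps of an input sequence. The names `DynamicLayer`, `build_sub_model`, and `extract_features`, and the values of `decay`, `hidden`, k, and r, are assumptions for illustration only.

```python
import numpy as np


class DynamicLayer:
    """Hypothetical layer of first-order 'dynamic' neurons (leaky integrators).

    Each neuron keeps an internal state s that evolves over the time steps
    of the input: s_t = decay * s_{t-1} + W x_t + b, output y_t = tanh(s_t).
    This is an assumed neuron model, not the one defined in the patent.
    """

    def __init__(self, n_in: int, n_out: int, rng: np.random.Generator, decay: float = 0.5):
        self.W = rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_out, n_in))
        self.b = np.zeros(n_out)
        self.decay = decay

    def forward(self, x: np.ndarray) -> np.ndarray:
        """x has shape (T, n_in); returns this layer's features, shape (T, n_out)."""
        state = np.zeros(self.W.shape[0])
        outputs = []
        for x_t in x:
            state = self.decay * state + self.W @ x_t + self.b
            outputs.append(np.tanh(state))
        return np.stack(outputs)


def build_sub_model(n_in: int, hidden: int, r: int, seed: int) -> list:
    """Stack r dynamic layers; a different seed gives each sub-model different weights."""
    rng = np.random.default_rng(seed)
    sizes = [n_in] + [hidden] * r
    return [DynamicLayer(sizes[j], sizes[j + 1], rng) for j in range(r)]


def extract_features(layers: list, x: np.ndarray) -> np.ndarray:
    """Step 1: raw data enters layer 1. Step 2: each layer's output feeds the next."""
    features = x
    for layer in layers:
        features = layer.forward(features)
    return features


k, r = 3, 4
x = np.random.default_rng(0).normal(size=(20, 5))  # 20 time steps, 5 input channels
sub_models = [build_sub_model(n_in=5, hidden=8, r=r, seed=i) for i in range(k)]
feats = [extract_features(m, x) for m in sub_models]
print([f.shape for f in feats])  # prints: [(20, 8), (20, 8), (20, 8)]
```

Giving each sub-model its own seed is one simple way to obtain the diversity that ensemble learning relies on; the patent may use a different mechanism.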

[0060] The same layer in the same neural network h...

Embodiment 2

[0076] Based on the method of Embodiment 1, the present invention also provides a computer device comprising a memory, a processor, and a computer program stored in the memory and executable on the processor. The computer program is used to train the ensemble dynamic neural network model, and when executing the program, the processor performs the following steps:

[0077] Step 1: For each sub-model in the ensemble dynamic neural network model, the original data is used as the input to the neurons of the first layer, and the output features of that layer are obtained through processing by the dynamic neurons;

[0078] Step 2: Increase the number of neuron layers: use the output features of the previous layer as the input to the next layer of neurons to obtain the features of that layer, and repeat this step until the number of layers reaches a preset value;

[0079] Step 3: Establish a fully connected layer between the output feature of the last layer...
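Below is a minimal sketch of Step 3 for each sub-model, together with one possible way of combining the k sub-models. The text is truncated before it states how the fully connected layer is trained or how sub-model outputs are merged, so both parts are assumptions. The layer is a softmax classifier fitted by batch gradient descent on cross-entropy, and the ensemble averages class probabilities. The feature vectors are synthetic stand-ins for last-layer features, and `FullyConnectedClassifier` and `ensemble_predict` are hypothetical names.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


class FullyConnectedClassifier:
    """Fully connected layer from last-layer features to class probabilities."""

    def __init__(self, n_features: int, n_classes: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.W = rng.normal(0.0, 0.01, size=(n_features, n_classes))
        self.b = np.zeros(n_classes)

    def predict_proba(self, F: np.ndarray) -> np.ndarray:
        return softmax(F @ self.W + self.b)

    def fit(self, F: np.ndarray, y: np.ndarray, lr: float = 0.1, epochs: int = 200):
        """Assumed training rule: batch gradient descent on softmax cross-entropy."""
        n = F.shape[0]
        Y = np.eye(self.W.shape[1])[y]                 # one-hot labels
        for _ in range(epochs):
            grad = (self.predict_proba(F) - Y) / n     # dLoss/dlogits, averaged
            self.W -= lr * F.T @ grad
            self.b -= lr * grad.sum(axis=0)
        return self


def ensemble_predict(heads: list, feature_sets: list) -> np.ndarray:
    """Assumed combination rule: average the k sub-models' class probabilities."""
    probs = np.mean([h.predict_proba(F) for h, F in zip(heads, feature_sets)], axis=0)
    return probs.argmax(axis=1)


# Synthetic last-layer features for k = 3 sub-models and 2 categories.
rng = np.random.default_rng(1)
y = np.repeat([0, 1], 50)
shift = np.where(y == 1, 1.0, -1.0)[:, None]
feature_sets = [rng.normal(size=(100, 8)) + shift for _ in range(3)]
heads = [FullyConnectedClassifier(8, 2, seed=i).fit(F, y) for i, F in enumerate(feature_sets)]
pred = ensemble_predict(heads, feature_sets)
print("training accuracy:", (pred == y).mean())
```

Probability averaging is only one ensemble rule. Majority voting or a learned weighting would fit the same structure by changing `ensemble_predict`.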

Embodiment 3

[0091] A computer-readable storage medium storing a computer program for training an ensemble dynamic neural network model, including a memory, a processor, and a computer program stored in the memory and executable on the processor; when the program is executed by the processor, the following steps are performed:

[0092] Step 1: For each sub-model in the ensemble dynamic neural network model, the original data is used as the input to the neurons of the first layer, and the output features of that layer are obtained through processing by the dynamic neurons;

[0093] Step 2: Increase the number of neuron layers: use the output features of the previous layer as the input to the next layer of neurons to obtain the features of that layer, and repeat this step until the number of layers reaches a preset value;

[0094] Step 3: Establish a fully connected layer between the output feature of the last layer and the category to which it ...
