Method for locating a following target, and following device

A target-locating technology for following devices, applied in the field of robotics, that addresses problems such as poor accuracy of relative-position information, long processing time, and an increased risk of losing luggage.


AI Technical Summary

Problems solved by technology

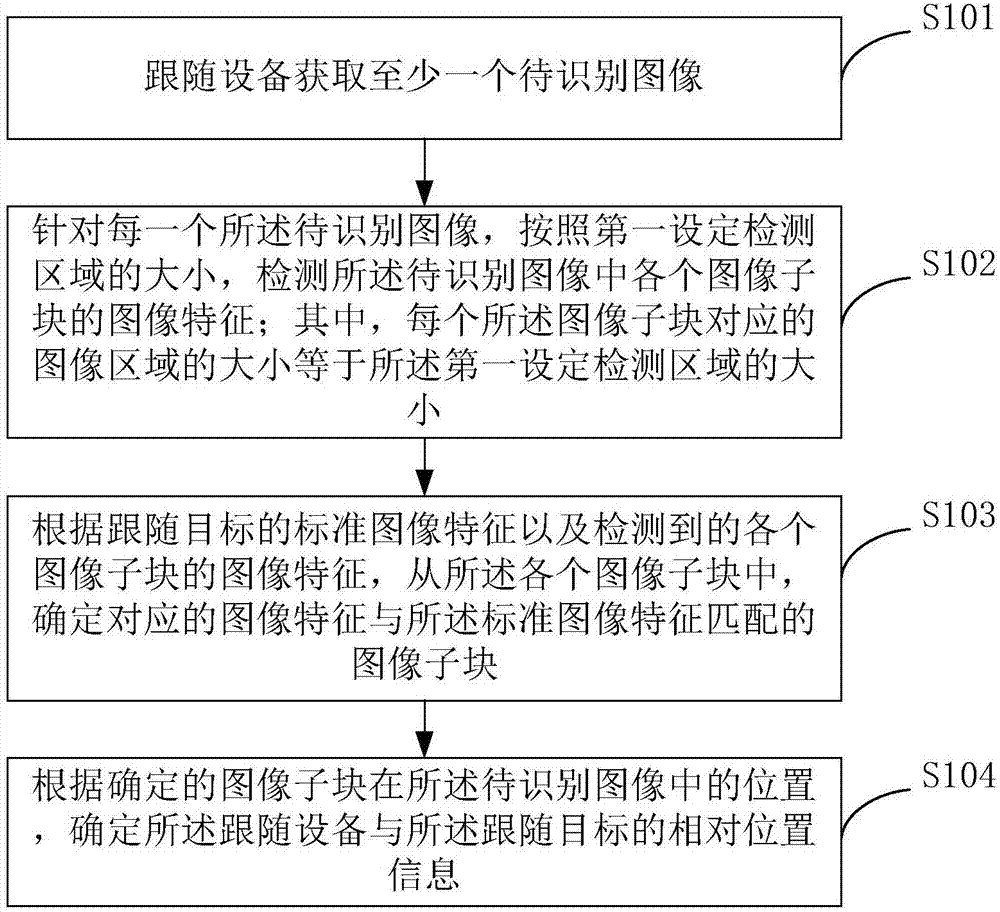

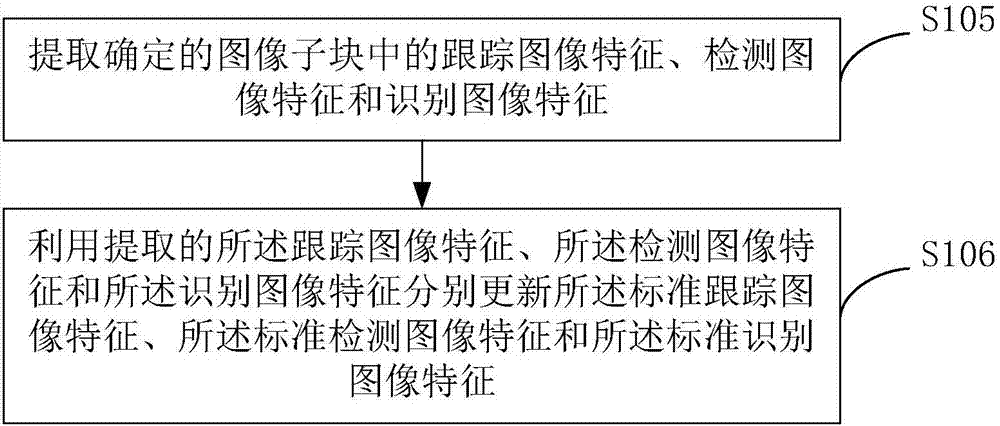


Examples

Embodiment 1

[0123] Embodiment 1: Convert the image into several color spaces, such as RGB, HSV (hue, saturation, value) and LAB. In each color space, construct M regions, each corresponding to a range (sub-volume) of that three-dimensional color space. Each color space thus has an M-dimensional feature vector F, which is initialized to the zero vector.
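The patent does not say how the M regions are chosen. A minimal sketch, assuming each channel is cut into `bins` equal-width intervals so that M = bins³ box-shaped regions; `region_index` and `init_feature` are hypothetical helper names, and `channel_max` lets channels with different ranges (e.g. hue in [0, 360)) share the same code.

```python
def region_index(color, bins=4, channel_max=(256, 256, 256)):
    """Map a 3-channel color to one of M = bins**3 equal-width box regions.

    Assumption: channel k takes values in [0, channel_max[k]); the patent
    does not specify the partition.
    """
    idx = 0
    for value, vmax in zip(color, channel_max):
        # Interval index for this channel, clamped to [0, bins - 1]
        b = min(max(int(value * bins / vmax), 0), bins - 1)
        idx = idx * bins + b  # mixed-radix combination of the three indices
    return idx


def init_feature(bins=4):
    """Return the zero-initialized M-dimensional feature vector F."""
    return [0.0] * (bins ** 3)
```

For example, pure red `(255, 0, 0)` with 4 bins per channel falls in region 3·16 + 0·4 + 0 = 48.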

[0124] Examine each pixel p(x, y), where (x, y) is the coordinate of p in the image. If the color value of p(x, y) falls in the i-th region, update $F_i \leftarrow F_i + Q(x, y)$, where $Q(x, y) \propto \mathcal{N}(W/2, H/2, \sigma_1^2, \sigma_2^2, \rho)$ evaluated at (x, y). Here $F_i$ is the i-th component of F; $\mathcal{N}(\cdot)$ is a two-dimensional Gaussian distribution centered at the image center (W/2, H/2), so pixels near the center contribute more; W and H are the width and height of the pedestrian image; $\sigma_1^2$ and $\sigma_2^2$ are the variances in x and y, respectively; and ρ is a constant (in this standard parameterization, the correlation coefficient between x and y). Finally, the feature vectors from all color spaces are concatenated to form the identification feature vector for this strip.
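A sketch of the per-pixel accumulation described above, under stated assumptions: only RGB and HSV (via Python's standard `colorsys` module) are used, and LAB is omitted because the standard library has no converter for it; each color space is split into equal-width boxes; Q(x, y) is the bivariate Gaussian kernel exp(−(u² − 2ρuv + v²) / (2(1 − ρ²))) with u = (x − W/2)/σ₁ and v = (y − H/2)/σ₂, i.e. the density with its normalizing constant dropped, which the "∝" permits. The default σ values (a quarter of W and H) are arbitrary, and all function names are hypothetical.

```python
import colorsys
import math


def gaussian_weight(x, y, width, height, sigma1, sigma2, rho=0.0):
    """Q(x, y): unnormalized bivariate Gaussian centered at (W/2, H/2).

    Assumes rho is the x-y correlation coefficient, with |rho| < 1.
    """
    u = (x - width / 2) / sigma1
    v = (y - height / 2) / sigma2
    return math.exp(-0.5 * (u * u - 2 * rho * u * v + v * v) / (1 - rho * rho))


def region_index(color, bins, channel_max):
    """Index of the equal-width box region (out of bins**3) containing color."""
    idx = 0
    for value, vmax in zip(color, channel_max):
        b = min(max(int(value * bins / vmax), 0), bins - 1)
        idx = idx * bins + b
    return idx


def strip_feature(strip, bins=4, sigma1=None, sigma2=None, rho=0.0):
    """Identification feature for one strip given as rows of (r, g, b) in 0..255."""
    height, width = len(strip), len(strip[0])
    if sigma1 is None:
        sigma1 = width / 4  # arbitrary default (assumption)
    if sigma2 is None:
        sigma2 = height / 4  # arbitrary default (assumption)
    m = bins ** 3
    f_rgb = [0.0] * m  # F starts as the zero vector in each color space
    f_hsv = [0.0] * m
    for y, row in enumerate(strip):
        for x, (r, g, b) in enumerate(row):
            q = gaussian_weight(x, y, width, height, sigma1, sigma2, rho)
            f_rgb[region_index((r, g, b), bins, (256, 256, 256))] += q
            # colorsys takes and returns floats in [0, 1]
            hsv = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            f_hsv[region_index(hsv, bins, (1.0, 1.0, 1.0))] += q
    return f_rgb + f_hsv  # concatenate the per-color-space vectors
```

For a uniformly red strip such as `[[(255, 0, 0)] * 4] * 2`, all of the weight lands in a single RGB region and a single HSV region; dropping the normalizing constant only rescales F, which does not matter if the vectors are later compared after normalization.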

Embodiment 2

[0126] Divide the image strip horizontally into several small blocks. Project all pixels in each block onto an N-dimensional color-name space, then compute the proportion of the block's pixels assigned to each color name to obtain an N-dimensional vector.
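The patent does not specify the color-name mapping; published color-naming methods typically use a learned lookup table from RGB to a fixed set of names. The sketch below substitutes a hypothetical seven-entry palette (`PALETTE`) with nearest-prototype (hard) assignment, and assumes the strip is cut into equal-width blocks along its width. All names here are illustrative, not the patent's.

```python
# Hypothetical palette standing in for a learned color-name mapping (N = 7 here).
PALETTE = [
    ("black", (0, 0, 0)),
    ("white", (255, 255, 255)),
    ("gray", (128, 128, 128)),
    ("red", (255, 0, 0)),
    ("green", (0, 128, 0)),
    ("blue", (0, 0, 255)),
    ("yellow", (255, 255, 0)),
]


def color_name_index(pixel):
    """Index of the palette color nearest to pixel (squared RGB distance)."""
    def dist(entry):
        return sum((a - b) ** 2 for a, b in zip(pixel, entry[1]))
    return min(range(len(PALETTE)), key=lambda i: dist(PALETTE[i]))


def split_blocks(strip, num_blocks):
    """Cut a strip (list of pixel rows) into num_blocks side-by-side blocks."""
    width = len(strip[0])
    edges = [round(k * width / num_blocks) for k in range(num_blocks + 1)]
    return [[row[edges[k]:edges[k + 1]] for row in strip] for k in range(num_blocks)]


def color_name_distribution(block):
    """N-dim vector: fraction of the block's pixels assigned to each color name."""
    counts = [0] * len(PALETTE)
    total = 0
    for row in block:
        for pixel in row:
            counts[color_name_index(pixel)] += 1
            total += 1
    if total == 0:  # empty block (more blocks than columns): zero vector
        return [0.0] * len(PALETTE)
    return [c / total for c in counts]
```

A soft assignment (each pixel spreading probability mass over several names) would fit the "proportion distribution" wording equally well; hard assignment is used here only to keep the sketch short.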

[0127] Weight the N-dimensional vector obtained for each block in the previous step by $Q(x, y) \propto \mathcal{N}(W/2, H/2, \sigma_1^2, \sigma_2^2, \rho)$, where (x, y) is the coordinate of the block's center in the image, $\mathcal{N}(\cdot)$ is a two-dimensional Gaussian distribution, W and H are the width and height of the pedestrian image, $\sigma_1^2$ and $\sigma_2^2$ are the variances in x and y, respectively, and ρ is a constant. Finally, the weighted vectors of all blocks are concatenated to form the identification feature vector for this strip.
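The weighting and concatenation step can be sketched as follows, assuming (as a hypothetical choice) that Q is the bivariate Gaussian kernel with its normalizing constant dropped and ρ taken as the x-y correlation coefficient; `block_hists` would be the per-block N-dimensional vectors and `block_centers` their center coordinates on the pedestrian image.

```python
import math


def gaussian_weight(x, y, width, height, sigma1, sigma2, rho=0.0):
    """Unnormalized bivariate Gaussian centered at (W/2, H/2); needs |rho| < 1."""
    u = (x - width / 2) / sigma1
    v = (y - height / 2) / sigma2
    return math.exp(-0.5 * (u * u - 2 * rho * u * v + v * v) / (1 - rho * rho))


def weighted_strip_feature(block_hists, block_centers, width, height,
                           sigma1, sigma2, rho=0.0):
    """Scale each block's vector by Q at the block center, then concatenate."""
    feature = []
    for hist, (cx, cy) in zip(block_hists, block_centers):
        q = gaussian_weight(cx, cy, width, height, sigma1, sigma2, rho)
        feature.extend(q * v for v in hist)
    return feature
```

With a 100 × 200 image, σ₁ = 20 and σ₂ = 40, a block centered at the image center keeps its vector unchanged (Q = 1), while a block centered 40 pixels to the right is scaled by e⁻², so blocks far from the pedestrian's center contribute less.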

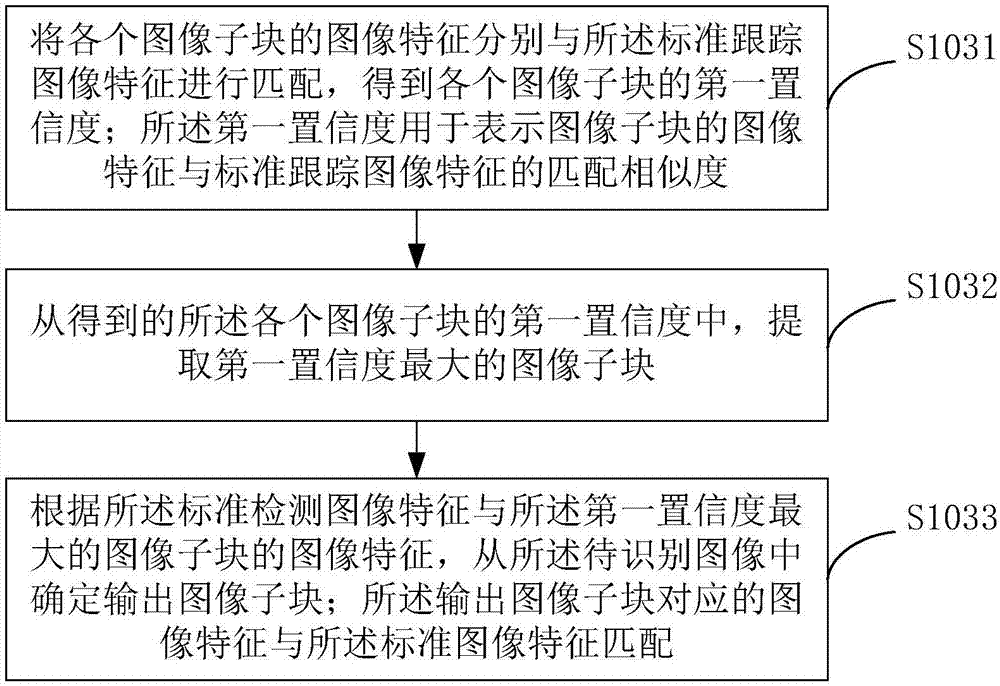

[0128] Referring to Figure 4, in step 103 above, according to the standard image features of the following target and the detected image features of each image sub-blo...
