Binocular full-view visual robot self-positioning method based on SURF algorithm

A technique for autonomous positioning with panoramic vision, applicable to instruments, photo interpretation, photogrammetry/video surveying, etc. It addresses problems such as the instability and acceleration of edge feature points.


Embodiment

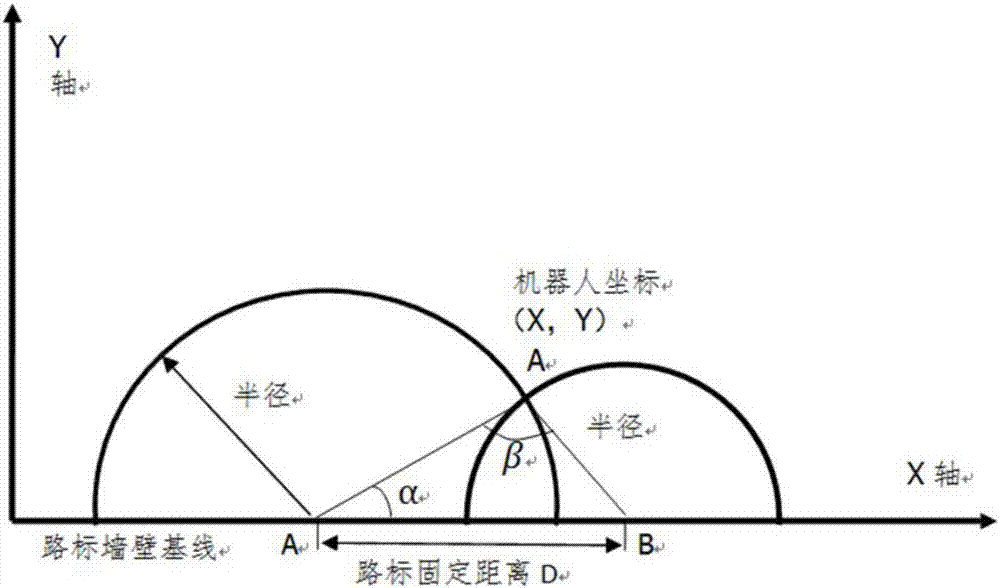

[0067] 1. Set artificial landmarks as prior location information

[0068] Mobile robots often work in unknown, unstructured environments while executing tasks. To turn an unfamiliar environment into a familiar one, landmarks are used as prior location information. An image library of the environment is collected and tested with the SURF feature point extraction algorithm in order to design landmarks that can be matched with weak interference. Two or more artificial landmarks with different characteristics are set up at equal intervals and equal heights on the side of the robot's working environment that has little occlusion.
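The placement rule above (two or more distinct landmarks, equal spacing, equal height) can be sketched as a small prior map. This is only an illustration: the `Landmark` record, the `place_landmarks` helper, the wall direction vector and the metric units are all assumptions introduced here, not part of the patent text.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Landmark:
    """Hypothetical record for one artificial landmark in the prior map."""
    name: str      # distinguishes landmarks with different characteristics
    x: float       # world x coordinate (assumed metres)
    y: float       # world y coordinate (assumed metres)
    height: float  # mounting height, identical for all landmarks


def place_landmarks(names, start_xy, direction, spacing, height):
    """Place landmarks at equal intervals and equal height along one side.

    names     -- unique landmark identifiers, at least two
    start_xy  -- (x, y) of the first landmark
    direction -- (dx, dy) vector along the low-occlusion side (any length)
    spacing   -- distance between neighbouring landmarks
    """
    if len(names) < 2:
        raise ValueError("at least two landmarks are required")
    if len(set(names)) != len(names):
        raise ValueError("landmark names must be distinct")
    norm = math.hypot(direction[0], direction[1])
    if norm == 0:
        raise ValueError("direction must be a non-zero vector")
    ux, uy = direction[0] / norm, direction[1] / norm
    return [
        Landmark(name, start_xy[0] + k * spacing * ux,
                 start_xy[1] + k * spacing * uy, height)
        for k, name in enumerate(names)
    ]


prior_map = place_landmarks(["A", "B", "C"], (0.0, 0.0), (1.0, 0.0), 2.0, 1.5)
```

Keeping the known world positions in one list like this is just one convenient way to look them up once a landmark has been matched in the image.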

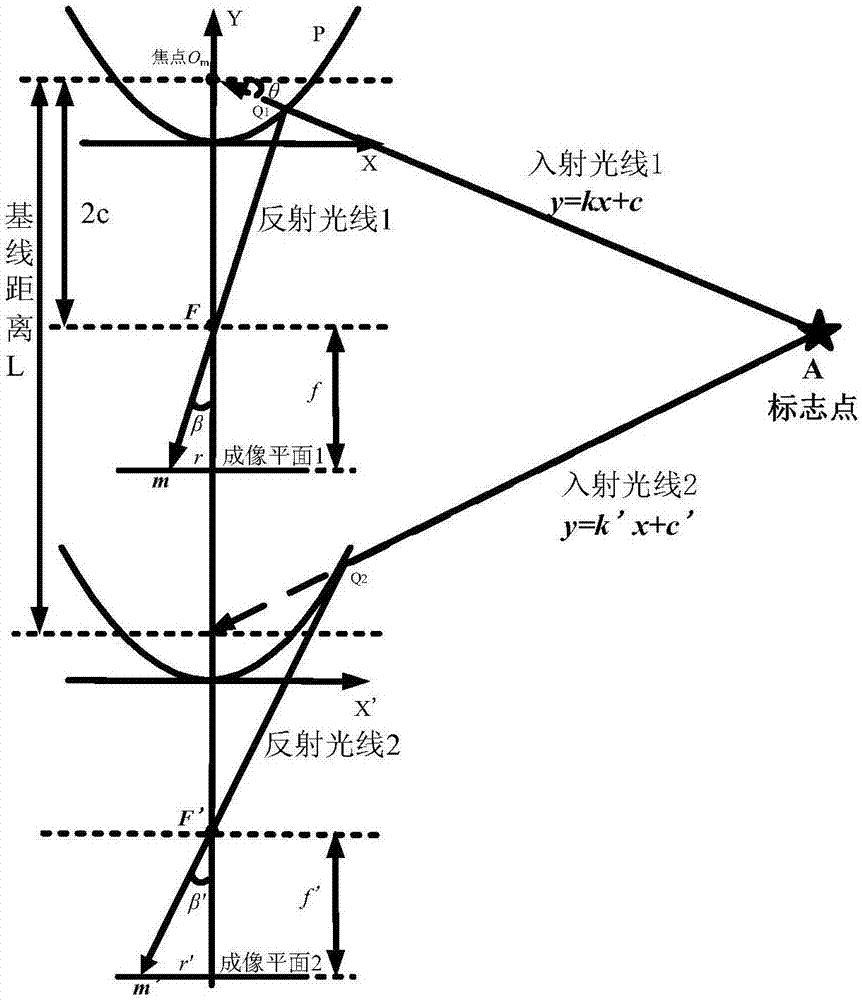

[0069] 2. Improved MDGHM-SURF feature matching algorithm

[0070] The improved MDGHM-SURF algorithm is used for landmark feature matching and positioning. The panoramic image mask is sampled at equal intervals and a pixel coordinate transformation is performed. The (p, q)-order improved discrete Gaussian-Hermite moment of the panoramic image I(i, j) is de...

Specific Embodiment

[0091] Take landmark A as the object of study. The coordinates of the panorama center are G(x₀, y₀), and the pixel position of landmark A in the panoramic image at time t is assumed to be A(ρ,θ,t). A circular detection window of radius r, centered on A, is used for SURF feature detection and for landmark matching and positioning. Suppose the robot rotates in place with angular velocity ω(t). Because the distance between the robot and the landmark remains unchanged, the trajectory of the landmark in the panoramic image is an arc centered on the panorama center G with radius ρ. The detection area at time t′ therefore rotates to a circular region centered on A′(ρ,θ+ω(t)Δt,t′). When the translation speed of the robot is taken into account, the direction of maximum gradient of the landmark's pixel change in the image is the velocity direction, and when the robot is running at the maximum speed, the fastest pix...
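The in-place rotation case can be illustrated with a minimal sketch that moves the detection window center from A(ρ,θ,t) to A′(ρ,θ+ω(t)Δt,t′) and converts it to pixel coordinates around G. The function names, the choice of measuring θ from the image x-axis, and treating ω as constant over Δt are assumptions made for illustration; the translation case is not covered because the source text is truncated there.

```python
import math


def predict_window_center(center, rho, theta, omega, dt):
    """Predict the detection-window center after an in-place rotation.

    center -- (x0, y0), pixel coordinates of the panorama center G
    rho    -- radial pixel distance of the landmark from G (unchanged)
    theta  -- polar angle of the landmark at time t, in radians
              (assumed to be measured from the image x-axis)
    omega  -- angular velocity, assumed constant over dt, in rad/s
    dt     -- elapsed time t' - t, in seconds
    Returns ((rho, theta_new), (x, y)).
    """
    x0, y0 = center
    theta_new = theta + omega * dt  # A'(rho, theta + omega * dt, t')
    x = x0 + rho * math.cos(theta_new)
    y = y0 + rho * math.sin(theta_new)
    return (rho, theta_new), (x, y)


def in_window(pixel, window_center, r):
    """Return True if a pixel lies inside the circular window of radius r."""
    dx = pixel[0] - window_center[0]
    dy = pixel[1] - window_center[1]
    return math.hypot(dx, dy) <= r


# Example: landmark 50 px from G, robot turning at 0.2 rad/s for 0.1 s.
polar, xy = predict_window_center((320.0, 240.0), 50.0, 0.0, 0.2, 0.1)
```

Restricting feature detection to pixels for which `in_window` is true is one way to realize the circular detection window described above; the radius r would have to be chosen large enough to absorb prediction error.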
