A multi-modal data fusion method based on deep learning

A technology in the fields of data fusion and deep learning, applied to machine learning. It addresses the problem of fusing data from multiple modalities, with the effects of facilitating subsequent processing, achieving data compression, and simplifying the fusion process.

Examples

Embodiment 1

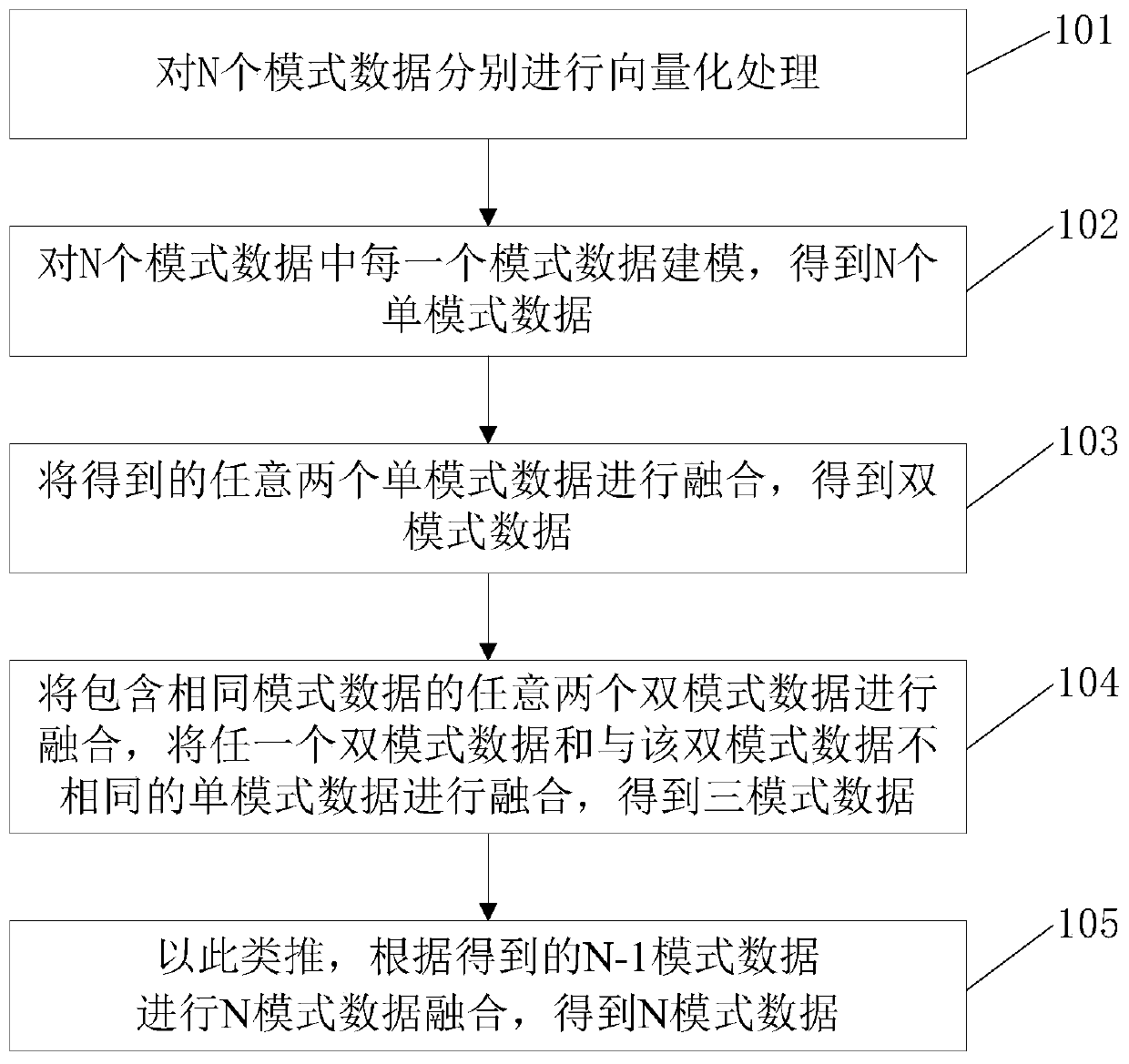

[0044] Referring to Figure 1, an embodiment of the present invention provides a multi-modal data fusion method based on deep learning. The method comprises:

[0045] 101. Vectorize the data of each of the N modalities separately, where N is a natural number and the N modalities include sensor data;

[0046] In this embodiment of the present invention, N is set to 4; that is, in addition to sensor data, the four modalities include audio data, image data, and text data.
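Step 101 treats each of the four modalities independently, producing one vector per modality before any fusion takes place. The sketch below shows only that dispatch structure. Every vectorizer in it is a hypothetical placeholder (flattening, scaling, and a character-count vector for text), not the processing used in the embodiment; the audio placeholder simply produces the raw float vector that would serve as an input sample x^(i) to the sparse autoencoder described from [0047] onward.

```python
import numpy as np

def vectorize_sensor(readings):
    # Hypothetical placeholder: sensor readings used directly as a float vector.
    return np.asarray(readings, dtype=float).ravel()

def vectorize_audio(samples):
    # Hypothetical placeholder: raw audio samples as a float vector; in the
    # embodiment this vector would be an input x^(i) to the sparse autoencoder.
    return np.asarray(samples, dtype=float).ravel()

def vectorize_image(pixels):
    # Hypothetical placeholder: flatten the pixel array and scale 8-bit values to [0, 1].
    return np.asarray(pixels, dtype=float).ravel() / 255.0

def vectorize_text(text, dim=16):
    # Hypothetical placeholder: character counts, each character bucketed by ord(ch) % dim.
    vec = np.zeros(dim)
    for ch in text:
        vec[ord(ch) % dim] += 1.0
    return vec

# One vectorizer per modality (N = 4 in this embodiment).
VECTORIZERS = {
    "sensor": vectorize_sensor,
    "audio": vectorize_audio,
    "image": vectorize_image,
    "text": vectorize_text,
}

def vectorize_modalities(data):
    """Vectorize each modality separately, returning one vector per modality."""
    return {name: VECTORIZERS[name](value) for name, value in data.items()}

vectors = vectorize_modalities({
    "sensor": [21.5, 0.98, 3.2],
    "audio": [0.0, 0.1, -0.1, 0.05],
    "image": [[0, 255], [128, 64]],
    "text": "fusion",
})
for name, vec in vectors.items():
    print(name, vec.shape)
```

Keeping the vectorizers in a dictionary keyed by modality makes it straightforward to change N by adding or removing entries.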

[0047] Specifically, the audio data is sparsified and vectorized as follows:

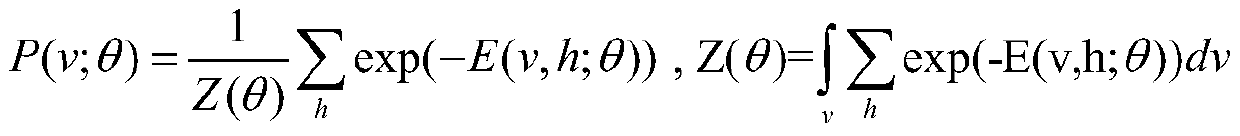

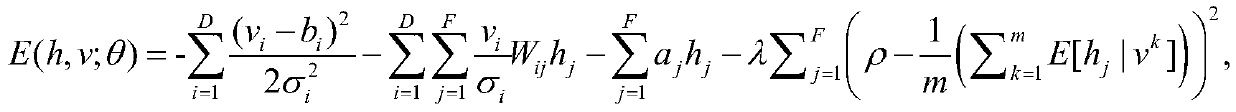

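[0048] The average activation of the j-th hidden-layer neuron is obtained as

$$\hat{\rho}_j = \frac{1}{m}\sum_{i=1}^{m}\left[a_j\left(x^{(i)}\right)\right]$$

where m is the number of audio data samples, $x^{(i)}$ denotes the i-th audio data sample, and $a_j(x^{(i)})$ is the activation of hidden neuron j for input $x^{(i)}$;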
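The average activation $\hat{\rho}_j$ is simply the mean of neuron j's output over all m samples. The sketch below computes it for every hidden neuron at once, assuming a sigmoid hidden layer (the activation function is not stated in the visible text) and hypothetical parameter names `W1` and `b1` for the encoder weights and biases.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def average_activation(X, W1, b1):
    """rho_hat[j] = (1/m) * sum_i a_j(x^(i)) for an assumed sigmoid hidden layer.

    X:  (m, d) matrix, one vectorized audio sample x^(i) per row.
    W1: (d, n) encoder weights; b1: (n,) biases (hypothetical names).
    """
    A = sigmoid(X @ W1 + b1)   # A[i, j] = a_j(x^(i)), shape (m, n)
    return A.mean(axis=0)      # average over the m samples, shape (n,)

rng = np.random.default_rng(0)
X = rng.random((8, 5))                     # m = 8 samples, d = 5 features
W1 = rng.normal(scale=0.1, size=(5, 3))    # n = 3 hidden neurons
b1 = np.zeros(3)
rho_hat = average_activation(X, W1, b1)
print(rho_hat.shape)  # (3,)
```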

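[0049] Here, $\mathrm{KL}(\rho\,\|\,\hat{\rho}_j) = \rho\log\frac{\rho}{\hat{\rho}_j} + (1-\rho)\log\frac{1-\rho}{1-\hat{\rho}_j}$ is the relative entropy between two Bernoulli distributions with means $\rho$ and $\hat{\rho}_j$, $\rho$ is the sparsity parameter, $\hat{\rho}_j$ is the average activation of neuron j in the hidden layer, and n is the number of neurons in the hidden layer; and indepen...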
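The relative entropy term penalizes any hidden neuron whose average activation $\hat{\rho}_j$ drifts away from the sparsity parameter $\rho$. The sketch below shows how such a penalty is commonly added to a sparse autoencoder's reconstruction cost. The exact cost function of the embodiment is not recoverable from the text above, so the mean squared reconstruction error, the penalty weight `beta`, the sigmoid decoder, the default values `rho=0.05` and `beta=3.0`, and the parameter names `W1`, `b1`, `W2`, `b2` are all assumptions; weight decay is omitted.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def kl_bernoulli(rho, rho_hat):
    """KL(rho || rho_hat) between Bernoulli(rho) and Bernoulli(rho_hat), elementwise."""
    return rho * np.log(rho / rho_hat) + (1.0 - rho) * np.log((1.0 - rho) / (1.0 - rho_hat))

def sparse_autoencoder_cost(X, W1, b1, W2, b2, rho=0.05, beta=3.0):
    """Assumed cost: mean squared reconstruction error + beta * sum_{j=1}^{n} KL(rho || rho_hat_j).

    X: (m, d) vectorized audio samples, assumed scaled to [0, 1] for the sigmoid decoder.
    Returns (total_cost, sparsity_penalty).
    """
    m = X.shape[0]
    A = sigmoid(X @ W1 + b1)           # hidden activations, shape (m, n)
    X_rec = sigmoid(A @ W2 + b2)       # reconstruction of X, shape (m, d)
    reconstruction = 0.5 * np.sum((X_rec - X) ** 2) / m
    rho_hat = A.mean(axis=0)           # average activation of each hidden neuron j
    penalty = float(np.sum(kl_bernoulli(rho, rho_hat)))
    return reconstruction + beta * penalty, penalty

rng = np.random.default_rng(0)
X = rng.random((8, 5))                                       # m = 8 samples, d = 5
W1, b1 = rng.normal(scale=0.1, size=(5, 3)), np.zeros(3)     # n = 3 hidden neurons
W2, b2 = rng.normal(scale=0.1, size=(3, 5)), np.zeros(5)
cost, penalty = sparse_autoencoder_cost(X, W1, b1, W2, b2)
print(cost, penalty)
```

The penalty is zero only when every $\hat{\rho}_j$ equals $\rho$, so for a small $\rho$ minimizing it drives most hidden neurons toward low average activation. Under this assumed setup, the trained hidden activations would then serve as the sparse vector representation of each audio sample.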
