GPS-based binocular fusion positioning method and device

A binocular fusion positioning technology, applied in the field of computer vision, that addresses the problems of growing positioning error, loss of GPS signal, and low update frequency, and achieves improved positioning accuracy.

Embodiment Construction

[0038] All features disclosed in this specification, and all steps of any method or process disclosed, may be combined in any manner, except for mutually exclusive features and/or steps.

[0039] Any feature disclosed in this specification may, unless specifically stated otherwise, be replaced by an alternative feature that is equivalent or serves a similar purpose. That is, unless expressly stated otherwise, each feature is only one example of a series of equivalent or similar features.

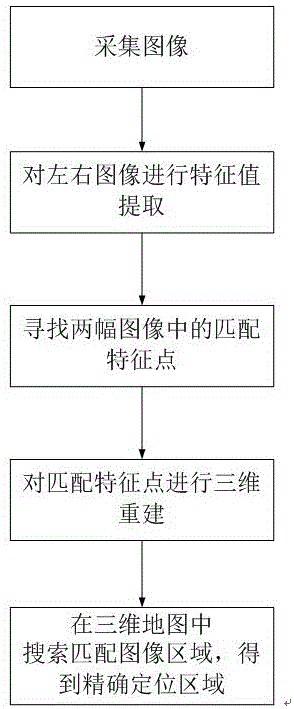

[0040] As shown in Figure 1, the method of the invention comprises the following three steps:

[0041] 1) Obtain a three-dimensional map of the space in which the object to be located resides, such as a room or a building. The three-dimensional map contains at least image features of the space environment, such as brightness feature values, texture feature values, geometric feature values, and other image-related feature values. The three-dimensional map should also contain the earth coordinates of each point in the space....
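The map described in step 1 can be pictured as a collection of points, each carrying image feature values and an earth coordinate. The following is only a minimal sketch under stated assumptions, not the patent's implementation: the names `MapPoint`, `Map3D`, and `nearest_by_features` are hypothetical, the earth coordinate is assumed to be a (latitude, longitude, altitude) triple, each feature is assumed to reduce to a single number, and the nearest-feature lookup is an illustrative stand-in for however the method actually matches observations against the map.

```python
from dataclasses import dataclass, field
from typing import List, Tuple
import math


@dataclass(frozen=True)
class MapPoint:
    """One point of the 3D map (hypothetical layout, assumed for illustration)."""
    # Assumed form of the earth coordinate: (latitude deg, longitude deg, altitude m).
    earth_coord: Tuple[float, float, float]
    # Image-related feature values named in the text, each assumed to be a scalar.
    brightness: float
    texture: float
    geometric: float

    def feature_vector(self) -> Tuple[float, float, float]:
        return (self.brightness, self.texture, self.geometric)


@dataclass
class Map3D:
    """A 3D map as a flat list of points (no spatial index; sketch only)."""
    points: List[MapPoint] = field(default_factory=list)

    def add(self, point: MapPoint) -> None:
        self.points.append(point)

    def nearest_by_features(self, query: Tuple[float, float, float]) -> MapPoint:
        # Assumed matching rule: smallest Euclidean distance in feature space.
        if not self.points:
            raise ValueError("map is empty")
        return min(self.points, key=lambda p: math.dist(p.feature_vector(), query))


# Usage with made-up values: look up the earth coordinate of the best feature match.
room_map = Map3D()
room_map.add(MapPoint((30.0, 104.0, 500.0), brightness=0.2, texture=0.5, geometric=0.1))
room_map.add(MapPoint((30.0001, 104.0002, 500.0), brightness=0.9, texture=0.4, geometric=0.7))
match = room_map.nearest_by_features((0.85, 0.45, 0.65))
print(match.earth_coord)
```

Storing the earth coordinate alongside the features means that a successful feature match yields a global position directly, which is the property the text asks of the map. A real system would likely use richer descriptors and a spatial index rather than a linear scan.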
