A joint calibration method of a 3D lidar and a monocular camera

Applied in the field of information fusion, this technology combines a monocular camera with a three-dimensional laser radar and addresses problems such as large errors, difficulty in accurately extracting spatially matching feature points, and complex schemes.


Embodiment Construction

[0050] The present invention will be described in detail below in conjunction with the accompanying drawings and embodiments.

[0051] The main steps of the joint calibration method for a three-dimensional laser radar and a monocular camera are as follows:

[0052] Step 1: Establish the mathematical model of the monocular camera coordinate system and calibrate the camera's intrinsic and extrinsic parameters;
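For orientation, a textbook form of the pinhole camera model is written out below. The method's own equations are not reproduced in this excerpt, so this is an assumed standard formulation: world coordinates $(X_w, Y_w, Z_w)$ are mapped into camera coordinates $(X_c, Y_c, Z_c)$ by the rotation $R$ and translation $T$, then into pixel coordinates $(u, v)$ by the intrinsic matrix $M$. Skew is taken as zero, and $d_x$, $d_y$ (the physical size of one pixel along each image axis) are symbols introduced here for illustration.

```latex
\begin{aligned}
\begin{bmatrix} X_c \\ Y_c \\ Z_c \end{bmatrix}
  &= R \begin{bmatrix} X_w \\ Y_w \\ Z_w \end{bmatrix} + T, \\
Z_c \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
  &= M \begin{bmatrix} X_c \\ Y_c \\ Z_c \end{bmatrix},
\qquad
M = \begin{bmatrix} f_x & 0 & u_0 \\ 0 & f_y & v_0 \\ 0 & 0 & 1 \end{bmatrix},
\qquad
f_x = \frac{f}{d_x},\; f_y = \frac{f}{d_y}.
\end{aligned}
```

Expanding the second line gives $u = f_x X_c / Z_c + u_0$ and $v = f_y Y_c / Z_c + v_0$, which is why $f_x$ and $f_y$ act as the scale factors between metric image-plane coordinates and pixels.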

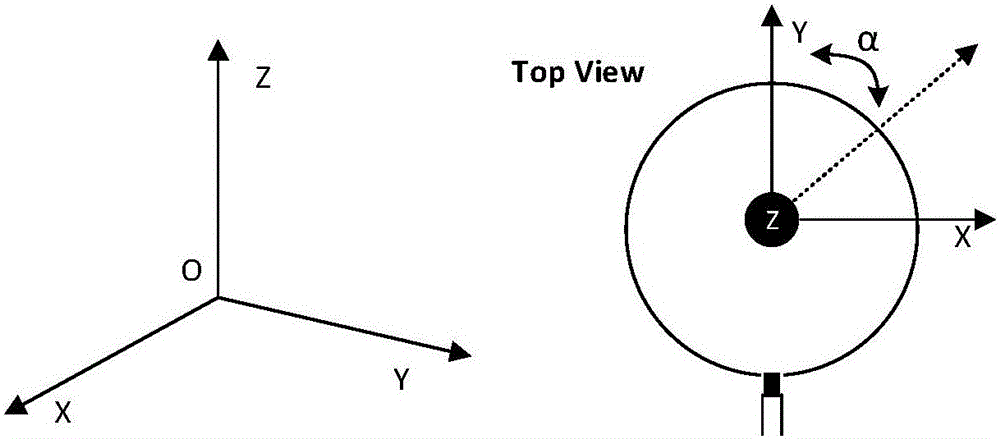

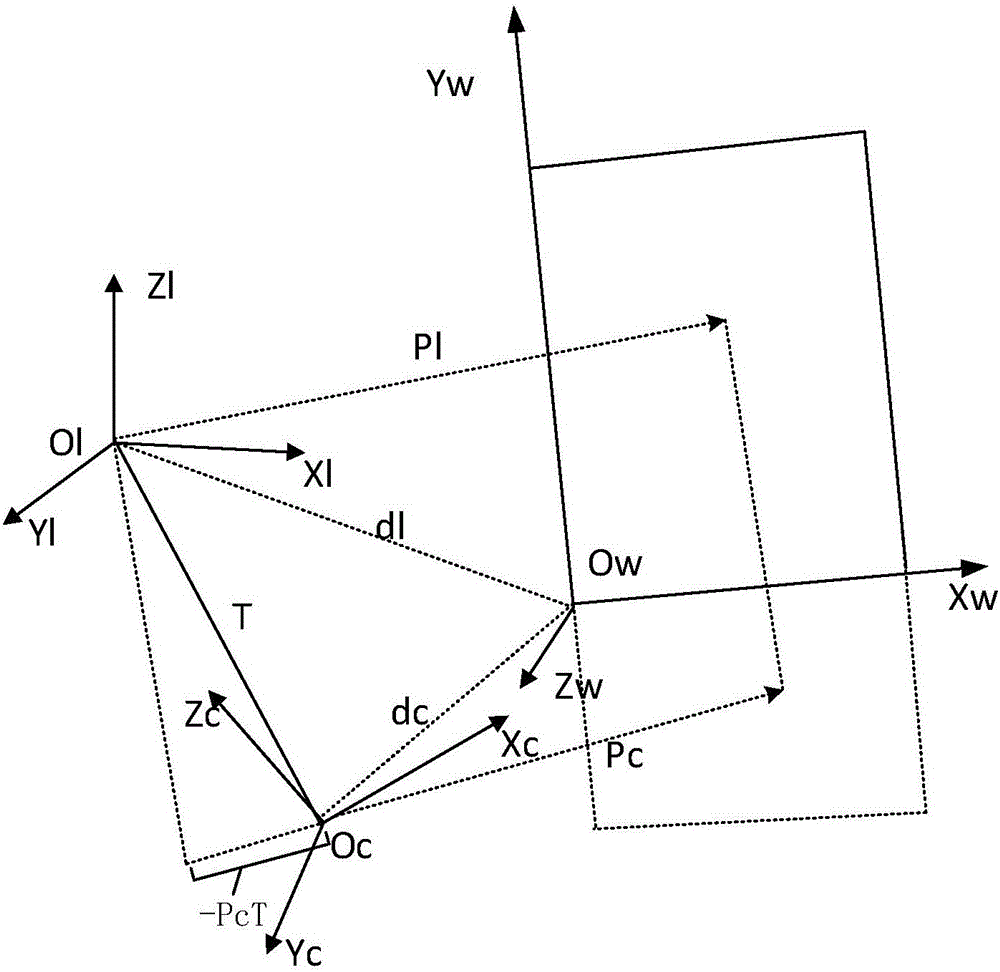

[0053] The mathematical model of the monocular camera coordinate system adopted in this method is the pinhole approximation model, as shown in Figure 1. First, Zhang Zhengyou's monocular camera calibration method is used to calibrate the camera. This yields the intrinsic parameter matrix M, which comprises the effective focal length f, the image principal point coordinates (u0, v0), and the scale factors fx and fy, together with the extrinsic parameters for each position, such as the orthogonal rotation matrix R and the translation vector T. Let the camera coordin...
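Zhang Zhengyou's method begins by estimating, for each view of a planar calibration target, the homography H between the target plane and the image. With the target lying in the plane Z_w = 0, H is proportional to M [r1 r2 T], where r1 and r2 are the first two columns of R. The sketch below illustrates only that first step, using the direct linear transform (DLT) with exactly four correspondences and h33 fixed to 1. It is not the full calibration (which goes on to recover M from several homographies and then refines all parameters), and it is not necessarily how this patent implements it; the function names and the values chosen for M, R and T are hypothetical.

```python
"""Sketch: estimate a plane-to-image homography by the direct linear
transform (DLT), the per-view first step of Zhang's calibration method.

Assumptions (not from the source): four exact correspondences, h33
normalised to 1, zero skew, and hypothetical values for M, R and T.
"""
import math


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def estimate_homography(plane_pts, image_pts):
    """Return a 3x3 H (h33 = 1) mapping target-plane (X, Y) to pixel (u, v)."""
    a, b = [], []
    for (x, y), (u, v) in zip(plane_pts, image_pts):
        # u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), likewise for v
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def apply_homography(h, x, y):
    """Map a plane point through H, dividing by the homogeneous coordinate."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


def matmul(p, q):
    """Plain nested-list matrix product."""
    return [[sum(p[i][k] * q[k][j] for k in range(len(q)))
             for j in range(len(q[0]))] for i in range(len(p))]


if __name__ == "__main__":
    # Hypothetical intrinsics M and one target pose (R, T); Z_w = 0 on the plane.
    M = [[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]]
    t = math.radians(10)
    R = [[math.cos(t), 0.0, math.sin(t)], [0.0, 1.0, 0.0],
         [-math.sin(t), 0.0, math.cos(t)]]
    T = [0.1, -0.05, 2.0]
    # For a planar target, H is proportional to M [r1 r2 T].
    H_true = matmul(M, [[R[i][0], R[i][1], T[i]] for i in range(3)])
    corners = [(0.0, 0.0), (0.3, 0.0), (0.3, 0.2), (0.0, 0.2)]
    pixels = [apply_homography(H_true, x, y) for x, y in corners]
    H = estimate_homography(corners, pixels)
    # The estimated and true homographies should map any plane point alike.
    print(apply_homography(H, 0.15, 0.1), apply_homography(H_true, 0.15, 0.1))
```

Because H is only defined up to scale, the estimate differs from M [r1 r2 T] by a constant factor but maps points identically. In practice many more target corners per view are used, with a least-squares (SVD) solution and nonlinear refinement, which makes the estimate robust to corner-detection noise.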
