Multi-view gait identification method based on adaptive three-dimensional human motion statistical model

The method applies a statistical model of human motion in the field of computer vision and pattern recognition. It addresses the problems of incomplete multi-view training sets and low recognition accuracy, and it overcomes the inability to deal with occlusion.

Embodiment Construction

[0021] The present invention will be further described below in conjunction with the accompanying drawings and embodiments.

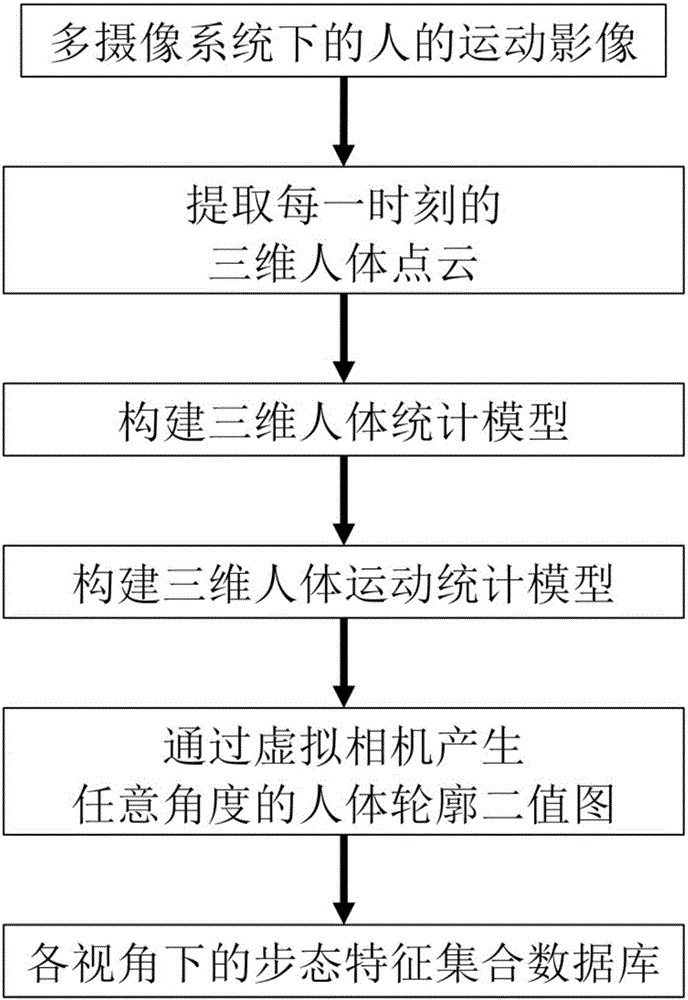

[0022] Figure 1 shows the algorithm flow chart of the present invention in the training stage.

[0023] Training uses video of people walking, captured simultaneously by a multi-camera system. The person is extracted from each frame, feature points are extracted only within the person region, and these points are matched across views to generate a set of matching points. Each group of matching points consists of the image points of a single object point in multiple images. The three-dimensional coordinates of that object point are then solved from the collinearity equations under the least-squares error criterion. In this way, the coordinates of all object-space points in the matching point set can be calculated, and a point cloud of the human body can be generated for each frame. Assuming that all cameras shoot at the same speed, and a complete gait c...
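The least-squares solution of the collinearity equations described in [0023] can be illustrated with a minimal sketch. It is not the patent's own implementation. It assumes each camera is described by a known 3x4 projection matrix, that every matched point is visible in all cameras, and it uses the common linearized (algebraic) form of the collinearity equations. The function names `triangulate_point` and `triangulate_frame` are hypothetical.

```python
import numpy as np


def triangulate_point(projections, image_points):
    """Estimate one 3D object point from its image points in several views.

    projections  : list of 3x4 camera projection matrices (assumed known)
    image_points : list of (u, v) image coordinates, one per camera
    """
    rows, rhs = [], []
    for P, (u, v) in zip(projections, image_points):
        P = np.asarray(P, dtype=float)
        # Collinearity: u = (P[0] . Xh) / (P[2] . Xh), v = (P[1] . Xh) / (P[2] . Xh)
        # with Xh = (X, Y, Z, 1). Multiplying out gives two equations linear in X.
        rows.append(u * P[2, :3] - P[0, :3])
        rhs.append(P[0, 3] - u * P[2, 3])
        rows.append(v * P[2, :3] - P[1, :3])
        rhs.append(P[1, 3] - v * P[2, 3])
    A = np.vstack(rows)
    b = np.array(rhs)
    # Least-squares solution of the overdetermined system A @ X = b.
    X, *_ = np.linalg.lstsq(A, b, rcond=None)
    return X


def triangulate_frame(projections, matches):
    """Build the point cloud of one frame from all groups of matching points.

    matches : list of groups; each group holds one (u, v) per camera.
    """
    return np.array([triangulate_point(projections, group) for group in matches])


if __name__ == "__main__":
    # Two illustrative cameras: one at the origin, one shifted along x.
    P1 = np.hstack([np.eye(3), np.zeros((3, 1))])
    P2 = np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])
    # One group of matching points (same object point seen by both cameras).
    matches = [[(0.05, -0.025), (-0.2, -0.025)]]
    print(triangulate_frame([P1, P2], matches))
```

With more than two cameras the system simply gains two rows per extra view, which is what makes the least-squares formulation convenient for a multi-camera rig. A solver that minimizes reprojection error directly would need an iterative refinement step, which this sketch omits.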
