Multi-angle indoor human action recognition method based on 3D skeleton

A multi-view recognition method, applied in character and pattern recognition, instruments, computing components, and related fields. It addresses the problem that the rotation of skeleton joint points is not analyzed, and achieves the effect of overcoming limitations.

Embodiment Construction

[0018] To make the object, technical solution, and advantages of the present invention clearer, the invention is described in further detail below with reference to the examples. The specific embodiments described here serve only to explain the present invention, not to limit it.

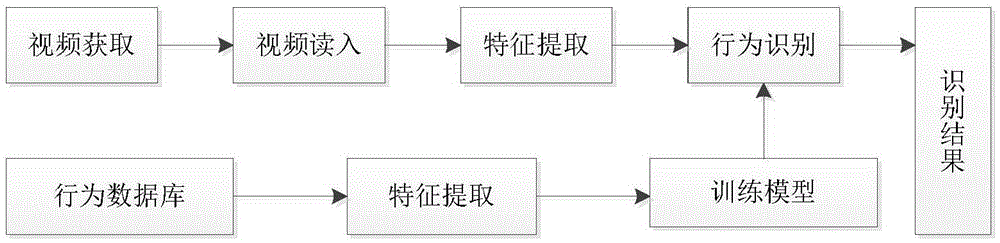

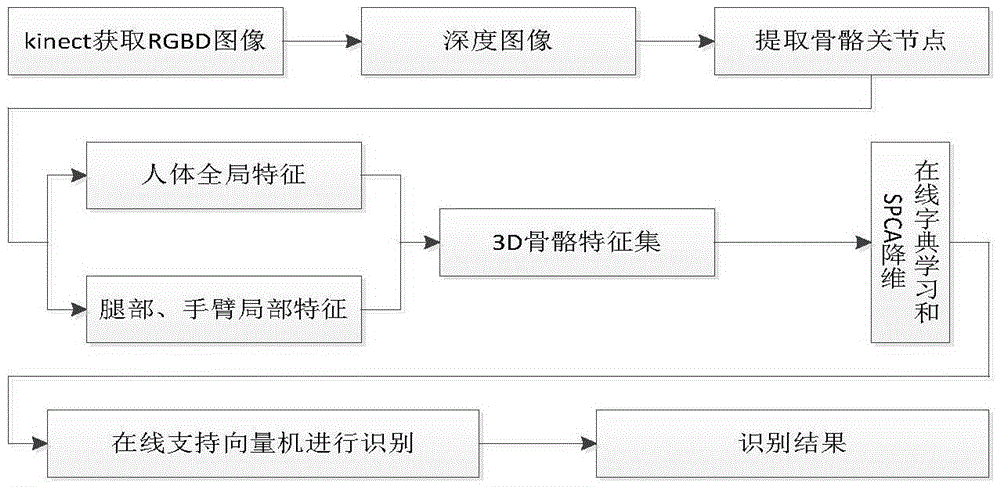

[0019] As shown in Figure 1 and Figure 2, the multi-view indoor human behavior recognition method based on a 3D skeleton includes the following steps:

[0020] 1) Acquire videos of human body movement at three angles: facing the camera (-10° to 10°), right-facing relative to the camera (20° to 70°), and left-facing relative to the camera (-20° to -70°). The videos include training videos and test videos. In this embodiment, a Kinect somatosensory device is used to collect the video data: RGB and depth data are collected, a video file in ONI format is generated, and the three-dimensional coordinate data and confidence of the skeleton joint points are calculated at the same time, and ...
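The three angle ranges in step 1 can be illustrated with a minimal Python sketch. It is not the patented implementation: the names `Joint`, `VIEW_RANGES`, `view_label`, and `reliable_joints` are hypothetical, and the confidence threshold of 0.5 is an assumption, since the excerpt does not state how the joint confidence is used. Only the angle ranges themselves come from the text.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Angle ranges in degrees, taken from step 1 of the description.
# Positive angles are right-facing, negative angles are left-facing.
VIEW_RANGES = {
    "front": (-10.0, 10.0),
    "right": (20.0, 70.0),
    "left": (-70.0, -20.0),
}


@dataclass
class Joint:
    """One skeleton joint: 3D coordinates plus a tracking confidence."""
    x: float
    y: float
    z: float
    confidence: float


def view_label(angle_deg: float) -> Optional[str]:
    """Return the view whose range contains the angle, or None if the
    angle falls in a gap between the three ranges."""
    for name, (low, high) in VIEW_RANGES.items():
        if low <= angle_deg <= high:
            return name
    return None


def reliable_joints(joints: Dict[str, Joint],
                    min_confidence: float = 0.5) -> Dict[str, Joint]:
    """Keep joints at or above a confidence threshold.
    The threshold is an assumption, not a value from the source."""
    return {name: joint for name, joint in joints.items()
            if joint.confidence >= min_confidence}
```

Note that the three ranges do not cover every angle: an angle such as 15° belongs to none of them, so `view_label` returns `None` for it rather than guessing a view.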
