Method for automatic target detection and tracking by an unmanned aerial vehicle (UAV)

An automatic target detection and tracking technique, applied in fields such as computer components, character and pattern recognition, and image data processing. It addresses problems such as targets being easily lost during tracking and fixed surveillance cameras being unable to provide coverage in all directions.


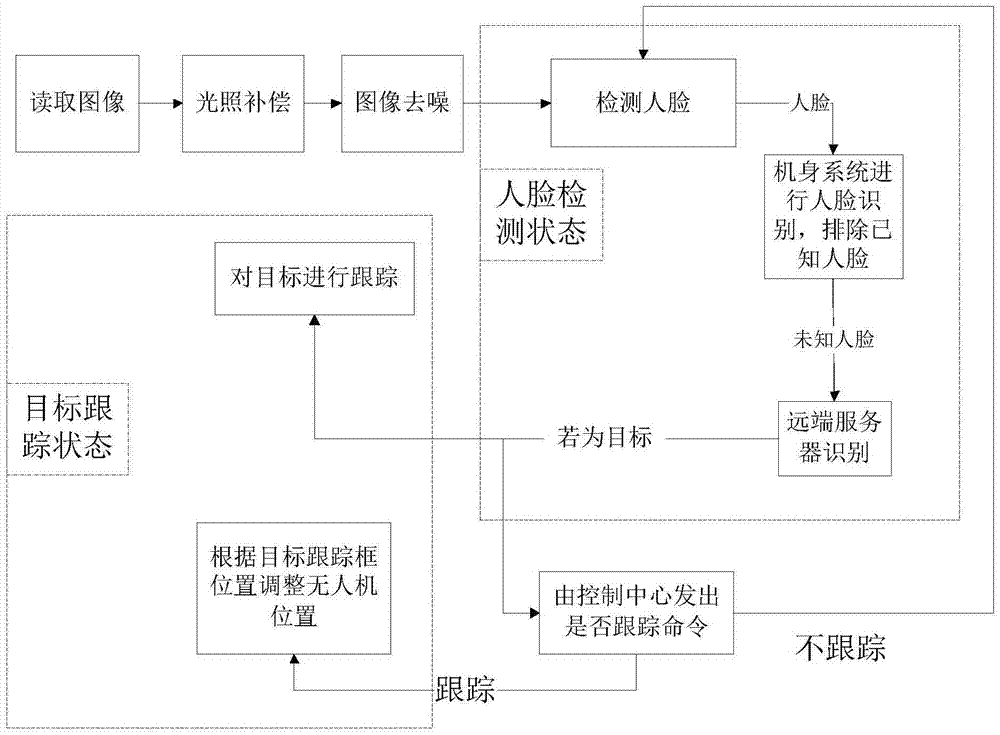

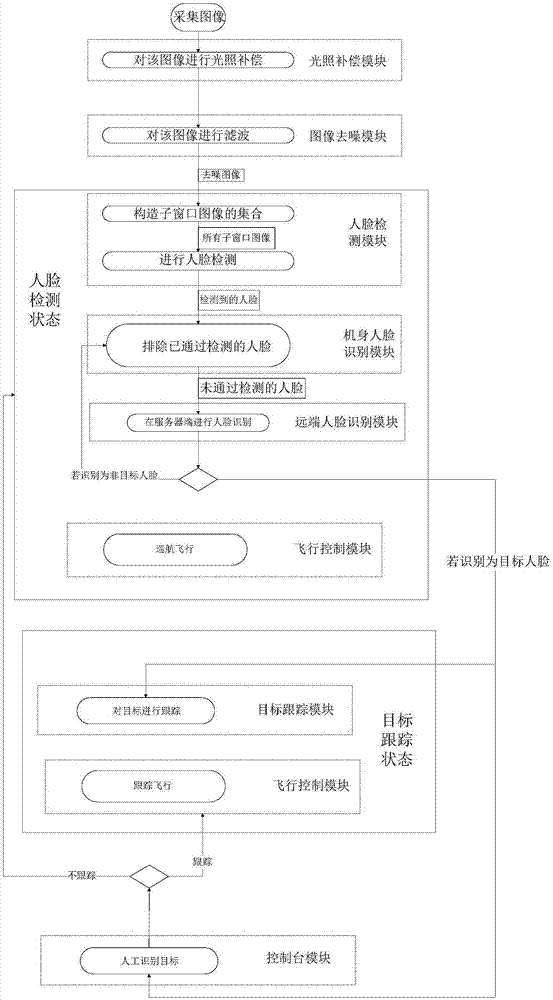


Examples

Embodiment 1

[0093] This embodiment covers the complete parameter initialization process of the UAV automatic target detection and tracking method.

[0094] 1. During initialization of the illumination compensation module, the input is a data set containing face and non-face images. The processing is as follows. For an extracted color image X, let its red, green and blue components be R, G and B. First, convert the original color image into a grayscale image. Without loss of generality, take the pixel at position (i, j) of the original color image X and denote its R, G and B components by R′(i,j), G′(i,j) and B′(i,j). The gray value of the corresponding pixel in the grayscale image X′ is then X′(i,j)=0.3×B′(i,j)+0.59×G′(i,j)+0.11×R′(i,j), where X′(i,j) is an integer: if the result has a fractional part, only the integer part is kept. This yields the grayscale image X′ of the original image X. Then, perform illumination compensation on the grayscale image, and send the result obtained after ...
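The grayscale conversion described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the function name `to_gray`, the nested-list image layout, and the integer percentage weights are all hypothetical choices made for the example. The default weights follow the formula as written in this text (0.3 on blue, 0.11 on red). The more common luminance convention puts the larger weight on red, which can be selected by passing different weights.

```python
def to_gray(image, weights_pct=(11, 59, 30)):
    """Convert an RGB image to grayscale, keeping only the integer part.

    image: list of rows, each row a list of (R, G, B) tuples (values 0..255).
    weights_pct: hypothetical parameter holding integer percentage weights in
        (R, G, B) order. The default mirrors the formula as stated in the
        text: 0.11*R + 0.59*G + 0.3*B.
    """
    w_r, w_g, w_b = weights_pct
    gray = []
    for row in image:
        gray_row = []
        for r, g, b in row:
            # Integer arithmetic avoids floating-point rounding errors;
            # floor division keeps only the integer part of the result.
            gray_row.append((w_r * r + w_g * g + w_b * b) // 100)
        gray.append(gray_row)
    return gray


if __name__ == "__main__":
    sample = [[(100, 50, 200), (255, 255, 255)],
              [(10, 20, 30), (0, 0, 0)]]
    print(to_gray(sample))
```

Working in integer percentages rather than floating-point weights is a design choice for the sketch: it guarantees that a pure white pixel maps to exactly 255 instead of being truncated to 254 by rounding error.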

Embodiment 2

[0140] This embodiment covers the complete detection process of the UAV automatic target detection and tracking method.

[0141] 1. Illumination compensation module. Its input is each frame of video captured by the drone. Because consecutive frames of the drone's video differ very little, and because the processing speed of the drone's onboard processor is limited, it is not necessary to process every frame; an appropriate frame interval for sampling can be chosen according to the processor's performance. The processing is the same as in Embodiment 1 and is not repeated here. The result of illumination compensation is sent to the image denoising module, which ends the illumination compensation module's processing of the current frame.
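The frame sampling idea in this step can be sketched as follows. This is an illustrative example only: the function name `sample_frames` and the `interval` parameter are assumptions, and the text does not specify how the interval is chosen beyond matching it to the processor's performance.

```python
def sample_frames(frames, interval):
    """Yield every `interval`-th frame, starting with the first one.

    frames: any iterable of frames (for example, decoded video images).
    interval: hypothetical tuning parameter; a larger value means fewer
        frames are passed on to illumination compensation.
    """
    if interval < 1:
        raise ValueError("interval must be at least 1")
    for index, frame in enumerate(frames):
        if index % interval == 0:
            yield frame


if __name__ == "__main__":
    # Stand-in frame labels; a real pipeline would pass image data instead.
    video = ["frame%d" % n for n in range(10)]
    for frame in sample_frames(video, interval=3):
        print(frame)
```

Writing the sampler as a generator means frames are consumed one at a time, so skipped frames never need to be held in memory on a constrained onboard processor.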

[0142] 2. Image denoising module, which passes the denoised image to the face detection module. If the image needs to be deli...
