Object classification method and system based on the bag-of-visual-words model

An object classification technology based on the visual bag-of-words model, applied in the field of object classification. It addresses problems such as heavy consumption of computing resources, failure to consider spatial information, and an unsatisfactory recognition rate, and achieves the effects of lower computing resource usage and fast processing speed.
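
For readers unfamiliar with the term, the following is a minimal sketch of a generic visual bag-of-words representation, not the method claimed in this patent (the visible text does not give its details). It assumes local descriptors have already been extracted as NumPy arrays. The function names `build_vocabulary` and `bovw_histogram`, the use of plain k-means, and all parameter values are illustrative assumptions.

```python
import numpy as np

def build_vocabulary(descriptors, k, iters=10, seed=0):
    """Cluster local descriptors with plain k-means (assumed choice);
    the k cluster centers act as the 'visual words'."""
    rng = np.random.default_rng(seed)
    # Initialize centers from k distinct descriptors picked at random.
    idx = rng.choice(len(descriptors), size=k, replace=False)
    centers = descriptors[idx].astype(float)
    for _ in range(iters):
        # Squared Euclidean distance from every descriptor to every center.
        d2 = ((descriptors[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        for j in range(k):
            members = descriptors[labels == j]
            if len(members):  # leave an empty cluster's center unchanged
                centers[j] = members.mean(axis=0)
    return centers

def bovw_histogram(descriptors, vocabulary):
    """Map each descriptor to its nearest visual word and return the
    normalized word-count histogram for one image."""
    d2 = ((descriptors[:, None, :] - vocabulary[None, :, :]) ** 2).sum(axis=2)
    words = d2.argmin(axis=1)
    hist = np.bincount(words, minlength=len(vocabulary)).astype(float)
    return hist / hist.sum()

# Synthetic stand-in for real image descriptors (hypothetical data).
rng = np.random.default_rng(1)
training_descriptors = rng.normal(size=(200, 8))
vocabulary = build_vocabulary(training_descriptors, k=5)
image_histogram = bovw_histogram(training_descriptors[:50], vocabulary)
```

The resulting histogram would typically be passed to a classifier. Note that this plain histogram discards where in the image each descriptor occurred, which is the spatial-information limitation the summary mentions.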

Embodiment Construction

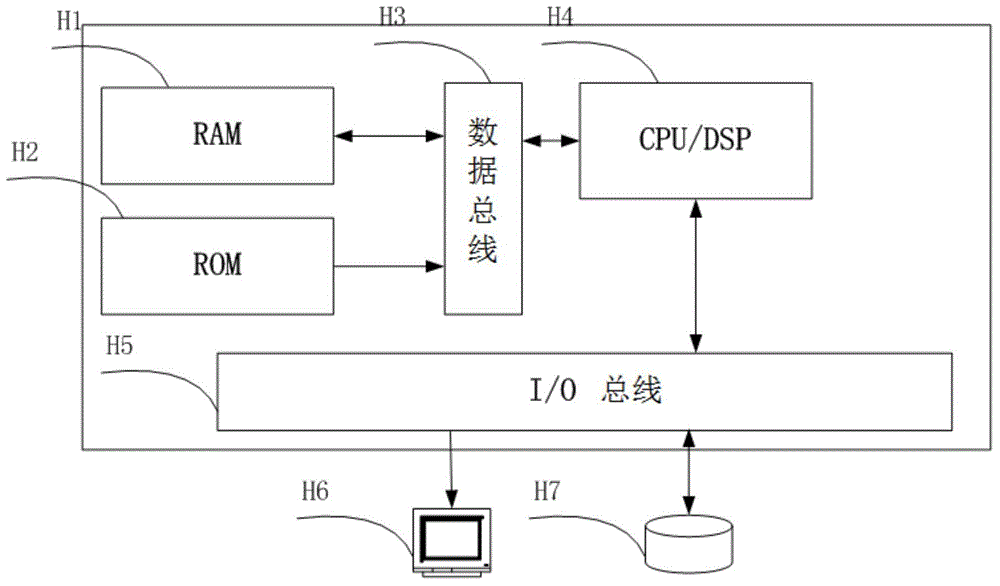

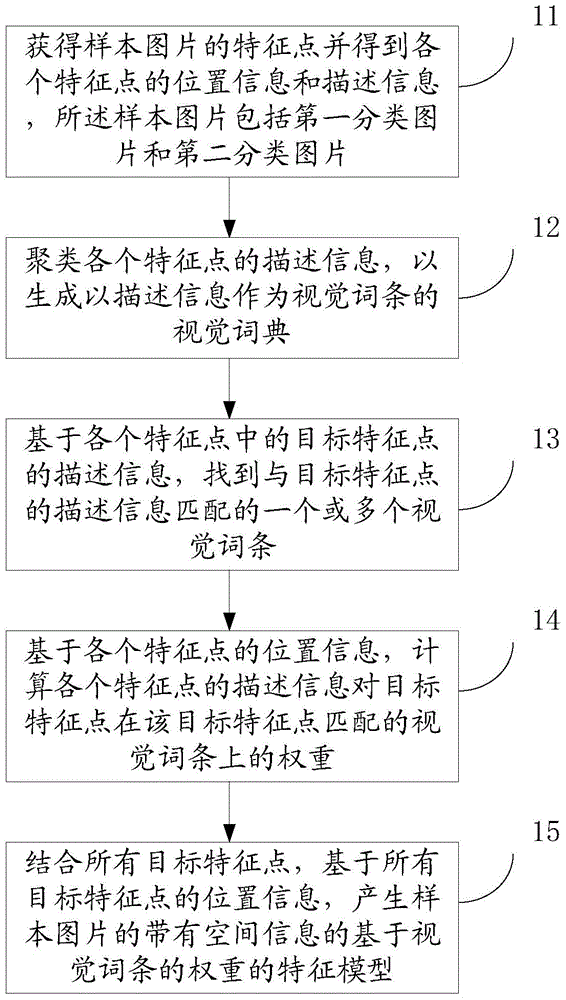

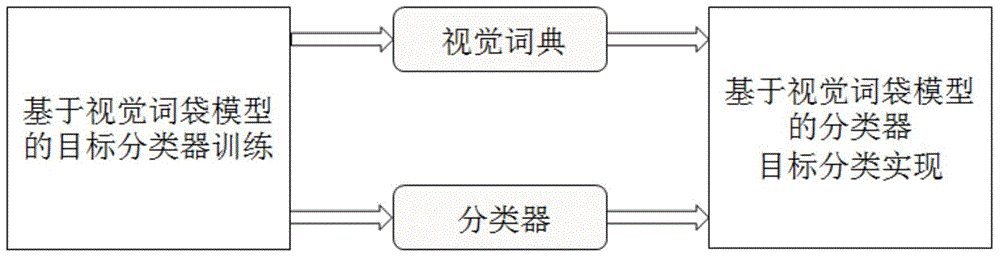

[0026] Reference will now be made in detail to specific embodiments of the invention, examples of which are illustrated in the accompanying drawings. While the invention will be described in conjunction with specific embodiments, it will be understood that it is not intended to limit the invention to the described embodiments. On the contrary, it is intended to cover alterations, modifications and equivalents as included within the spirit and scope of the invention as defined by the appended claims. It should be noted that the method steps described here can all be realized by any functional block or functional arrangement, and any functional block or functional arrangement can be realized as a physical entity or a logical entity, or a combination of both.

[0027] In order to enable those skilled in the art to better understand the present invention, the present invention will be further described in detail below in conjunction with the accompanying drawings and specific embo...
