A Human Behavior Recognition Method Based on the Fusion of Multi-feature Spatial-Temporal Relationships

A human behavior recognition method based on spatio-temporal relationships, applied in the field of computer vision. It addresses the loss of spatio-temporal relationship information, long processing time, and high computational complexity, and improves recognition accuracy.



Embodiment Construction

[0018] The preferred embodiments of the present invention are described in detail below, so that the advantages and features of the present invention can be more easily understood by those skilled in the art, so as to define the protection scope of the present invention more clearly.

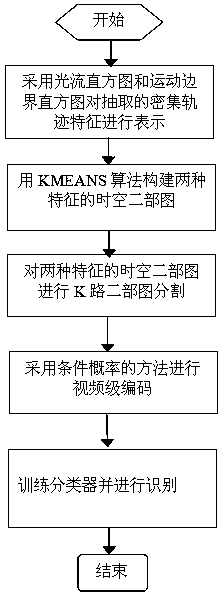

[0019] Embodiments of the present invention include: a human behavior recognition method based on multi-feature spatio-temporal relationship fusion, and the specific steps include:

[0020] Step 1: Extract dense trajectory features from the video. Feature points are first sampled on a dense grid. So that the sampled points are robust to scale changes, sampling is performed simultaneously on multiple grids with different spatial scales. Each sampled point is then tracked by estimating the optical flow field of each frame, and each sampled point is tracked for only L frames within its corresponding spatial scale, and finally...
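The sampling and tracking part of Step 1 can be illustrated with a minimal sketch. It is not the patented implementation: the function names, the grid spacing `step`, the `scale_factor` between spatial scales, and the `flow(t, x, y)` callback standing in for an estimated optical flow field are all assumptions made for illustration, and only the behavior stated above (multi-scale dense grid sampling, tracking each point for at most L frames) is modeled.

```python
from typing import Callable, Dict, List, Tuple

Point = Tuple[float, float]
# Hypothetical interface: flow(t, x, y) returns the displacement (dx, dy)
# of the pixel at (x, y) between frame t and frame t + 1.
FlowFn = Callable[[int, float, float], Tuple[float, float]]


def sample_dense_grid(width: int, height: int, step: int) -> List[Point]:
    """Sample feature points on a regular grid with `step` pixels spacing."""
    return [(float(x), float(y))
            for y in range(step // 2, height, step)
            for x in range(step // 2, width, step)]


def sample_multiscale(width: int, height: int, step: int,
                      num_scales: int, scale_factor: float) -> Dict[int, List[Point]]:
    """Sample a dense grid independently at each spatial scale.

    Scale s uses a frame shrunk by scale_factor ** s (an assumed scheme).
    """
    points_by_scale: Dict[int, List[Point]] = {}
    for s in range(num_scales):
        factor = scale_factor ** s
        w, h = int(width / factor), int(height / factor)
        if w < step or h < step:
            break  # frame too small to hold a grid cell at this scale
        points_by_scale[s] = sample_dense_grid(w, h, step)
    return points_by_scale


def track_point(start: Point, flow: FlowFn, start_frame: int,
                track_len: int, width: int, height: int) -> List[Point]:
    """Follow one point through the flow field for at most track_len frames."""
    trajectory = [start]
    x, y = start
    for t in range(start_frame, start_frame + track_len):
        dx, dy = flow(t, x, y)
        x, y = x + dx, y + dy
        if not (0 <= x < width and 0 <= y < height):
            break  # the point left the frame, so the trajectory ends early
        trajectory.append((x, y))
    return trajectory
```

Here `track_len` plays the role of L: a trajectory holds at most L + 1 positions and stays within the scale at which its starting point was sampled. In practice the flow callback would be backed by a dense optical flow estimate computed per frame.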

