Method and system for forecasting task resource waiting time

A technology for predicting task waiting time, applied in resource allocation and multi-programming devices. It addresses three problems: job waiting times do not obey any single probability distribution, a mixed probability distribution model is difficult to describe, and evaluation and prediction based on a probability model are inaccurate. Effects: optimized configuration of task resource requirements, optimized job scheduling, and a reliable forecast rate.


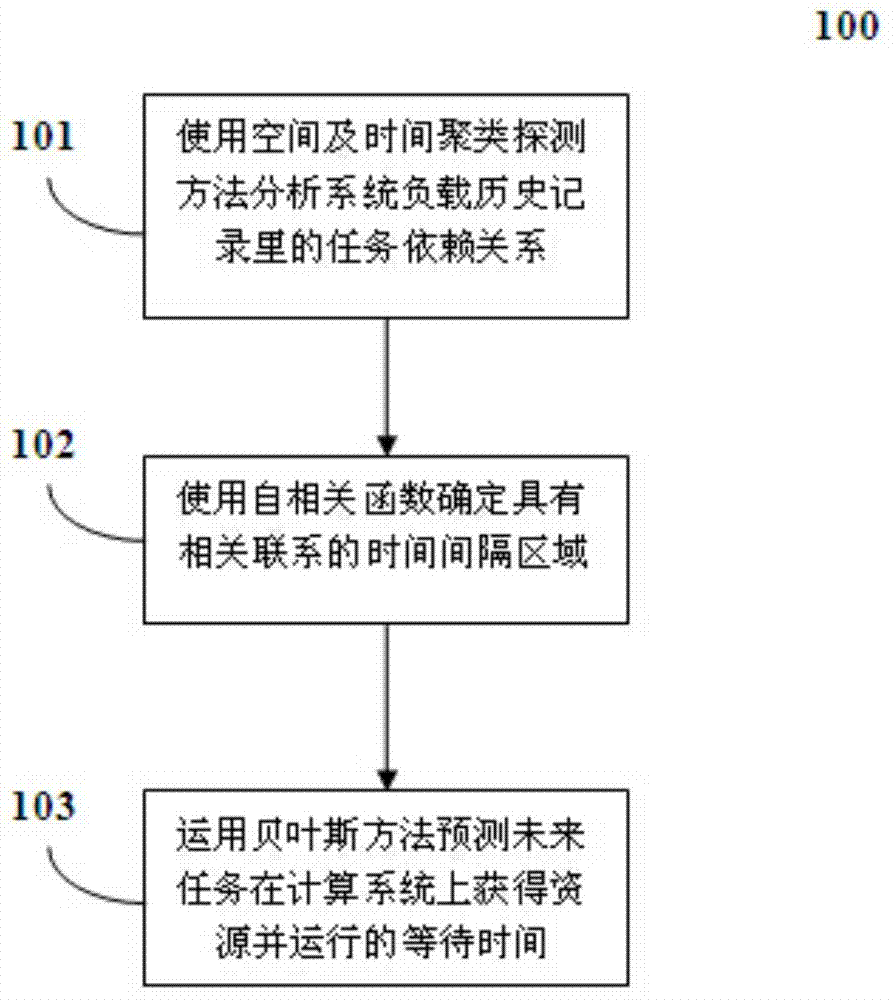


Embodiment Construction

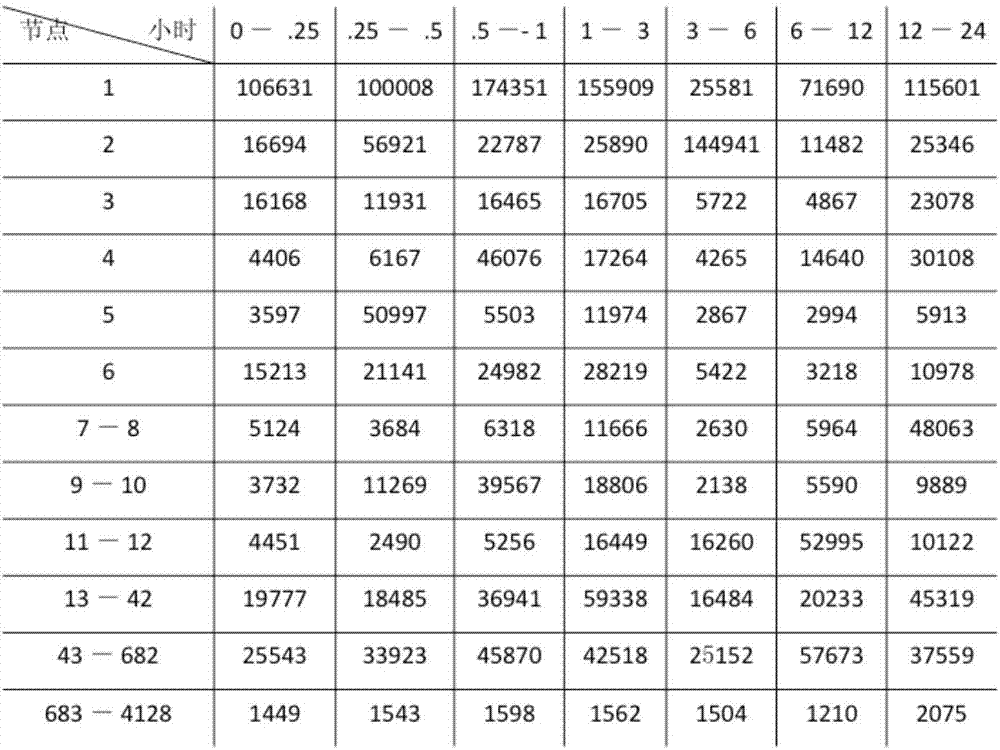

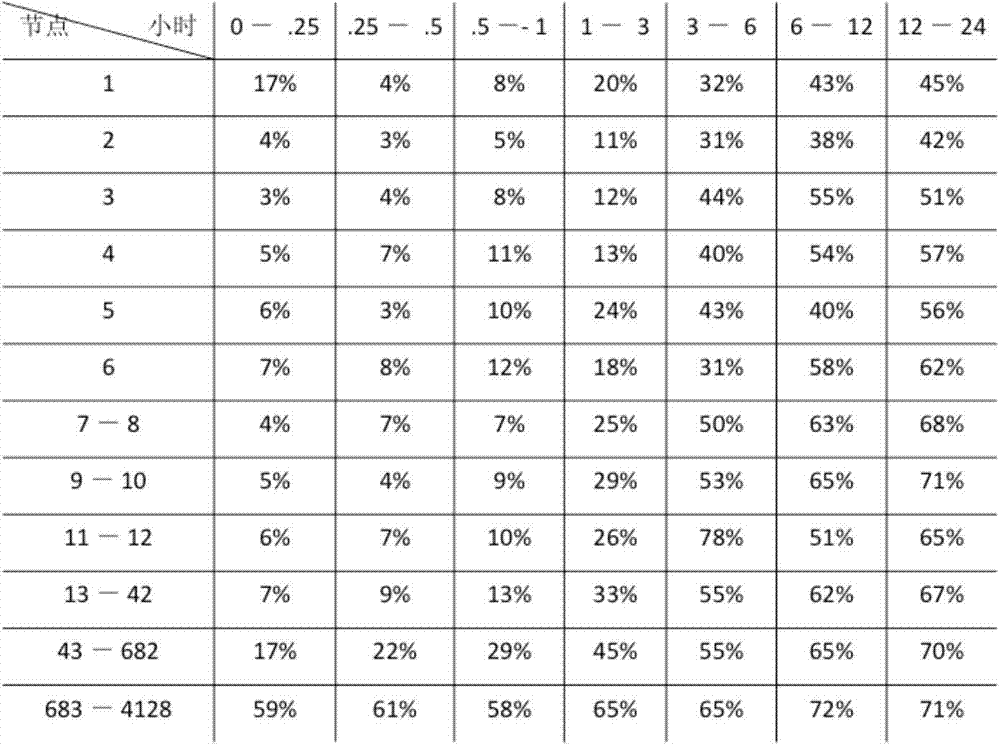

[0047] While studying the historical load of the Kraken supercomputer, the inventors found that for about 52.4% of the jobs (tasks) submitted by users, the waiting time in the job execution queue exceeded the actual running time. Each job, especially a parallel job, expresses its demand for computing resources through two basic parameters: running time and the number of computing nodes (CPU, memory). From the user's point of view, the present invention aims to adjust these two parameters, while still guaranteeing a correct result, so that the job starts sooner and spends less time waiting in the job execution queue. During the statistical analysis, it was observed that a single user often submits multiple similar jobs (for example, the same executable with different input data) within a short period of time. Because the number of jobs the system can execute simultaneously is generally limited, such bursts strongly skew the statistical results. The present inven...
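The two observations in [0047], the share of jobs whose queue wait exceeds their running time and the skew caused by bursts of similar submissions from one user, can be illustrated with a minimal Python sketch. It is not the patented method: the `Job` record, the similarity rule (same user and same executable), and the `window` parameter are hypothetical assumptions, since the excerpt does not state how similar jobs are identified or filtered.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Job:
    user: str
    executable: str     # hypothetical similarity key: same program => "similar"
    submit_time: float  # submission timestamp, seconds
    wait_time: float    # time spent in the job execution queue, seconds
    run_time: float     # actual running time, seconds
    nodes: int          # number of computing nodes requested


def fraction_wait_exceeds_run(jobs: Iterable[Job]) -> float:
    """Share of jobs whose queue waiting time exceeds their running time."""
    jobs = list(jobs)
    if not jobs:
        return 0.0
    return sum(j.wait_time > j.run_time for j in jobs) / len(jobs)


def collapse_bursts(jobs: Iterable[Job], window: float) -> List[Job]:
    """Keep only the first job of each burst of similar submissions.

    Assumed rule: two jobs are similar if they share user and executable,
    and a job joins the current burst if it was submitted within `window`
    seconds of the previous similar job (so bursts can chain).
    """
    last_seen = {}  # (user, executable) -> submit time of previous similar job
    kept = []
    for job in sorted(jobs, key=lambda j: j.submit_time):
        key = (job.user, job.executable)
        prev = last_seen.get(key)
        if prev is None or job.submit_time - prev > window:
            kept.append(job)  # start of a new burst
        last_seen[key] = job.submit_time
    return kept


# Illustrative, made-up jobs (not data from the Kraken logs).
jobs = [
    Job("alice", "sim.x", 0, 600, 300, 64),
    Job("alice", "sim.x", 30, 650, 310, 64),
    Job("alice", "sim.x", 70, 700, 290, 64),
    Job("bob", "fft.x", 100, 10, 1200, 16),
]

print(fraction_wait_exceeds_run(jobs))  # 0.75
deduped = collapse_bursts(jobs, window=120)
print(len(deduped), fraction_wait_exceeds_run(deduped))  # 2 0.5
```

In this toy example, one user's burst of three near-identical submissions pushes the raw share from 0.5 to 0.75, which is the kind of distortion the paragraph describes. A real filter would need a similarity rule and window chosen from the actual workload.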

