Moving target detection and extraction method based on a motion attention fusion model

A technique that builds a motion attention fusion model for moving objects, applied in image data processing, instruments, computing, and similar fields, to reduce interference and suppress noise.

Embodiment Construction

[0047] The present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

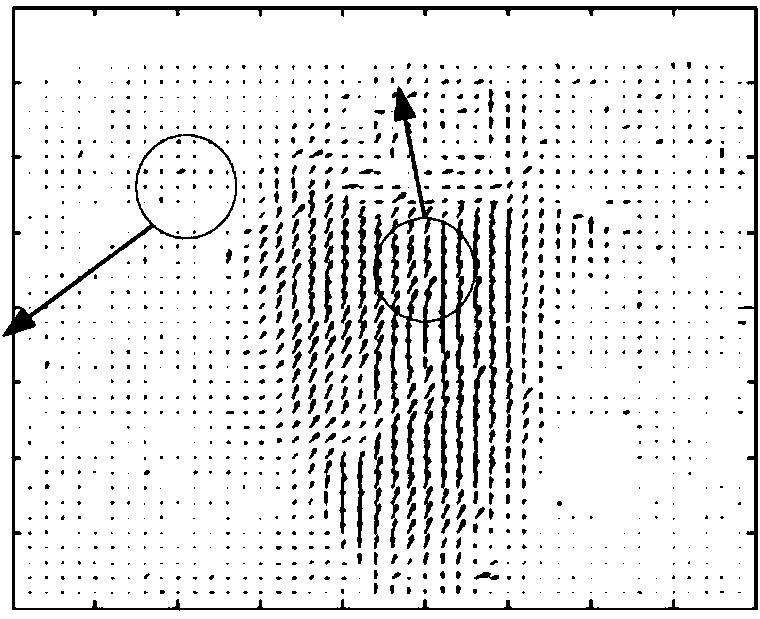

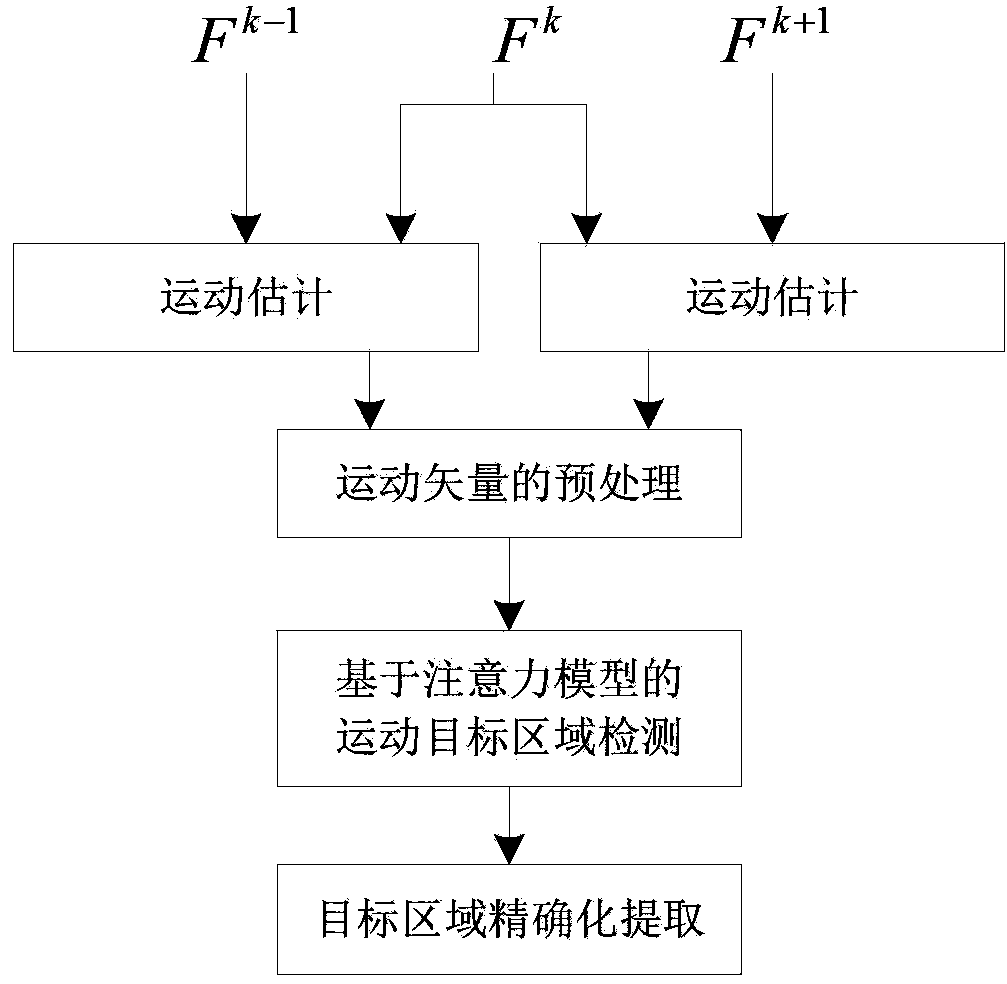

[0048] The invention provides a method for detecting and extracting moving targets based on a motion attention fusion model. Exploiting the motion contrast of the target in time and space, the method constructs a motion attention fusion model from the temporal and spatial variation of motion vectors and combines it with noise removal, median filtering, and edge detection to accurately extract moving objects in scenes with global motion.
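As an illustration of the median filtering mentioned above, the following is a minimal sketch that smooths one component of a motion vector field. The 3x3 window, the border replication, and the function name `median_filter_3x3` are assumptions made for this example; the source does not specify the filter size or border handling.

```python
from statistics import median


def median_filter_3x3(field):
    """Apply a 3x3 median filter to a 2D list, replicating border values.

    The window size and border handling are illustrative assumptions.
    """
    rows, cols = len(field), len(field[0])
    out = [[0.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            window = []
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    # Clamp indices so border pixels reuse their nearest neighbors.
                    yy = min(max(y + dy, 0), rows - 1)
                    xx = min(max(x + dx, 0), cols - 1)
                    window.append(field[yy][xx])
            out[y][x] = median(window)
    return out


# Horizontal motion component with a single outlier vector at the center.
vx = [
    [1.0, 1.0, 1.0],
    [1.0, 9.0, 1.0],
    [1.0, 1.0, 1.0],
]
smoothed = median_filter_3x3(vx)
```

In this toy field the isolated outlier is replaced by the value of its neighbors, which is the kind of noise suppression a median filter is typically used for on motion vectors.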

[0049] The method proceeds as follows:

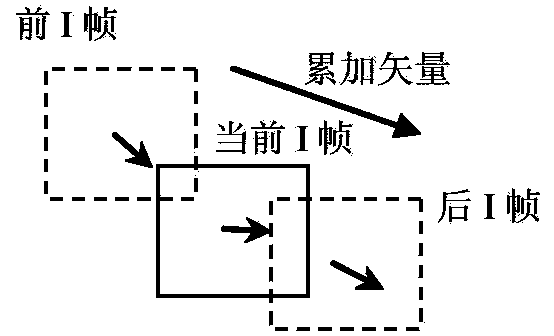

[0050] Step 1. After estimating the motion vector field from the optical flow equation, apply two preprocessing steps, superposition and filtering:

[0051] Let the intensity of the image at pixel $r = (x, y)^{T}$ at time $t$ be denoted $I(r, t)$. Through the optical flow equation $v \cdot \nabla I$ ...
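A minimal sketch of how a single motion vector could be estimated from an optical flow constraint is given here. It assumes the standard brightness-constancy form `Ix*u + Iy*v + It = 0` and solves it by least squares over one window. The constraint form, the least-squares solve, the helper names `estimate_flow` and `make_frame`, and the synthetic quadratic test image are all assumptions for illustration and are not taken from the source.

```python
def estimate_flow(frame0, frame1):
    """Least-squares solve of Ix*u + Iy*v + It = 0 over all interior pixels.

    Assumes the standard brightness-constancy constraint; this is an
    illustrative estimator, not necessarily the one used in the source.
    """
    rows, cols = len(frame0), len(frame0[0])
    # Average the two frames so spatial gradients are centered in time.
    mean = [[0.5 * (frame0[y][x] + frame1[y][x]) for x in range(cols)]
            for y in range(rows)]
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            ix = 0.5 * (mean[y][x + 1] - mean[y][x - 1])  # central difference in x
            iy = 0.5 * (mean[y + 1][x] - mean[y - 1][x])  # central difference in y
            it = frame1[y][x] - frame0[y][x]              # temporal difference
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("gradients are degenerate (aperture problem)")
    # Solve the 2x2 normal equations [sxx sxy; sxy syy] [u v]^T = -[sxt syt]^T.
    u = (-sxt * syy + syt * sxy) / det
    v = (-syt * sxx + sxt * sxy) / det
    return u, v


def make_frame(shift_x, shift_y, size=8):
    """Hypothetical test image: a quadratic bowl translated by (shift_x, shift_y)."""
    return [[(x - shift_x) ** 2 + (y - shift_y) ** 2 for x in range(size)]
            for y in range(size)]


frame0 = make_frame(0.0, 0.0)
frame1 = make_frame(0.5, -0.25)   # second frame moved by (0.5, -0.25) pixels
u, v = estimate_flow(frame0, frame1)
```

For this quadratic test image the time-centered finite differences satisfy the constraint exactly, so the recovered `(u, v)` matches the applied shift up to floating-point error. Real images would need a per-pixel or per-block window and would give only approximate vectors.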
