Distributed decision-making load balancing system for I/O (input/output) servers supporting massive high-concurrency access

A distributed and server technology, applied in the computer field, can solve problems such as undiscovered, unbalanced, and lacking, and achieve the effects of high computational complexity, minimized access conflict probability, and minimized I/O access conflicts


Embodiment Construction

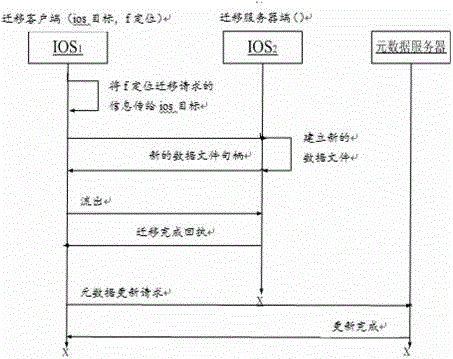

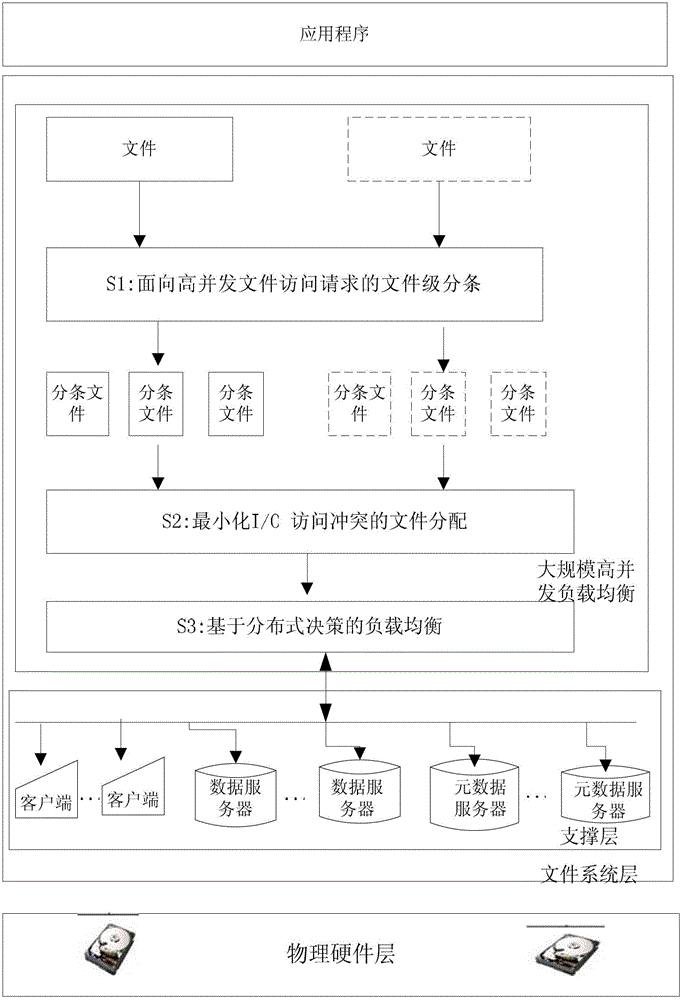

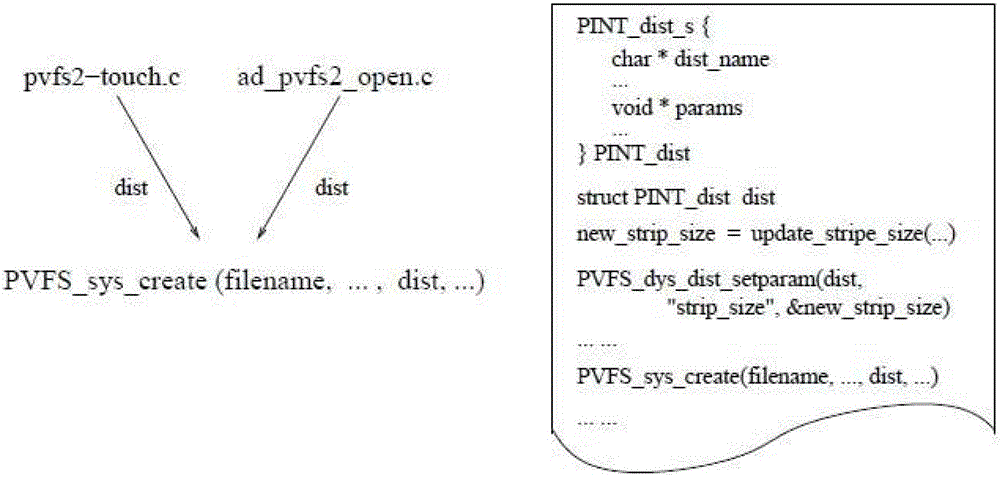

[0039] To express the purpose, technical solutions, and advantages of the present invention more clearly, the invention is described in further detail below with reference to the accompanying drawings (Figures 1 to 5) and specific examples, taking PVFS (a typical parallel file system platform) and data server load balancing as examples. These examples do not limit the present invention. The specific implementation is as follows:

[0040] First, the mathematical symbols used in this example are described in Table 1.

[0041] Table 1. Mathematical symbols involved and their actual meanings

[0042]

[0043] As shown in Figure 1, based on the parallel I/O system architecture (from top to bottom: application layer -> parallel file system layer -> physical hardware layer), the typical parallel file system architecture (client, metadata server, and data server), and the load balancing processi...
