Intelligent fusion method for depth images and red green blue (RGB) images based on area masks

A technology for fusing RGB images and depth images, applied in the fields of machine vision and image fusion. It addresses two problems: image fusion algorithms are difficult to design, and existing methods cannot fuse depth images with RGB images. Its effects are improved accuracy, reduced computation, and faster detection.


Detailed Description of Embodiments

[0034] To make the present invention easier to understand, preferred embodiments are described in detail below with reference to the accompanying drawings.

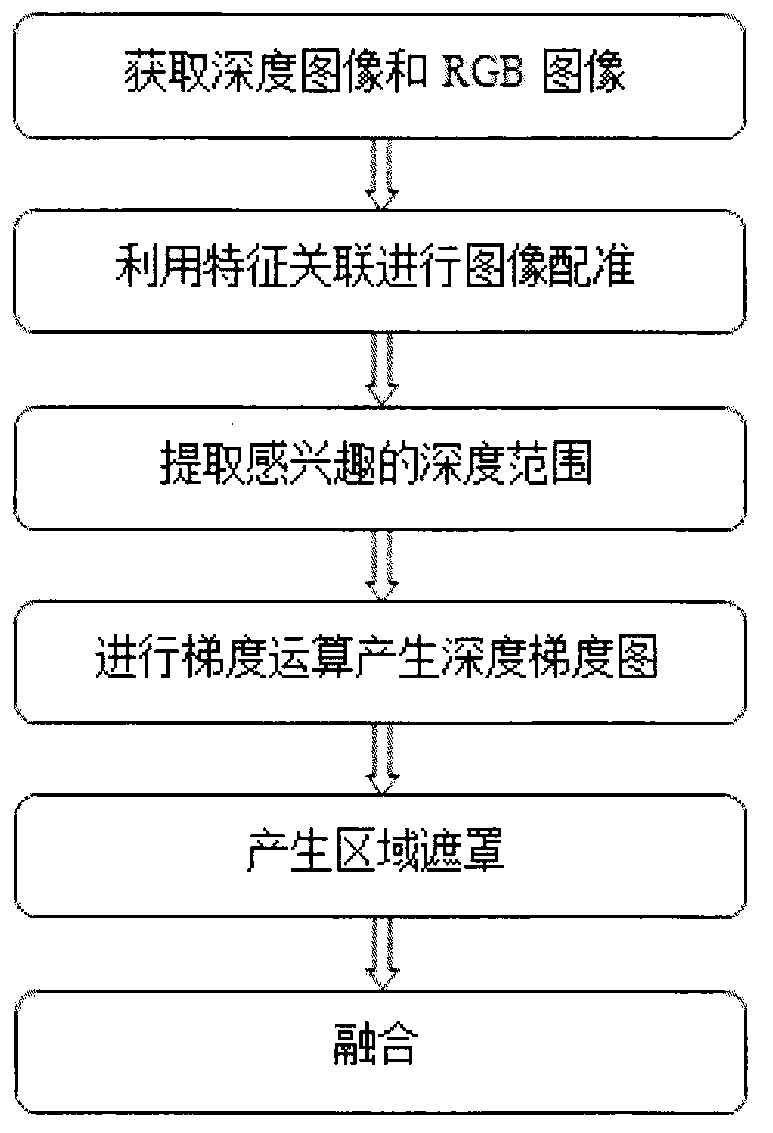

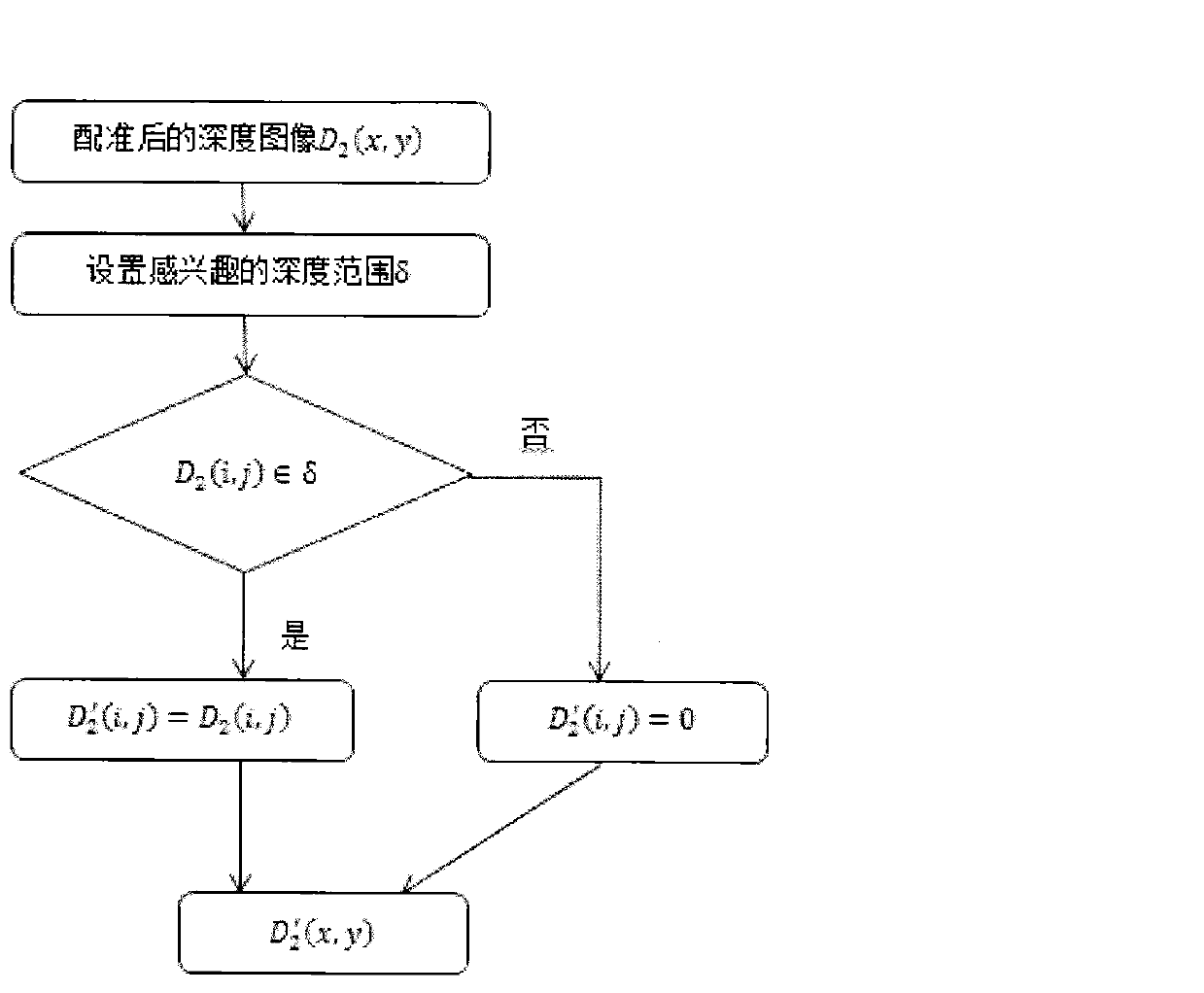

[0035] Referring to FIG. 1, the present invention provides an intelligent method for fusing a depth image and an RGB image based on an area mask, characterized by the following steps:

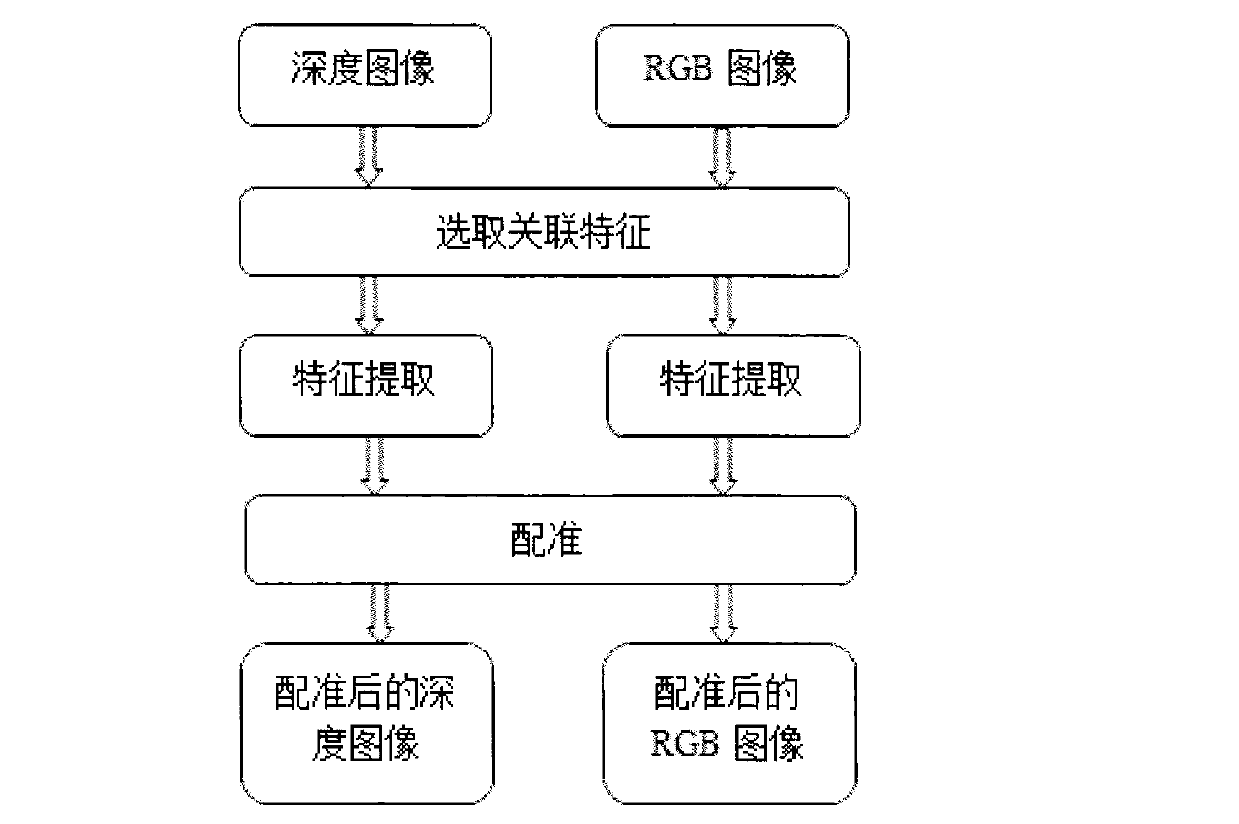

[0036] The first step is to capture a depth image and an RGB image of the same scene at the same time, and to select large-area invariant correlated features common to the depth image and the RGB image.
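The text only states that both images are captured at the same time. If the depth and RGB streams carry separate timestamps, one hypothetical way to form simultaneous pairs is nearest-timestamp matching within a tolerance. The `Frame` type, the `pair_frames` helper, and the 10 ms default tolerance below are illustrative assumptions, not part of the patent.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Frame:
    # Hypothetical frame record: capture time in seconds plus an image payload.
    timestamp: float
    data: object


def pair_frames(depth: List[Frame], rgb: List[Frame],
                tol: float = 0.01) -> List[Tuple[Frame, Frame]]:
    """Pair each depth frame with the RGB frame closest in time (hypothetical helper).

    Both lists are assumed sorted by timestamp. A depth frame is kept only if its
    nearest RGB frame lies within `tol` seconds, i.e. the two were captured
    (approximately) at the same time.
    """
    pairs = []
    j = 0
    for d in depth:
        # Timestamps are sorted, so the nearest RGB index never moves backwards.
        while j + 1 < len(rgb) and (abs(rgb[j + 1].timestamp - d.timestamp)
                                    <= abs(rgb[j].timestamp - d.timestamp)):
            j += 1
        if rgb and abs(rgb[j].timestamp - d.timestamp) <= tol:
            pairs.append((d, rgb[j]))
    return pairs
```

The two-pointer scan is linear in the number of frames. Note that one RGB frame may be matched to more than one depth frame if the tolerance is loose relative to the frame interval.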

[0037] In the term "large-area invariant correlated feature", "large area" refers to a background plane in a specific scene or to a large surface of a fixed object. This large-area feature information is present in both the depth image and the RGB image; the two images differ only in the local-area features that appear in the RGB image. "Invariance" means that the detection or description of the selected large-area features remains unchanged ...
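This excerpt does not say how the large background plane is located. As one hypothetical way to extract such a large-area region from the depth image, the sketch below fits the dominant plane with RANSAC and returns it as a binary area mask. The function name, the inverse-depth parametrization, and all thresholds are assumptions for illustration, not the patented procedure. The parametrization relies on a standard pinhole-camera fact: for points on a 3-D plane, inverse depth 1/z is an affine function of pixel coordinates (u, v).

```python
import numpy as np


def dominant_plane_mask(depth, n_iters=200, tol=1e-3, min_fraction=0.3, seed=0):
    """Boolean mask of the pixels lying on the largest plane in a depth map.

    Hypothetical helper. Assumptions (not taken from the patent): pinhole camera,
    depth stores Z-distance, and 0 marks an invalid pixel. On a 3-D plane,
    1/z = a*u + b*v + c, so the plane is fitted in image space without camera
    intrinsics. `tol` is measured in inverse-depth units.
    """
    h, w = depth.shape
    empty = np.zeros((h, w), dtype=bool)
    v, u = np.mgrid[0:h, 0:w]
    valid = depth > 0
    if valid.sum() < 3:
        return empty

    inv = 1.0 / depth[valid]
    A = np.column_stack([u[valid], v[valid], np.ones(inv.size)]).astype(float)

    rng = np.random.default_rng(seed)
    best = np.zeros(inv.size, dtype=bool)
    for _ in range(n_iters):
        idx = rng.choice(inv.size, size=3, replace=False)
        try:
            coeffs = np.linalg.solve(A[idx], inv[idx])
        except np.linalg.LinAlgError:
            continue  # three collinear pixels do not define a plane
        inliers = np.abs(A @ coeffs - inv) < tol
        if inliers.sum() > best.sum():
            best = inliers

    if best.sum() >= 3:
        # Refine with a least-squares fit over all RANSAC inliers.
        coeffs = np.linalg.lstsq(A[best], inv[best], rcond=None)[0]
        best = np.abs(A @ coeffs - inv) < tol

    mask = empty.copy()
    mask[valid] = best
    # Keep the plane only if it covers a "large area" of the frame (assumed criterion).
    return mask if mask.mean() >= min_fraction else empty
```

The `min_fraction` check is only a stand-in for "large area", because the patent's own criterion is not given in this excerpt. Fitting in inverse depth keeps the model linear, so each RANSAC hypothesis is a 3×3 solve.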
