Human-computer interaction fingertip detection method, device and television

A fingertip detection technology for human-computer interaction. It addresses the problem of inaccurate fingertip position detection, yields a more accurate palm area, broadens the scope of application, and improves detection accuracy.

Examples

Embodiment 1

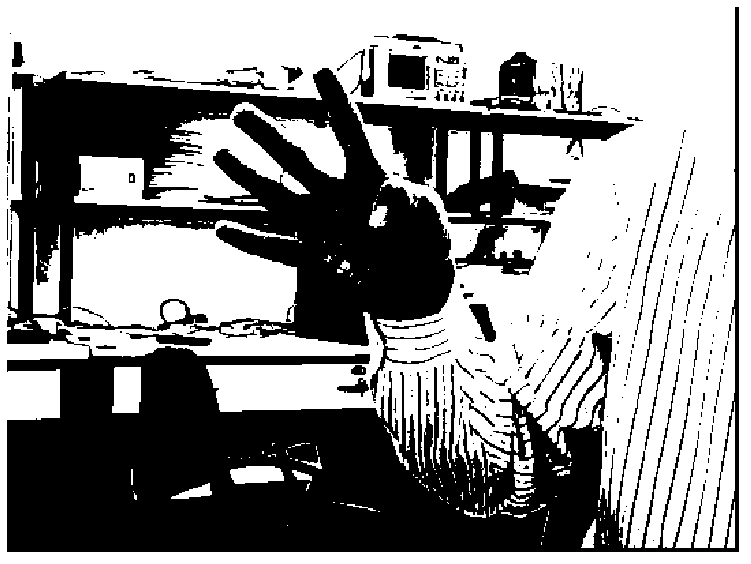

[0046] Figure 1 shows the flow chart of the human-computer interaction fingertip detection method provided by the first embodiment of the present invention. In this embodiment, motion information and skin color information are extracted from the acquired region of interest and fused to obtain a binary image containing the hand region; fingertips are then detected from that binary image. The details are as follows:

[0047] Step S11, acquiring the ROI of the input video image frame, and extracting the binarized motion information and binarized skin color information of the ROI.

[0048] In the embodiment of the present invention, the input video image frame is mainly a color image, and after the region of interest of the video image frame is obtained, the binarized motion information of the region of interest is extracted according to the motion characteristics and skin color chara...
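Step S11 can be illustrated with a minimal sketch. The visible text does not say how the two binarized maps are computed, so the choices below are assumptions made only for illustration: motion is binarized by thresholding the absolute difference between two consecutive grayscale frames, and skin color is binarized by testing the Cb and Cr components (BT.601 full-range conversion) against a commonly quoted skin range. The function names `motion_mask` and `skin_mask`, the threshold of 25, and the Cb/Cr ranges are all hypothetical.

```python
from typing import List, Tuple

Gray = List[List[int]]                    # grayscale ROI, values 0..255
Color = List[List[Tuple[int, int, int]]]  # RGB ROI, values 0..255
Mask = List[List[int]]                    # binary map, values 0 or 1


def motion_mask(prev: Gray, curr: Gray, thresh: int = 25) -> Mask:
    """Binarized motion: 1 where |curr - prev| exceeds thresh (assumed rule)."""
    return [
        [1 if abs(c - p) > thresh else 0 for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def rgb_to_cbcr(r: int, g: int, b: int) -> Tuple[float, float]:
    """Chroma components of an RGB pixel (BT.601, full range)."""
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return cb, cr


def skin_mask(
    roi: Color,
    cb_range: Tuple[int, int] = (77, 127),   # assumed illustrative range
    cr_range: Tuple[int, int] = (133, 173),  # assumed illustrative range
) -> Mask:
    """Binarized skin color: 1 where (Cb, Cr) falls inside both ranges."""
    mask = []
    for row in roi:
        out_row = []
        for r, g, b in row:
            cb, cr = rgb_to_cbcr(r, g, b)
            is_skin = (cb_range[0] <= cb <= cb_range[1]
                       and cr_range[0] <= cr <= cr_range[1])
            out_row.append(1 if is_skin else 0)
        mask.append(out_row)
    return mask
```

Both functions return maps of the same size as the region of interest, which is what a later fusion step needs. In practice the thresholds would have to be tuned to the camera and lighting.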

Embodiment 2

[0056] The second embodiment of the present invention mainly describes step S11 of the first embodiment in more detail, and the rest of the steps are the same as those of the first embodiment, and will not be repeated here.

[0057] Specifically, step S11 is as follows:

[0058] A1. Determine the region of interest of the video image frame.

[0059] In this embodiment, a region of interest is defined for the input video image sequence. For example, suppose the input color video image sequence shows the whole person and, for the purposes of human-computer interaction, commands are executed according to the person's fingertip movement. In this case, the delineated region of interest is usually an area that excludes regions whose skin color information differs from the palm skin color information, as well as regions whose skin color information matches the palm skin color information but which are static. The entire arm area of [...], which excludes the face area a...

Embodiment 3

[0075] The third embodiment of the present invention mainly describes step S12 of the first embodiment in more detail, and the rest of the steps are the same as those of the first or second embodiment, and will not be repeated here.

[0076] Specifically, step S12 is as follows:

[0077] B1. Divide the palm area according to the binarized motion information and the binarized skin color information, and obtain a binary image corresponding to the palm area.

[0078] In this embodiment, the palm area is determined in combination with the acquired binarized motion information and binarized skin color information, so as to improve the accuracy of the determined palm area.

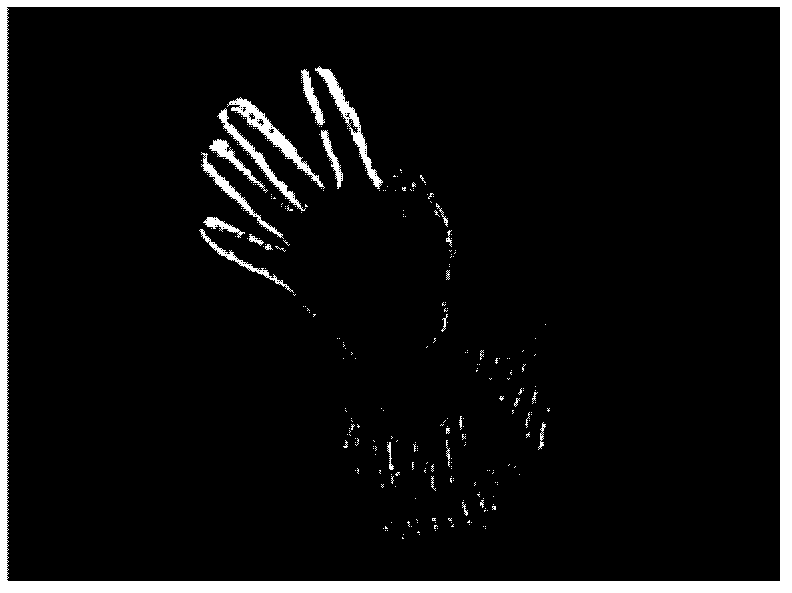

[0079] The binary image corresponding to the obtained palm area is shown in Figure 5. In Figure 5, white indicates the palm area and black indicates other areas, so the pixel value in the palm area is 1 and the pixel value in other areas is 0. Of course, black can al...
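Step B1 can be sketched as follows. The visible text says only that the palm area is determined by combining the two binarized maps; the pixel-wise logical AND used here is an assumption, chosen because it keeps exactly the pixels that are both moving and skin-colored. The function name `fuse_masks` is hypothetical. The output follows the convention described above: 1 (white) inside the palm area and 0 (black) elsewhere.

```python
from typing import List

Mask = List[List[int]]  # binary map, values 0 or 1


def fuse_masks(motion: Mask, skin: Mask) -> Mask:
    """Pixel-wise AND of two binary maps of identical size (assumed fusion rule)."""
    same_shape = len(motion) == len(skin) and all(
        len(m_row) == len(s_row) for m_row, s_row in zip(motion, skin)
    )
    if not same_shape:
        raise ValueError("motion and skin maps must have the same dimensions")
    # A pixel belongs to the palm area only if it is marked in both maps.
    return [
        [m & s for m, s in zip(m_row, s_row)]
        for m_row, s_row in zip(motion, skin)
    ]


if __name__ == "__main__":
    motion = [[1, 1, 0],
              [0, 1, 1]]
    skin = [[1, 0, 0],
            [1, 1, 0]]
    for row in fuse_masks(motion, skin):
        print(row)
```

With an AND rule, a static skin-colored region such as a face is suppressed by the motion map, and a moving non-skin object is suppressed by the skin map, which is consistent with the stated goal of a more accurate palm area.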
