Background reconstruction method based on gray extremum

A background reconstruction technique based on grayscale extreme values, applied in the field of background reconstruction, that addresses the problems of computational load and erroneous reconstruction results.


Detailed Description of the Embodiments

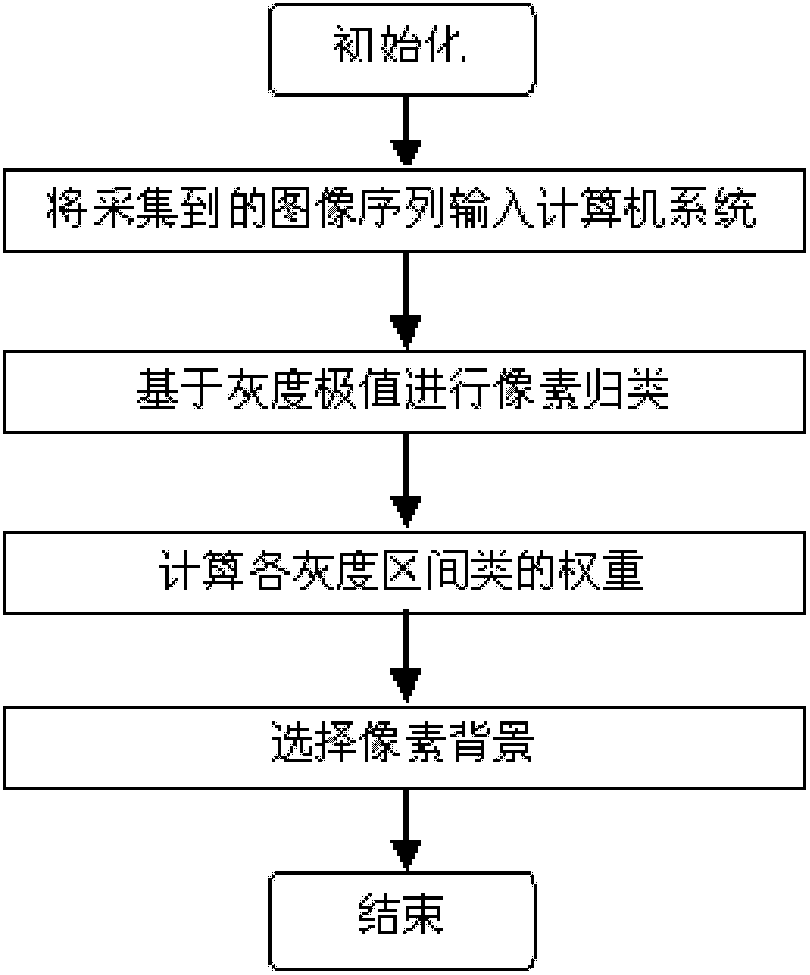

[0057] The background reconstruction method based on grayscale extreme values of the present invention comprises the following steps:

[0058] Step 1: Read an N-frame image sequence $(f_1, f_2, \ldots, f_N)$ into the computer system used to reconstruct the background image of the scene;
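Because the classification in Step 2 operates pixel by pixel, it helps to picture how the N frames are regrouped into one gray-value sequence per pixel. The following is a minimal sketch under stated assumptions: frames are already decoded into same-sized 2-D lists of grayscale values, and the helper name `pixel_sequences` is hypothetical, not part of the patent.

```python
from typing import List, Sequence

# Assumption: one grayscale frame is given as rows of integer gray values.
Frame = Sequence[Sequence[int]]


def pixel_sequences(frames: Sequence[Frame]) -> List[List[List[int]]]:
    """Regroup frames f_1..f_N into per-pixel gray sequences (hypothetical helper).

    Returns seq where seq[y][x] == [f_1(x, y), f_2(x, y), ..., f_N(x, y)].
    """
    if not frames:
        raise ValueError("need at least one frame")
    height, width = len(frames[0]), len(frames[0][0])
    # Every frame must have the same size, otherwise pixels cannot be matched up.
    for f in frames:
        if len(f) != height or any(len(row) != width for row in f):
            raise ValueError("all frames must share the same size")
    return [[[f[y][x] for f in frames] for x in range(width)]
            for y in range(height)]


# Three 1x2 frames: the right-hand pixel jumps to 200 in the second frame.
frames = [[[10, 20]], [[12, 200]], [[11, 22]]]
seq = pixel_sequences(frames)
print(seq[0][0], seq[0][1])
```

With these three frames, the left pixel yields the sequence `[10, 12, 11]` and the right pixel yields `[20, 200, 22]`; each such sequence is the input that Step 2 classifies.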

[0059] Step 2: Pixel grayscale classification based on grayscale extreme values

[0060] The central idea of pixel grayscale classification based on grayscale extreme values is as follows. The image data are divided into several grayscale intervals, each represented by a minimum value and a maximum value. When a new data value is input, the distance between it and each grayscale interval class formed so far is calculated. If the distance to the nearest grayscale interval class is less than or equal to the set threshold, the new data value is assigned to that nearest grayscale interval class; otherwise, a new grayscale inter...
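The classification rule above can be sketched for a single pixel's gray sequence. This is an illustrative sketch, not the patented implementation: the source text is truncated before it defines the distance or the interval update, so two choices here are assumptions. The distance from a value to an interval [min, max] is taken as 0 inside the interval and otherwise the gap to the nearer end, and an interval that absorbs a value is widened to cover it. The names `GrayInterval`, `classify`, and `threshold` are hypothetical.

```python
from typing import List, Optional, Sequence


class GrayInterval:
    """A grayscale interval class represented by its minimum and maximum value."""

    def __init__(self, value: int) -> None:
        self.lo = value
        self.hi = value

    def distance(self, value: int) -> int:
        # Assumed distance: 0 inside [lo, hi], otherwise the gap to the nearer end.
        if value < self.lo:
            return self.lo - value
        if value > self.hi:
            return value - self.hi
        return 0

    def absorb(self, value: int) -> None:
        # Assumed update: widen the interval so that it covers the new value.
        self.lo = min(self.lo, value)
        self.hi = max(self.hi, value)


def classify(values: Sequence[int], threshold: int) -> List[GrayInterval]:
    """Assign each gray value to its nearest interval class or open a new one."""
    classes: List[GrayInterval] = []
    for v in values:
        # Nearest existing class (ties go to the earlier class); None if none exist yet.
        nearest: Optional[GrayInterval] = min(
            classes, key=lambda c: c.distance(v), default=None)
        if nearest is not None and nearest.distance(v) <= threshold:
            nearest.absorb(v)
        else:
            classes.append(GrayInterval(v))
    return classes


result = classify([10, 12, 11, 200, 203, 13], threshold=5)
print([(c.lo, c.hi) for c in result])
```

For the sequence `[10, 12, 11, 200, 203, 13]` with a threshold of 5, the values near 10 collect into the interval (10, 13) and the two bright values form a second interval (200, 203). Representing each class only by its extremes keeps the per-value work to a few comparisons per existing class. Under this assumed update rule, intervals can grow over time, so the choice of threshold directly controls how coarse the classes become.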
