Method for implementing cross-media retrieval by fusing information from different modalities

A cross-modal media technology, applied in special-purpose data processing applications, instruments, electrical digital data processing, etc. It addresses problems such as the large amount of manual annotation required and the inability to obtain media objects directly, and achieves powerful functionality and high precision.


AI Technical Summary

Problems solved by technology

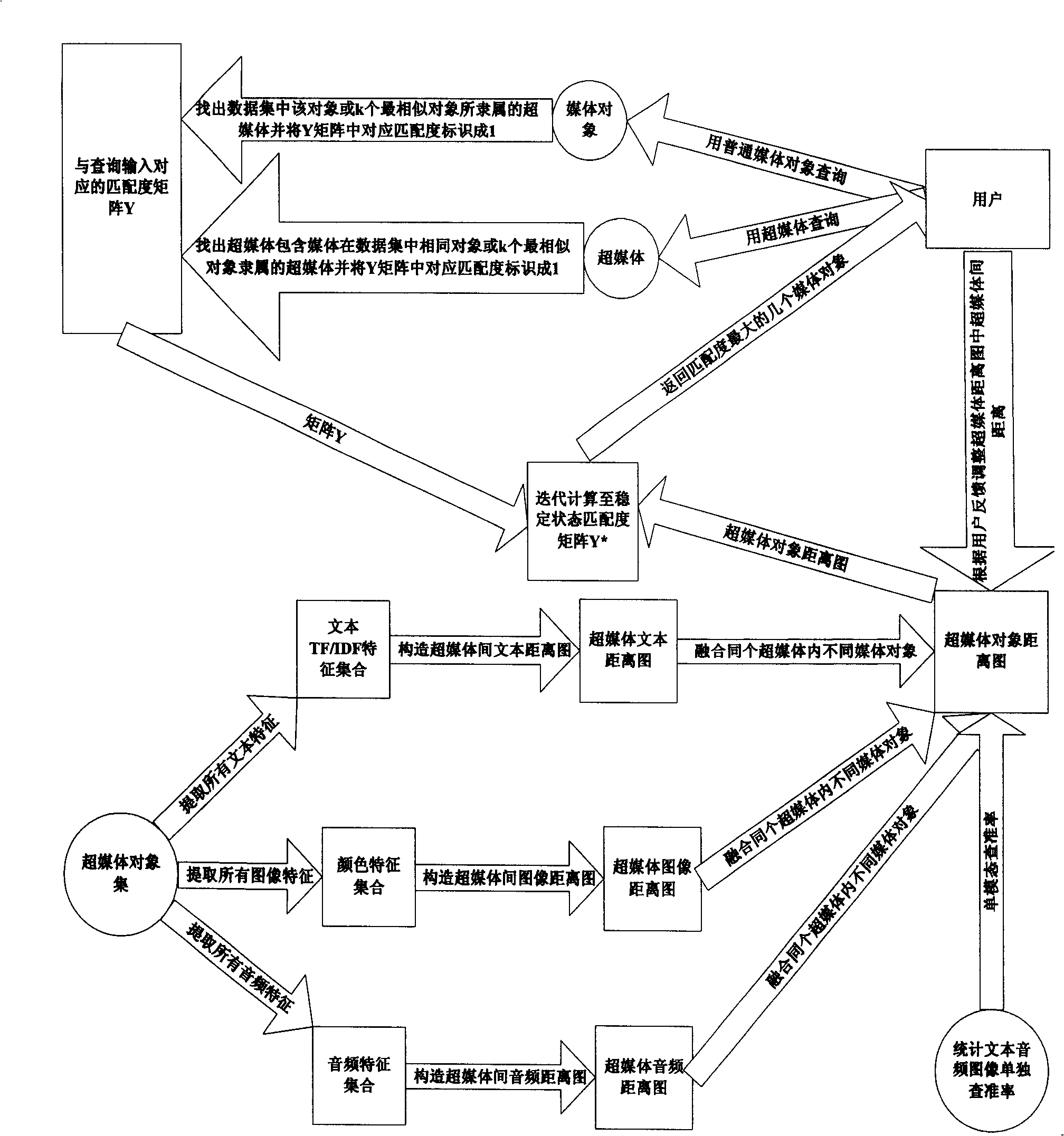


Embodiment

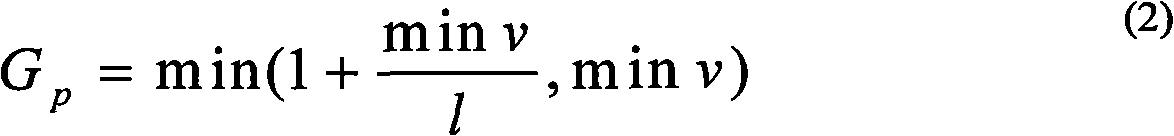

[0064] Assume there are 1000 hypermedia objects comprising 950 images, 100 sound clips, and 800 texts. First, extract the color features and texture features of all images, where the color features include the color histogram, color moments, and color coherence vector, and the texture features include coarseness, directionality, and contrast; then calculate the pairwise distances between all images. For the sound clips, extract the Mel-frequency cepstral coefficients (MFCC) and calculate the distances between all sound objects. For the texts, vectorize each text by term frequency-inverse document frequency (TF-IDF) and then calculate the distance between every two text objects. After the media object distances have been calculated, normalize the image distances, text distances, and sound distances separately. Then establish an audio distance map A, an image distance map I, and a text distance map T between the hypermedia objects. To establish the audio distance map A, first, for any hypermedia objects A and B, fir...
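The per-modality distance step described in [0064] can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the patent's implementation: images are assumed to be lists of RGB tuples; only the color histogram and color moments are computed (the color coherence vector, the texture features, and MFCC extraction for sound are omitted); Euclidean distance is assumed for image features, cosine distance for TF-IDF text vectors, and min-max scaling for the "normalize separately" step. All function names are hypothetical.

```python
import math
from collections import Counter

# Hypothetical sketch of the per-modality distance step; not the patent's code.
# Images are assumed to be lists of (r, g, b) tuples with channels in 0..255.

def color_histogram(pixels, bins=4):
    """Quantize each RGB channel into `bins` levels; return a normalized histogram."""
    hist = [0.0] * (bins ** 3)
    step = 256 / bins
    for r, g, b in pixels:
        idx = (int(r // step) * bins + int(g // step)) * bins + int(b // step)
        hist[idx] += 1
    return [h / len(pixels) for h in hist]

def color_moments(pixels):
    """Mean, standard deviation and signed cube-root skewness per channel (9 values)."""
    feats = []
    n = len(pixels)
    for c in range(3):
        vals = [p[c] / 255 for p in pixels]  # scale to [0, 1] to match the histogram
        mean = sum(vals) / n
        var = sum((v - mean) ** 2 for v in vals) / n
        third = sum((v - mean) ** 3 for v in vals) / n
        feats.extend([mean, math.sqrt(var), math.copysign(abs(third) ** (1 / 3), third)])
    return feats

def euclidean(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def tfidf_vectors(docs):
    """docs: list of token lists -> list of sparse {term: tf * idf} dicts."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        vectors.append({t: (c / len(doc)) * math.log(n / df[t]) for t, c in counts.items()})
    return vectors

def cosine_distance(u, v):
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    nu = math.sqrt(sum(w * w for w in u.values()))
    nv = math.sqrt(sum(w * w for w in v.values()))
    if nu == 0 or nv == 0:
        return 1.0  # treat an all-zero vector as maximally distant
    return max(0.0, 1.0 - dot / (nu * nv))  # clamp tiny negative rounding errors

def pairwise(items, dist):
    """Full pairwise distance matrix for one modality."""
    n = len(items)
    return [[dist(items[i], items[j]) for j in range(n)] for i in range(n)]

def normalize(matrix):
    """Min-max scale a distance matrix to [0, 1] (assumed normalization scheme)."""
    flat = [d for row in matrix for d in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0 for _ in row] for row in matrix]
    return [[(d - lo) / (hi - lo) for d in row] for row in matrix]

# Toy usage: two reddish images and one blue image; three short texts.
images = [[(255, 0, 0)] * 4, [(250, 10, 5)] * 4, [(0, 0, 255)] * 4]
img_feats = [color_histogram(p) + color_moments(p) for p in images]
img_dist = normalize(pairwise(img_feats, euclidean))

texts = [["red", "car", "road"], ["red", "car"], ["blue", "sea"]]
txt_dist = normalize(pairwise(tfidf_vectors(texts), cosine_distance))
```

Because each modality is normalized on its own matrix, image, text, and sound distances end up on a common [0, 1] scale before any cross-modal combination; how the patent then builds the distance maps A, I, and T is not recoverable from the truncated text.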
