For example, in the case of data interruption due to a poor network condition, the user may be required to access the content again.

Unfortunately, multimedia content is represented in a streaming file format, so that a user has to view the file from the beginning in order to look for the exact point where the first user left off.

If the multimedia file is viewed by streaming, the user must sit through repeated buffering to find the last-accessed position, wasting much time.

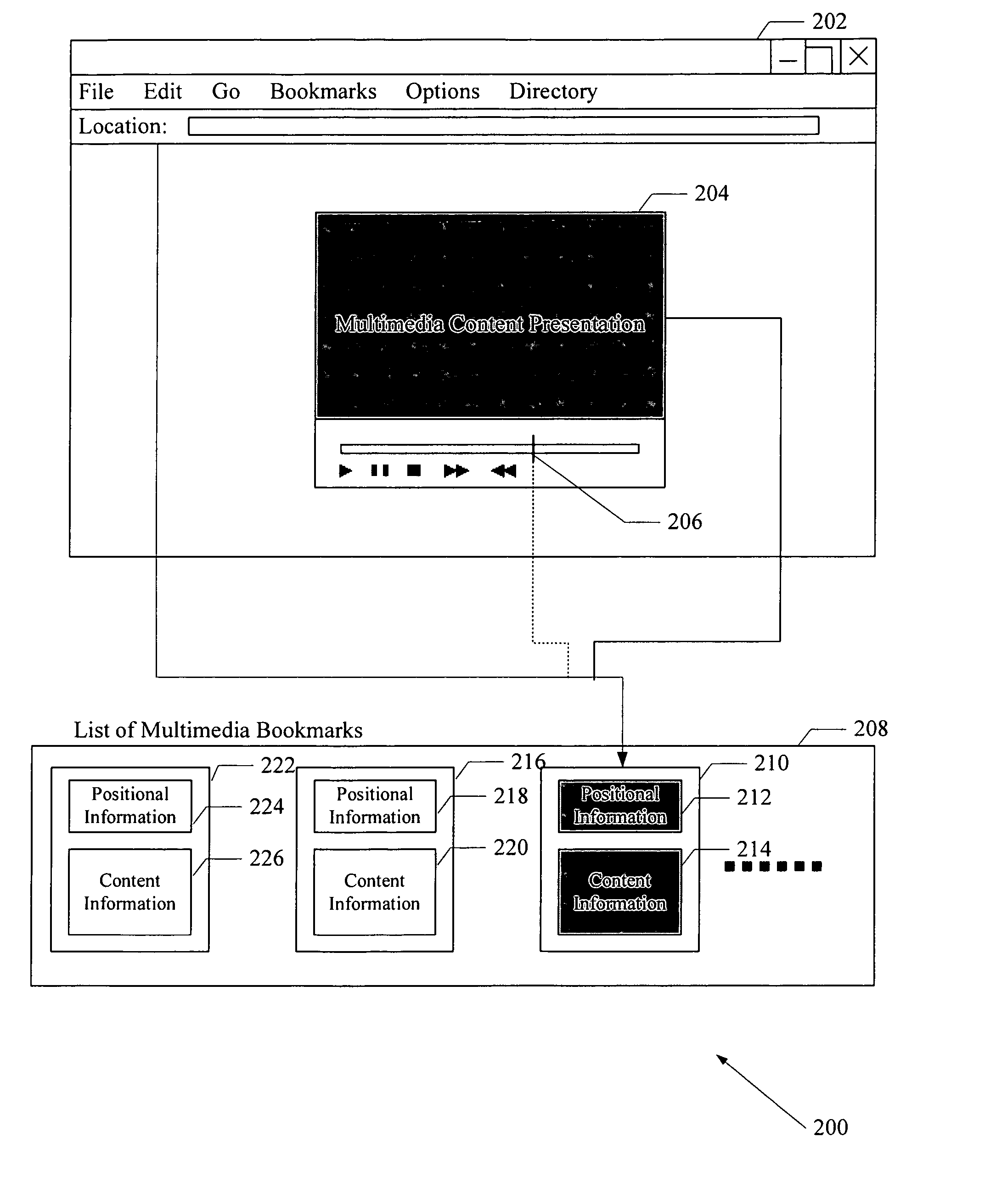

Even with a conventional bookmark that stores a bookmarked position, the same problem occurs in two cases: when the multimedia content is delivered as a live broadcast, since the bookmarked position within the content is usually not available, and when the user wants to replay one of the variations of the bookmarked multimedia content.

Further, conventional bookmarks do not provide a convenient way of switching between different data formats.

Similarly, if a bookmark incorporating time information were used to save the last-accessed segment during a real-time broadcast, the bookmark would not be effective during later access, because the later available version may have been edited or a time code may not have been available during the real-time broadcast.

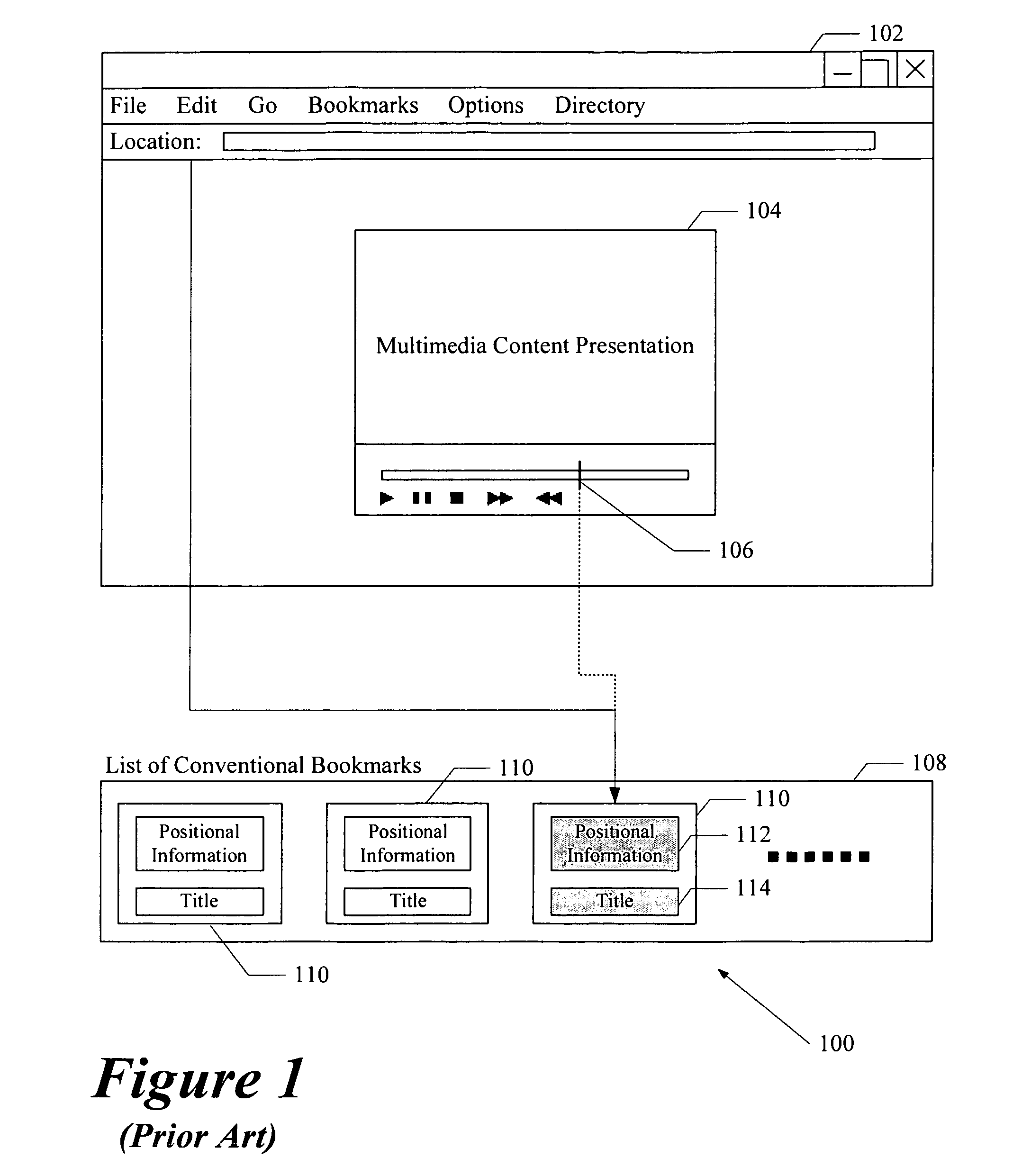

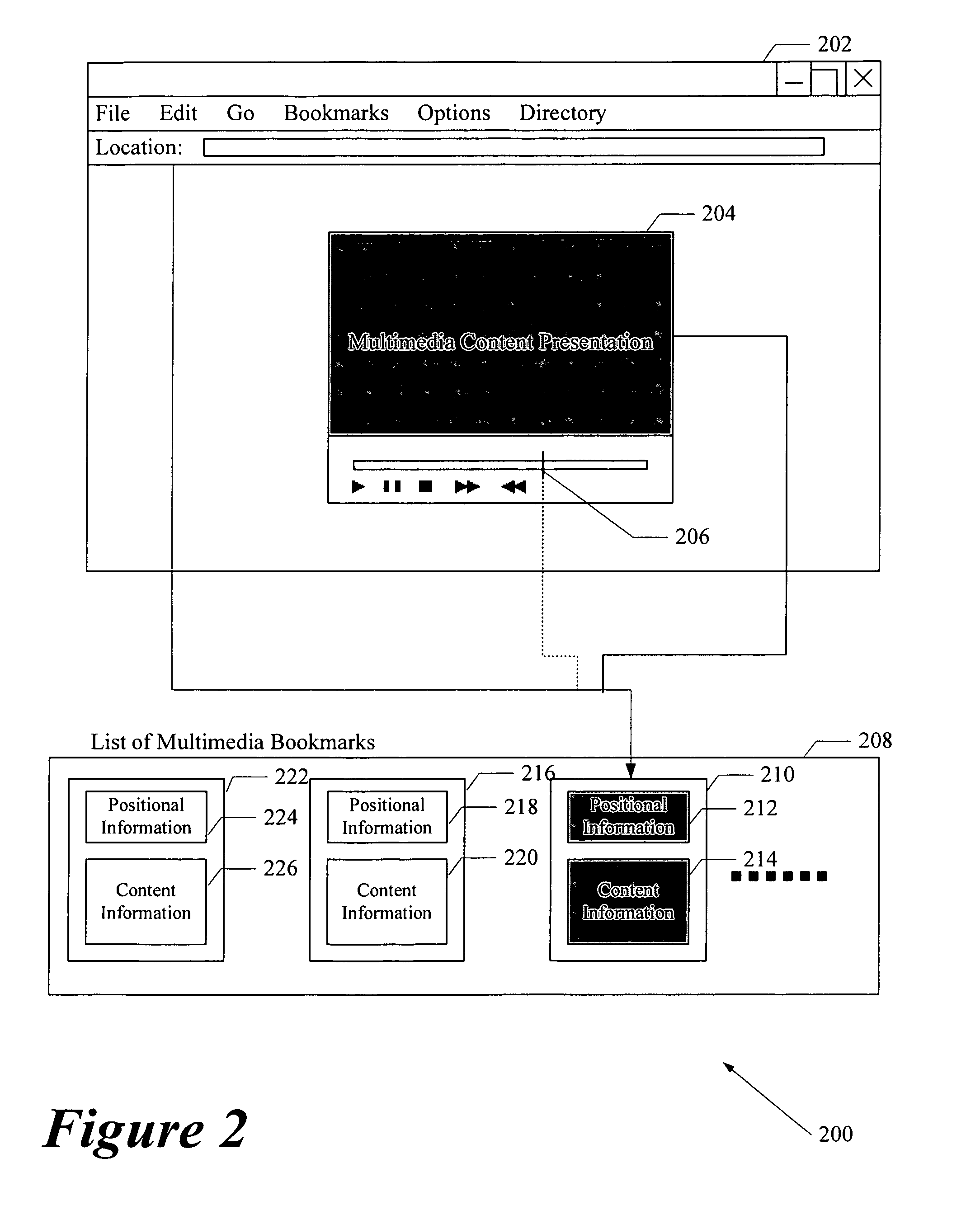

This results in mismatches of time points because a specific time point of the source video content may be presented as different media time points in the five video files.

When a multimedia bookmark is used, these position mismatches cause playback to start at the wrong position.
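
The mismatch can be made concrete with a minimal sketch. It assumes, purely for illustration, that each encoded variation of the same source begins at a different offset into the source content; the variation names and offset values below are hypothetical and do not come from the text.

```python
# Hypothetical start offsets (seconds into the source content) at which
# each encoded variation begins. The values are illustrative assumptions.
START_OFFSETS = {
    "variation_a": 0.0,
    "variation_b": 12.5,
    "variation_c": 30.0,
}


def media_time(source_time, start_offset):
    """Map a time point of the source content to the media time of one file."""
    return source_time - start_offset


def naive_bookmark_error(bookmark_file, playback_file, offsets=START_OFFSETS):
    """Positional error in seconds (measured in source time) when a media-time
    bookmark made in one file is replayed unchanged in another file."""
    return offsets[playback_file] - offsets[bookmark_file]


# The same source time point appears at a different media time in each file.
source_point = 100.0
per_file = {name: media_time(source_point, off) for name, off in START_OFFSETS.items()}
```

Under these assumptions, a bookmark stored as a media time in one variation lands at a shifted source position in any other variation, and the shift equals the difference between the two start offsets.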

The entire multimedia presentation is often lengthy.

However, there are frequent occasions when the presentation is interrupted, voluntarily or forcibly, and terminates before it finishes.

However, EPG's two-dimensional presentation (channels vs. time slots) becomes cumbersome as terrestrial, cable, and satellite systems send out thousands of programs through hundreds of channels.

Navigation through a large table of rows and columns in order to search for desired programs is frustrating.

However, some problems remain for PVR-enabled STBs.

The first problem is that even the latest STBs alone cannot fully satisfy users' ever-increasing desire for diverse functionalities.

The STBs now on the market are very limited in computing power and memory, so it is not easy to execute most CPU- and memory-intensive applications.

However, the generation of such video metadata usually requires intensive computation and a human operator's help, so practically speaking, it is not feasible to generate the metadata in the current STB.

The second problem is the discrepancy between two time instants: the time instant at which the STB starts recording the user-requested TV program, and the time instant at which the TV program is actually broadcast.

This time mismatch could bring some inconvenience to the user who wants to view only the requested program.
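
As a minimal sketch of this discrepancy, the following computes how much of a recording falls outside the requested program when the recording start and the actual broadcast start differ. The function names and the example timestamps are hypothetical and only illustrate the arithmetic.

```python
from datetime import datetime


def leading_unwanted_seconds(recording_start, actual_broadcast_start):
    """Seconds of unrelated material recorded before the program really begins.
    Returns 0.0 if the program started at or before the recording start."""
    delta = (actual_broadcast_start - recording_start).total_seconds()
    return max(0.0, delta)


def missed_seconds(recording_start, actual_broadcast_start):
    """Seconds of the program lost because it began before recording started."""
    delta = (recording_start - actual_broadcast_start).total_seconds()
    return max(0.0, delta)


# Illustrative values: recording starts at the scheduled time, but the
# program is actually broadcast three and a half minutes later.
scheduled = datetime(2000, 1, 1, 20, 0, 0)
actual = datetime(2000, 1, 1, 20, 3, 30)
unwanted = leading_unwanted_seconds(scheduled, actual)
```

In this example the viewer would have to skip the leading portion manually, because the STB has no information about where the requested program actually begins.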

While high-level image descriptors are potentially more intuitive for common users, the derivation of high-level descriptors is still in its experimental stages in the field of computer vision and requires complex vision processing.

On the other hand, despite their efficiency and ease of implementation, the main disadvantage of low-level image features is that they are perceptually non-intuitive for both expert and non-expert users and therefore do not normally represent users' intent effectively.

Perceptually similar images are often highly dissimilar in terms of low-level image features.

Searches made by low-level features are often unsuccessful, and it usually takes many trials to find images that satisfy a user.

When the refinement is made by adjusting a set of low-level feature weights, however, the user's intent is still represented by low-level features, and their basic limitations remain.

Due to its limited feasibility for general image objects and complex processing, its utility is still restricted.

However, it is also known that those approaches are not adequate for high-dimensional feature vector spaces, and thus they are useful only in low-dimensional feature spaces.

The prior art method, however, faces the two major problems described below.

The first problem of the prior art method is that it requires additional storage to store the new version of an edited video file.

In this case, the storage is wasted storing duplicated portions of the video.

The second problem with the prior art method is that a whole new set of metadata has to be generated for a newly created video.

If the metadata are not edited in accordance with the editing of the video, the metadata may not accurately reflect the content, even if the metadata for the specific segment of the input video have already been constructed.

If the display size of a client device is smaller than the size of the image, sub-sampling and/or cropping to fit the client display must reduce the spatial resolution of the image.

In such cases, users very often have difficulty recognizing text or human faces because of the excessive resolution reduction.
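
The effect of fitting an image to a small display can be sketched as follows. This is a minimal illustration assuming uniform sub-sampling that preserves the aspect ratio; the image size, display size, and text height used in the example are hypothetical values, not figures from the text.

```python
def fit_scale(image_size, display_size):
    """Uniform scale factor (at most 1.0) that fits the image inside the display."""
    img_w, img_h = image_size
    disp_w, disp_h = display_size
    return min(1.0, disp_w / img_w, disp_h / img_h)


def scaled_height(region_height_px, scale):
    """Height in pixels of an image region (e.g. a line of text) after scaling."""
    return region_height_px * scale


# Illustrative values: a 1600x1200 image shown on a 320x240 client display.
scale = fit_scale((1600, 1200), (320, 240))
# A line of text that was 40 pixels tall in the original image.
text_height_after = scaled_height(40, scale)
```

With these assumed numbers the image is reduced to one fifth of its original resolution in each dimension, and a 40-pixel line of text shrinks to roughly 8 pixels, which shows how quickly text or faces can become hard to recognize.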

Although the importance value may be used to provide information on which part of the image can be cropped, it does not provide a quantified measure of perceptibility indicating the degree of allowable transcoding.

For example, the prior art does not provide the quantitative information on the allowable compression factor with which the important regions can be compressed while preserving the minimum fidelity that an author or a publisher intended.

The InfoPyramid neither provides quantitative information about how much the spatial resolution of the image can be reduced nor ensures that the user will perceive the transcoded image as the author or publisher initially intended.

Even if abrupt scene changes are relatively easy to detect, it is more difficult to identify special effects, such as dissolve and wipe.

However, these approaches usually produce many false alarms, and it is very hard for humans to locate exactly the various types of shots (especially dissolves and wipes) in a given video, even when the dissimilarity measure between two frames is plotted, for example in a 1-D graph where the horizontal axis represents the time of a video sequence and the vertical axis represents the dissimilarity values between the histograms of the frames along time.
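
A 1-D dissimilarity curve of this kind can be sketched as below. This is a generic histogram-difference measure (the L1 distance between normalized intensity histograms of consecutive frames), chosen here as an assumption for illustration; the text does not specify which measure the prior approaches use. Frames are modeled as non-empty flat lists of pixel intensities in the range 0 to 255.

```python
def histogram(frame, bins=4, max_value=256):
    """Normalized intensity histogram of one frame (a non-empty list of pixels)."""
    counts = [0] * bins
    width = max_value / bins
    for pixel in frame:
        # Clamp to the last bin so that max_value - 1 is always in range.
        counts[min(int(pixel // width), bins - 1)] += 1
    total = len(frame)
    return [c / total for c in counts]


def dissimilarity_curve(frames, bins=4):
    """L1 distance between histograms of each pair of consecutive frames.
    Index i of the result compares frame i with frame i + 1."""
    hists = [histogram(f, bins) for f in frames]
    return [
        sum(abs(a - b) for a, b in zip(h1, h2))
        for h1, h2 in zip(hists, hists[1:])
    ]


# Illustrative input: two identical dark frames followed by a bright frame,
# i.e. an abrupt change between the second and third frames.
frames = [[10] * 4, [10] * 4, [200] * 4]
curve = dissimilarity_curve(frames)
```

An abrupt cut shows up as a single large value in such a curve, whereas a gradual transition such as a dissolve spreads the change over many small values, which is one reason these effects are hard to locate by inspecting the plot.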

They also require a high computational load to handle the different shapes, directions and patterns of various wipe effects.

As contents become readily available on wide area networks such as the Internet, archiving, searching, indexing and locating desired content in large volumes of multimedia containing image and video, in addition to the text information, will become even more difficult.

However, most of the compressed-domain methods restrict the detection of text to I-frames of a video because it is time-consuming to obtain the AC values in DCT for inter-frame coded frames.
