Data processing method and device, AI chip, electronic equipment and storage medium

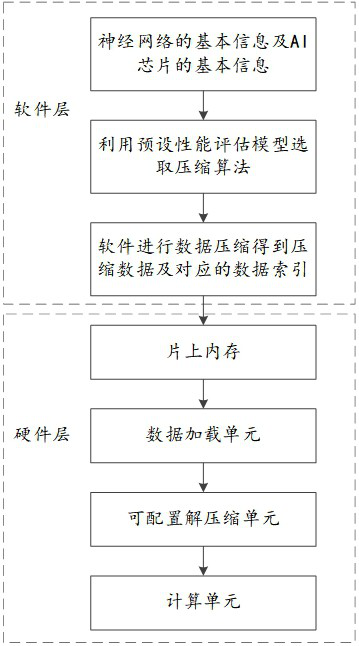

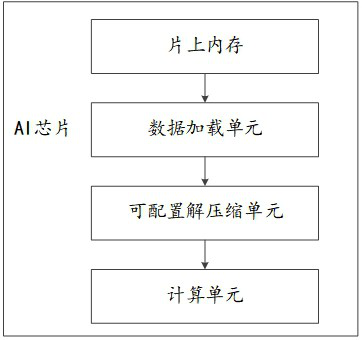

A data processing and chip technology applied in the field of neural networks. It addresses shortcomings of existing approaches, such as limited benefit, no support for sparse-network compression and decompression operations, and poor fit for current network scenarios, and it achieves improved efficiency and good hardware performance.


Embodiment approach

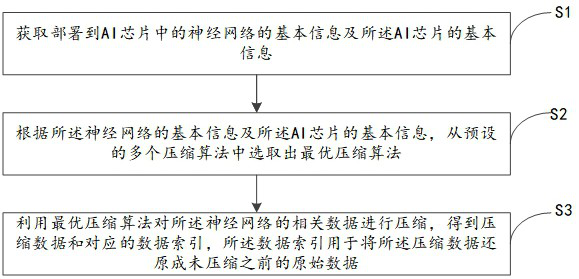

[0068] In an optional implementation, compressing the relevant data of the neural network with the optimal compression algorithm may proceed as follows: divide the relevant data of the neural network into blocks according to the format required by the hardware; align each data block according to the alignment requirements of the hardware; and compress each aligned data block with the optimal compression algorithm, again according to the alignment requirements of the hardware, to obtain the corresponding compressed data and the corresponding data index. This ensures that the compressed data conforms to the alignment requirements of the hardware.
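The block, align, and compress sequence in [0068] can be illustrated with a minimal Python sketch. Everything specific here is an assumption made for illustration: the block size and alignment values are hypothetical, `zlib` merely stands in for the unspecified "optimal compression algorithm", and the index layout (offset, compressed length, original length per block) is one possible interpretation of the "data index", not the format described in the patent.

```python
import zlib

BLOCK_SIZE = 1024  # hypothetical block size required by the hardware, in bytes
ALIGNMENT = 64     # hypothetical alignment required by the hardware, in bytes


def pad_to_alignment(data: bytes, alignment: int) -> bytes:
    """Zero-pad data so that its length is a multiple of alignment."""
    remainder = len(data) % alignment
    if remainder == 0:
        return data
    return data + b"\x00" * (alignment - remainder)


def compress_blocks(data: bytes, block_size: int = BLOCK_SIZE,
                    alignment: int = ALIGNMENT):
    """Split data into blocks, align each block, and compress it.

    Returns the compressed stream and an index with one
    (offset, compressed_length, original_length) entry per block.
    """
    stream = bytearray()
    index = []
    for start in range(0, len(data), block_size):
        block = data[start:start + block_size]
        aligned = pad_to_alignment(block, alignment)
        compressed = zlib.compress(aligned)  # stand-in compressor (assumption)
        index.append((len(stream), len(compressed), len(block)))
        # Pad the compressed block so the next one starts at an aligned offset.
        stream += pad_to_alignment(compressed, alignment)
    return bytes(stream), index


def decompress_block(stream: bytes, entry) -> bytes:
    """Recover one original block from the stream using its index entry."""
    offset, compressed_length, original_length = entry
    aligned = zlib.decompress(stream[offset:offset + compressed_length])
    return aligned[:original_length]  # drop the alignment padding
```

In this sketch, padding after compression keeps every block at an aligned offset in the output stream, and the index lets any single block be located and decompressed without scanning the blocks before it.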

[0069] The relevant data (uncompressed data) of the neural network may be large, for example 100M, while the computing unit may only be able to complete 1M of task calculation at a time. In that case, the hardware needs to load data into the computing unit for calculation 100 times. The data loaded each ...
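The load count in [0069] is a ceiling division of the data size by the capacity of the computing unit. The following short Python sketch shows that arithmetic under stated assumptions: sizes are treated as bytes with 1M taken as 2**20, and the function names `num_loads` and `iter_tiles` are hypothetical helpers, not terms from the patent.

```python
M = 2 ** 20  # assumption: "1M" is interpreted as 2**20 bytes


def num_loads(total_size: int, capacity: int) -> int:
    """Number of loads needed to process total_size with a unit of given capacity."""
    return (total_size + capacity - 1) // capacity  # integer ceiling division


def iter_tiles(total_size: int, capacity: int):
    """Yield (offset, length) for each chunk loaded into the computing unit."""
    for offset in range(0, total_size, capacity):
        yield offset, min(capacity, total_size - offset)


loads = num_loads(100 * M, 1 * M)  # the 100M / 1M case from the text
```

With these assumptions, `num_loads(100 * M, 1 * M)` evaluates to 100, which matches the figure given in the paragraph; a size that is not an exact multiple of the capacity needs one additional, shorter load.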
