Monocular depth estimation method fusing multi-modal information

A depth estimation and multimodal technology, applied in a cross-disciplinary field, that achieves high depth estimation accuracy.

Embodiment Construction

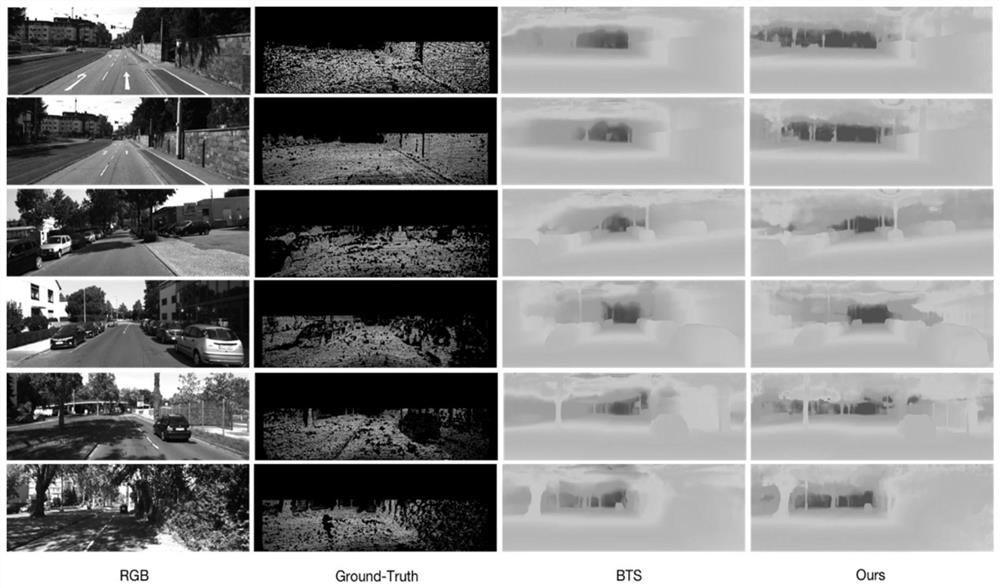

[0034] The present invention will be further described below with reference to the accompanying drawings and specific embodiments.

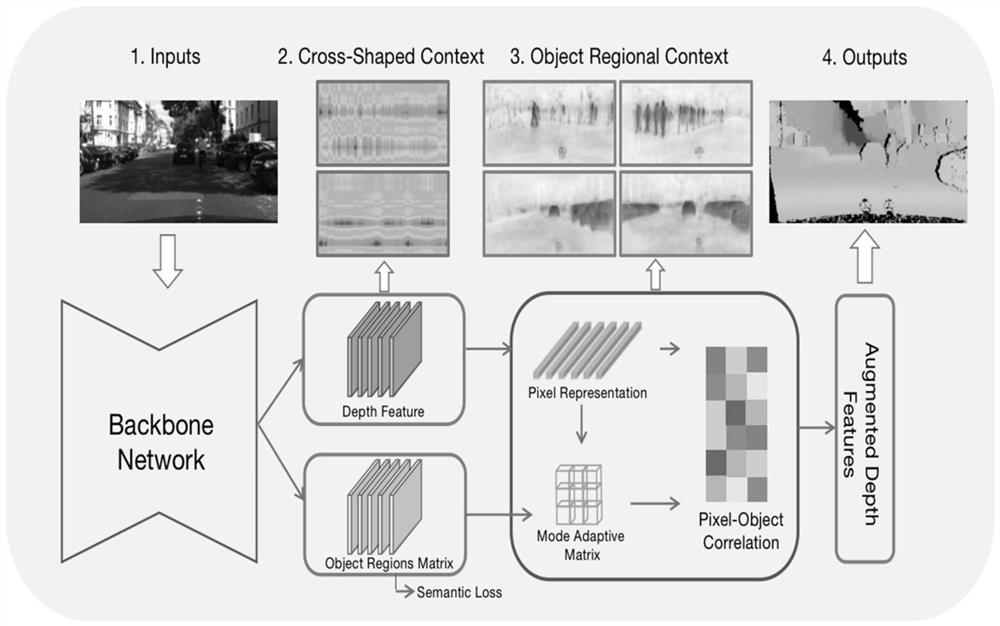

[0035] The process of the present invention is shown in Figure 1 and includes the following steps:

[0036] Step 1, the backbone network extracts the basic feature map.

[0037] After the image is read, features are extracted from the input RGB image. Suitable deep convolutional neural networks include ResNet (deep residual network) and HRNet (deep high-resolution representation learning network), both pre-trained on the MIT ADE20K dataset.
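As a rough illustration of the residual connection that gives ResNet its name, the following NumPy sketch computes y = ReLU(x + F(x)) for a single block. It is a toy example under stated assumptions, not the backbone of the invention: the names `conv1x1` and `residual_block` are hypothetical, the weights are random rather than pre-trained on ADE20K, and real ResNet blocks also use 3x3 convolutions and batch normalization.

```python
import numpy as np


def conv1x1(x, weight):
    """Pointwise convolution that mixes channels at every pixel.

    x: feature map of shape (C_in, H, W); weight: array of shape (C_out, C_in).
    """
    return np.einsum("oc,chw->ohw", weight, x)


def residual_block(x, w1, w2):
    """Toy residual block: y = ReLU(x + F(x)), with F = conv -> ReLU -> conv."""
    hidden = np.maximum(conv1x1(x, w1), 0.0)   # first conv followed by ReLU
    residual = conv1x1(hidden, w2)             # second conv, back to C_in channels
    return np.maximum(x + residual, 0.0)       # skip connection, then ReLU


# Hypothetical usage: a 3-channel 8x8 input standing in for an RGB image.
rng = np.random.default_rng(0)
image = rng.random((3, 8, 8))
w1 = rng.standard_normal((16, 3)) * 0.1   # illustrative random weights
w2 = rng.standard_normal((3, 16)) * 0.1
features = residual_block(image, w1, w2)
print(features.shape)  # (3, 8, 8): the skip connection preserves the input shape
```

The skip connection requires the block's output to have the same shape as its input, which is why `w2` maps back to the input channel count.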

[0038] Step 2, Cross Region Context Aggregation (CSC)

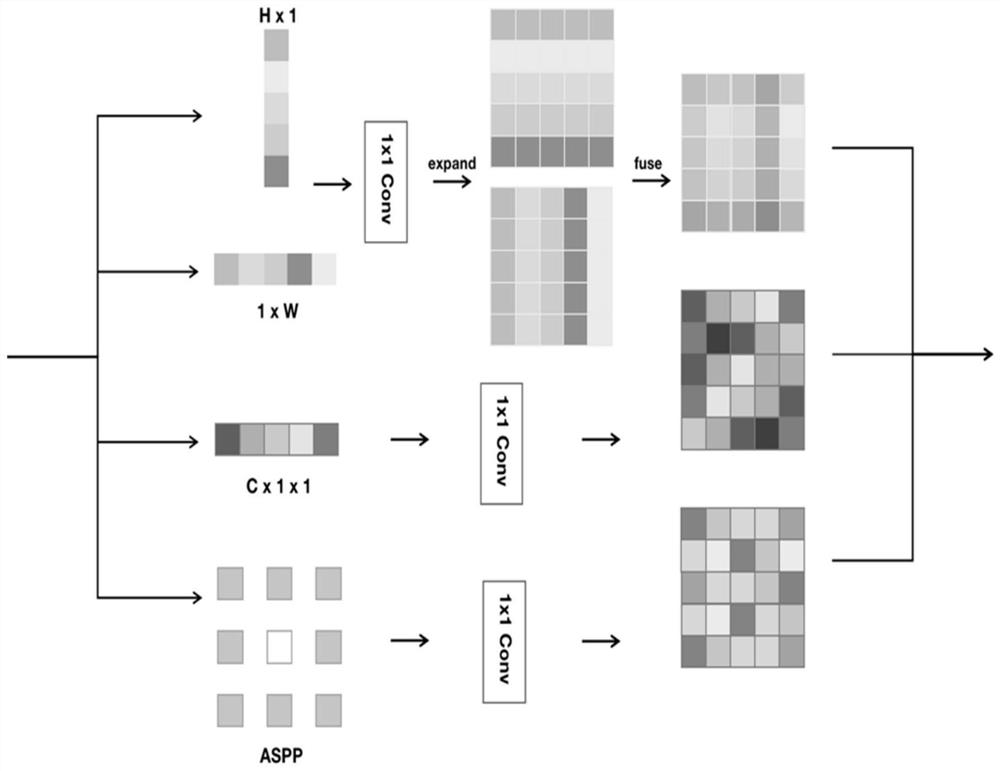

[0039] Existing methods consider context aggregation at multiple scales. For example, the 2018 CVPR article "Deep Ordinal Regression Network for Monocular Depth Estimation" (DORN for short) employs Atrous Spatial Pyramid Pooling (ASPP) to capture spatial context at multiple local scales and employs a full-image encoder (global ave...
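The multi-scale idea behind ASPP can be sketched with a minimal single-channel NumPy example, in which the same 3x3 kernel is applied at several dilation rates and the responses are stacked. This is only an illustration under stated assumptions: the function names `atrous_conv2d` and `aspp_branches` and the rates (1, 2, 4) are hypothetical and are not taken from DORN or from the present invention, and the full-image encoder is left out.

```python
import numpy as np


def atrous_conv2d(x, kernel, rate):
    """Single-channel 3x3 atrous (dilated) convolution with zero padding.

    x: array of shape (H, W); kernel: array of shape (3, 3);
    rate: dilation rate (>= 1). The output has the same shape as x.
    """
    h, w = x.shape
    padded = np.pad(x, rate)  # `rate` zero pixels on every side
    out = np.zeros((h, w), dtype=float)
    for i in range(3):
        for j in range(3):
            # Kernel taps are spaced `rate` pixels apart.
            out += kernel[i, j] * padded[i * rate:i * rate + h,
                                         j * rate:j * rate + w]
    return out


def aspp_branches(x, kernel, rates=(1, 2, 4)):
    """Stack the responses at several dilation rates along a new channel axis."""
    return np.stack([atrous_conv2d(x, kernel, r) for r in rates], axis=0)


# Hypothetical usage: a single bright pixel shows how the receptive field grows.
feature_map = np.zeros((9, 9))
feature_map[4, 4] = 1.0
branches = aspp_branches(feature_map, np.ones((3, 3)))
print(branches.shape)  # (3, 9, 9): one response map per dilation rate
```

A larger rate spreads the same nine kernel taps over a wider window, so each branch aggregates context at a different local scale without adding parameters.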
