Graph execution pipeline parallel method and device for neural network model calculation

A technology relating to neural network models and execution devices, applied in the field of deep learning. It addresses the low resource utilization and high execution cost of graph execution systems, which limit the speedup ratio and throughput of distributed systems. It makes distributed training easy and lowers the threshold for use.


Embodiment

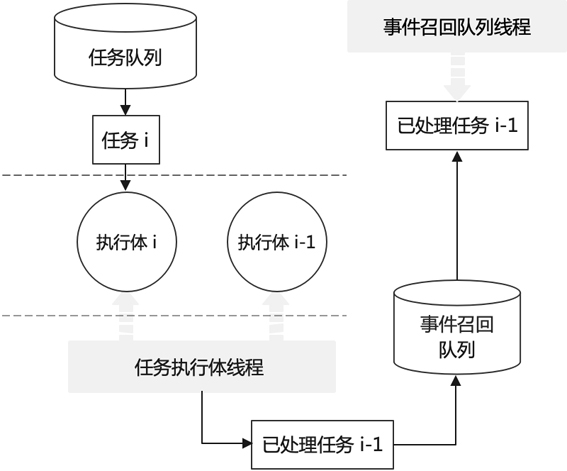

[0073] Referring to Figure 4, the physical computation graph consists of forward operator x -> forward operator y -> forward operator z, followed by backward operator Z -> backward operator Y -> backward operator X. For each operator, an execution body that runs that operator's own kernel function is created. This yields the execution computation graph execution body a -> execution body b -> execution body c -> execution body C -> execution body B -> execution body A, and the execution bodies run the entire computation graph in parallel.
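The one-body-per-operator construction in [0073] can be illustrated with a minimal Python sketch. The `ExecutionBody` class, the `build_execution_graph` helper, and the placeholder kernels are hypothetical names introduced here for illustration; they are not taken from the patent. The sketch only shows how the operator chain x, y, z, Z, Y, X maps onto the body chain a, b, c, C, B, A, with each body holding its own kernel and links to its neighbours.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

@dataclass
class ExecutionBody:
    """Hypothetical model: one execution body per operator, running that operator's kernel."""
    name: str
    operator: str
    kernel: Callable[[float], float]
    # Links are excluded from repr/eq so the doubly linked chain does not recurse.
    upstream: Optional["ExecutionBody"] = field(default=None, repr=False, compare=False)
    downstream: List["ExecutionBody"] = field(default_factory=list, repr=False, compare=False)

def build_execution_graph(operators, body_names, kernels):
    """Create one execution body per operator and link them in graph order."""
    bodies: List[ExecutionBody] = []
    for op, name in zip(operators, body_names):
        prev = bodies[-1] if bodies else None
        body = ExecutionBody(name, op, kernels[op], upstream=prev)
        if prev is not None:
            prev.downstream.append(body)
        bodies.append(body)
    return bodies

# Physical graph from [0073]: forward x -> y -> z, then backward Z -> Y -> X.
operators = ["x", "y", "z", "Z", "Y", "X"]
body_names = ["a", "b", "c", "C", "B", "A"]
# Placeholder kernels (assumption): a real kernel would compute an output tensor.
kernels = {op: (lambda v: v + 1.0) for op in operators}

graph = build_execution_graph(operators, body_names, kernels)
print(" -> ".join(b.name for b in graph))  # a -> b -> c -> C -> B -> A
```

Keeping the kernel inside each body is what lets every body be scheduled independently. Real kernels, device placement, and the parallel runtime are outside the scope of this sketch.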

[0074] At time T1:

[0075] The first batch of data is input to execution body a. Execution body a runs the kernel function of forward operator x and writes the resulting output tensor into the free memory block r11.

[0076] Execution bodies b, c, C, B, and A have no readable input tensor data, so they remain in the waiting state....
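The T1 behaviour in [0075] and [0076] follows a simple readiness rule: a body runs only when it has readable input and a free memory block to write into. The Python sketch below models that rule under stated assumptions. The `MemoryBlock` and `ExecutionBody` classes and the `time_step_t1` helper are hypothetical, and so are the two blocks per body and the block naming scheme `r<body index><block index>` (chosen so that a's first block is r11). It covers only the T1 step described in the text and does not model how tensors are later passed downstream or how blocks are released.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class MemoryBlock:
    """Pre-allocated output buffer owned by one execution body (hypothetical model)."""
    name: str
    tensor: Optional[str] = None  # None means the block is free

@dataclass
class ExecutionBody:
    name: str
    operator: str
    blocks: List[MemoryBlock]
    input_tensor: Optional[str] = None  # readable input tensor, if any
    state: str = "waiting"

    def try_run(self) -> bool:
        """Run the kernel only if an input is readable and an output block is free."""
        free = next((b for b in self.blocks if b.tensor is None), None)
        if self.input_tensor is None or free is None:
            self.state = "waiting"
            return False
        # Placeholder kernel: record which operator produced the output tensor.
        free.tensor = f"{self.operator}({self.input_tensor})"
        self.input_tensor = None  # the input has been consumed
        self.state = "running"
        return True

def time_step_t1(chain: List[ExecutionBody], batch: str) -> Dict[str, str]:
    """T1: the first batch reaches body a; every other body has no readable input."""
    chain[0].input_tensor = batch
    for body in chain:
        body.try_run()
    return {body.name: body.state for body in chain}

names = ["a", "b", "c", "C", "B", "A"]
ops = ["x", "y", "z", "Z", "Y", "X"]
# Hypothetical: two blocks per body, named r<body index><block index>.
chain = [ExecutionBody(n, op, [MemoryBlock(f"r{i}1"), MemoryBlock(f"r{i}2")])
         for i, (n, op) in enumerate(zip(names, ops), start=1)]

states = time_step_t1(chain, "batch1")
print(states)  # {'a': 'running', 'b': 'waiting', 'c': 'waiting', 'C': 'waiting', 'B': 'waiting', 'A': 'waiting'}
print(chain[0].blocks[0])  # MemoryBlock(name='r11', tensor='x(batch1)')
```

Gating each body on both a readable input and a free output block is what later lets several bodies work on different batches at the same time. Here, only body a satisfies both conditions at T1.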
