Photometric Stereo 3D Reconstruction Method Based on Deep Learning for High Frequency Region Enhancement

A photometric stereo technique based on deep learning, applicable to neural-network learning methods, 3D modeling, and complex mathematical operations. It addresses blurred 3D reconstruction results and large errors in high-frequency regions, thereby improving task accuracy and 3D reconstruction accuracy and producing richer detail.


Embodiment Construction

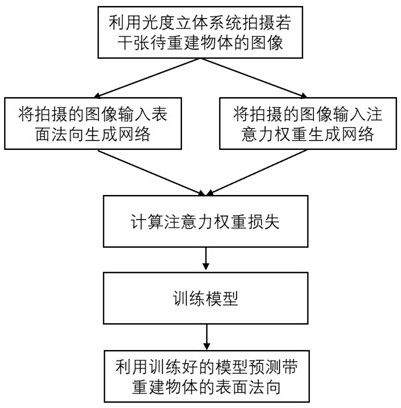

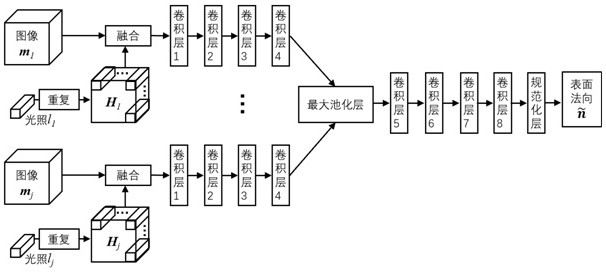

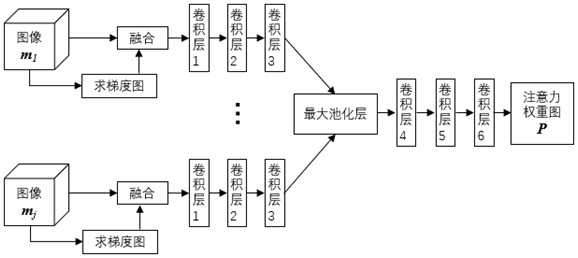

[0045] As shown in Figure 1, a deep-learning-based photometric stereo 3D reconstruction method with high-frequency region enhancement comprises the following steps:

[0046] 1) Capture several images of the object to be reconstructed using the photometric stereo system:

[0047] The object to be reconstructed is photographed under illumination from a single parallel white light source. A Cartesian coordinate system is established with its origin at the center of the object, and the position of the white light source is expressed in this coordinate system by the vector $l = [x, y, z]$;
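The vector $l$ gives the light source position, while photometric stereo computations work with the direction of incoming light. A minimal sketch, assuming that for a parallel source the illumination direction at the object is simply $l$ normalized to unit length; the function name `light_direction` is hypothetical and not from the source.

```python
import math


def light_direction(x: float, y: float, z: float) -> tuple[float, float, float]:
    """Unit vector from the object center (the origin) toward a light at l = [x, y, z].

    Assumption (not stated in the source): with a parallel light source, this
    normalized vector is the illumination direction used for every pixel.
    """
    norm = math.sqrt(x * x + y * y + z * z)
    if norm == 0.0:
        raise ValueError("light position must not coincide with the origin")
    return (x / norm, y / norm, z / norm)
```

For example, a light at $l = [0, 3, 4]$ gives the unit direction `(0.0, 0.6, 0.8)`.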

[0048] The position of the light source is then changed to obtain an image under another lighting direction. Usually at least 10 images under different lighting directions are required, denoted $m_1, m_2, \ldots, m_j$; the corresponding light source positions are denoted $l_1, \ldots$
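For context, the images $m_1, \ldots, m_j$ and their light directions are the same inputs used by classical Lambertian photometric stereo, where each pixel's intensity satisfies $I_k = \rho\, n \cdot s_k$ and the albedo-scaled normal $g = \rho n$ is recovered by least squares from $(S^\top S)\, g = S^\top I$. The sketch below shows only that classical baseline, not the deep-learning method of this patent. It assumes a Lambertian surface with no shadows or specular highlights, unit light directions $s_k$ (for example, normalized from $l_k$), and hypothetical helper names `solve3` and `lambertian_normal`. Three non-coplanar directions suffice mathematically; more images, such as the 10 or more suggested above, make the estimate more robust to noise.

```python
import math


def solve3(a, b):
    """Solve the 3x3 system a @ x = b by Gaussian elimination with partial pivoting."""
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("light directions are degenerate (need 3 non-coplanar)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= f * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * 3
    for r in range(2, -1, -1):
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def lambertian_normal(intensities, directions):
    """Least-squares albedo and unit normal for one pixel (classical baseline).

    intensities: [I_1, ..., I_j], this pixel's values in images m_1..m_j
    directions:  [s_1, ..., s_j], unit light directions as 3-tuples
    """
    if len(intensities) != len(directions) or len(directions) < 3:
        raise ValueError("need at least 3 images with matching light directions")
    # Normal equations (S^T S) g = S^T I, where g = albedo * normal.
    sts = [[sum(s[i] * s[k] for s in directions) for k in range(3)] for i in range(3)]
    sti = [sum(s[i] * v for s, v in zip(directions, intensities)) for i in range(3)]
    g = solve3(sts, sti)
    albedo = math.sqrt(sum(c * c for c in g))
    if albedo == 0.0:
        # Black pixel: the normal is undetermined, so return a default facing +z.
        return 0.0, (0.0, 0.0, 1.0)
    return albedo, tuple(c / albedo for c in g)
```

Applying `lambertian_normal` to every pixel yields a normal map; the method described here instead relies on a deep-learning model aimed at reducing errors in high-frequency regions.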
