Information processing device, information processing method, and information processing program

An information processing device and program technology in the field of neural networks that addresses problems such as the inability to run a neural network in real time.



Embodiment 1

[0033] ***Summary***

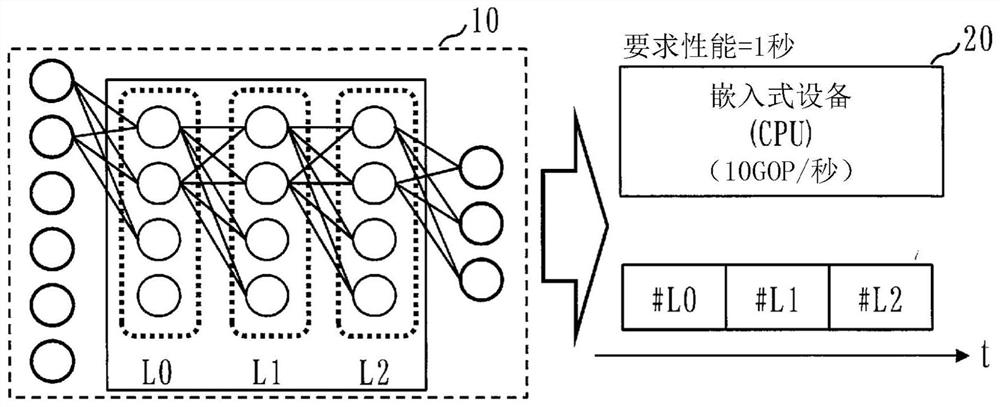

[0034] This embodiment describes how to make a neural network lighter (reduce its weight) when it is to be installed in a device with limited resources, such as an embedded device.

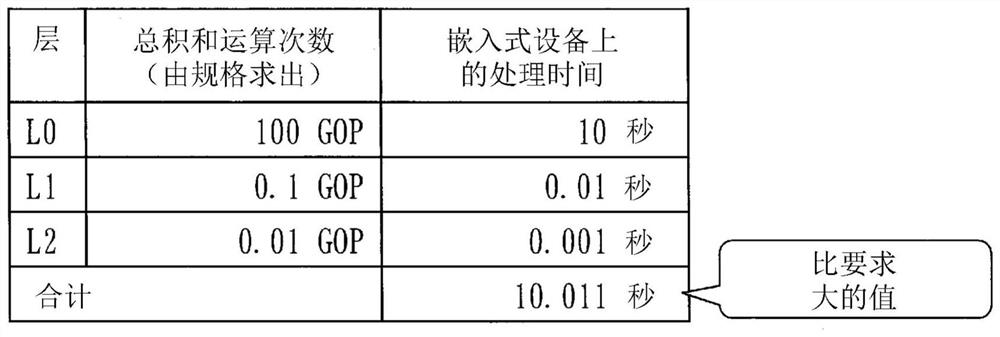

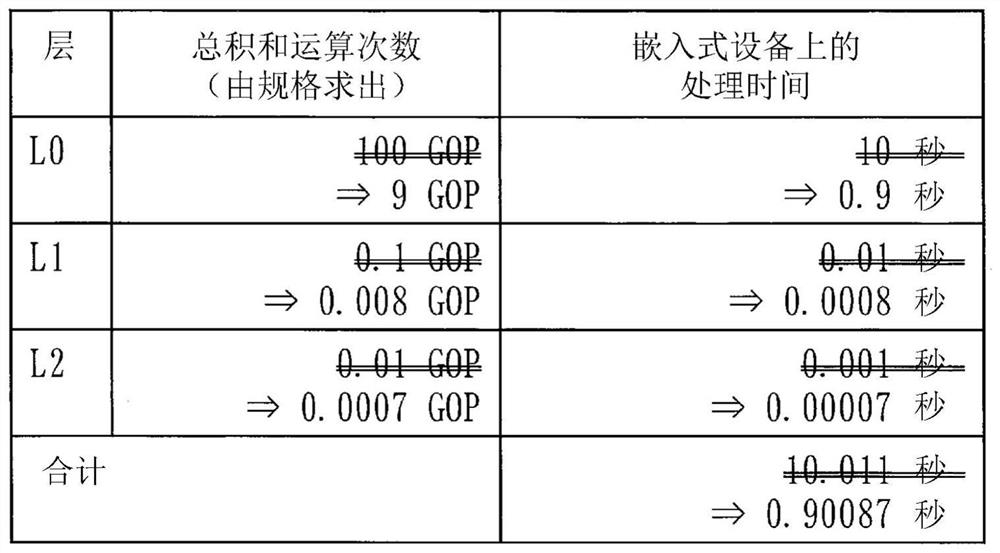

[0035] More specifically, in this embodiment, the layer with the largest amount of computation is extracted from among the layers of the neural network. The amount of computation of the extracted layer is then reduced so that the required processing performance is met. After the amount of computation is reduced, relearning is performed to suppress a decrease in the recognition rate.
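The extraction step in [0035] needs some measure of "amount of computation" per layer. The sketch below assumes that measure is the number of multiply-accumulate operations (MACs), which the source does not specify, and uses hypothetical `ConvLayer` and `DenseLayer` descriptions; it only illustrates how the heaviest layer could be picked out, not the patented implementation.

```python
from dataclasses import dataclass
from typing import Sequence, Union


@dataclass
class ConvLayer:
    """Hypothetical description of a 2D convolution layer."""
    name: str
    in_ch: int
    out_ch: int
    kernel: int  # square kernel size k (k x k)
    out_h: int
    out_w: int

    def macs(self) -> int:
        # Each output element needs in_ch * k * k multiply-accumulates.
        return self.out_h * self.out_w * self.out_ch * self.in_ch * self.kernel * self.kernel


@dataclass
class DenseLayer:
    """Hypothetical description of a fully connected layer."""
    name: str
    in_features: int
    out_features: int

    def macs(self) -> int:
        # One multiply-accumulate per weight.
        return self.in_features * self.out_features


Layer = Union[ConvLayer, DenseLayer]


def largest_layer(layers: Sequence[Layer]) -> Layer:
    """Return the layer with the largest amount of computation (in MACs)."""
    return max(layers, key=lambda layer: layer.macs())


if __name__ == "__main__":
    net = [
        ConvLayer("conv1", in_ch=3, out_ch=16, kernel=3, out_h=32, out_w=32),
        ConvLayer("conv2", in_ch=16, out_ch=32, kernel=3, out_h=16, out_w=16),
        DenseLayer("fc", in_features=32 * 8 * 8, out_features=10),
    ]
    for layer in net:
        print(layer.name, layer.macs())
    print("largest:", largest_layer(net).name)
```

With these example shapes, `conv2` (1,179,648 MACs) outweighs `conv1` (442,368) and `fc` (20,480), so it would be the layer extracted for reduction. Other cost measures, such as measured latency on the target device, could be swapped into `macs()` without changing `largest_layer`.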

[0036] According to the present embodiment, repeating the above steps yields a neural network with a small amount of computation that can be installed in devices with limited resources.
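The repeated extract, reduce, and relearn cycle of [0035] and [0036] can be sketched as a simple loop. This is a minimal illustration under several assumptions not stated in the source: the required processing performance is modeled as a total MAC budget, "reducing computation" is modeled as scaling the heaviest layer's MACs by a fixed ratio (as channel pruning might), and `relearn` is a hypothetical callback standing in for retraining.

```python
from typing import Callable, Dict


def lighten(
    layer_macs: Dict[str, int],
    budget: int,
    reduce_ratio: float = 0.5,
    relearn: Callable[[Dict[str, int]], None] = lambda net: None,
    max_iters: int = 100,
) -> Dict[str, int]:
    """Repeatedly cut the heaviest layer until total MACs fit in `budget`.

    `layer_macs` maps a layer name to its computation in MACs (assumed metric).
    Returns a new dict; the input is not modified.
    """
    net = dict(layer_macs)  # work on a copy
    for _ in range(max_iters):
        # Assumed stand-in for "required processing performance".
        if sum(net.values()) <= budget:
            break
        # Step 1: extract the layer with the largest amount of computation.
        heaviest = max(net, key=net.get)
        # Step 2: reduce its computation (hypothetical fixed-ratio cut,
        # e.g. pruning a fraction of the layer's output channels).
        net[heaviest] = int(net[heaviest] * reduce_ratio)
        # Step 3: relearn to limit the drop in recognition rate (stub callback).
        relearn(net)
    return net


if __name__ == "__main__":
    macs = {"conv1": 442_368, "conv2": 1_179_648, "fc": 20_480}
    print(lighten(macs, budget=800_000))
```

In this example `conv2` is cut twice, leaving `{"conv1": 442368, "conv2": 294912, "fc": 20480}` at 757,760 MACs total. The `max_iters` guard stops the loop even if the budget cannot be reached; a fuller version would also check the recognition rate after each relearning step and stop or back off if it fell too far.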

[0037] ***Procedure***

[0038] Next, the procedure for reducing the weight of the neural network according to the present embodiment will be described with reference to t...
