L1 cache sharing method for GPU

This L1 cache sharing method, applied in the GPU field, addresses problems such as high memory access overhead, unbalanced resource utilization, and the inability to fully utilize L1 cache resources.

Embodiment Construction

[0033] To make the purpose, technical solution, and advantages of the present invention clearer, the technical solution is described clearly and completely below with reference to specific embodiments of the present invention and the corresponding drawings. The described embodiments are only some of the embodiments of the present invention, not all of them. All other embodiments that persons of ordinary skill in the art obtain from the embodiments of the present invention without creative effort fall within the protection scope of the present invention.

[0034] The technical solution provided by an embodiment of the present invention will be described in detail below with reference to the accompanying drawings.

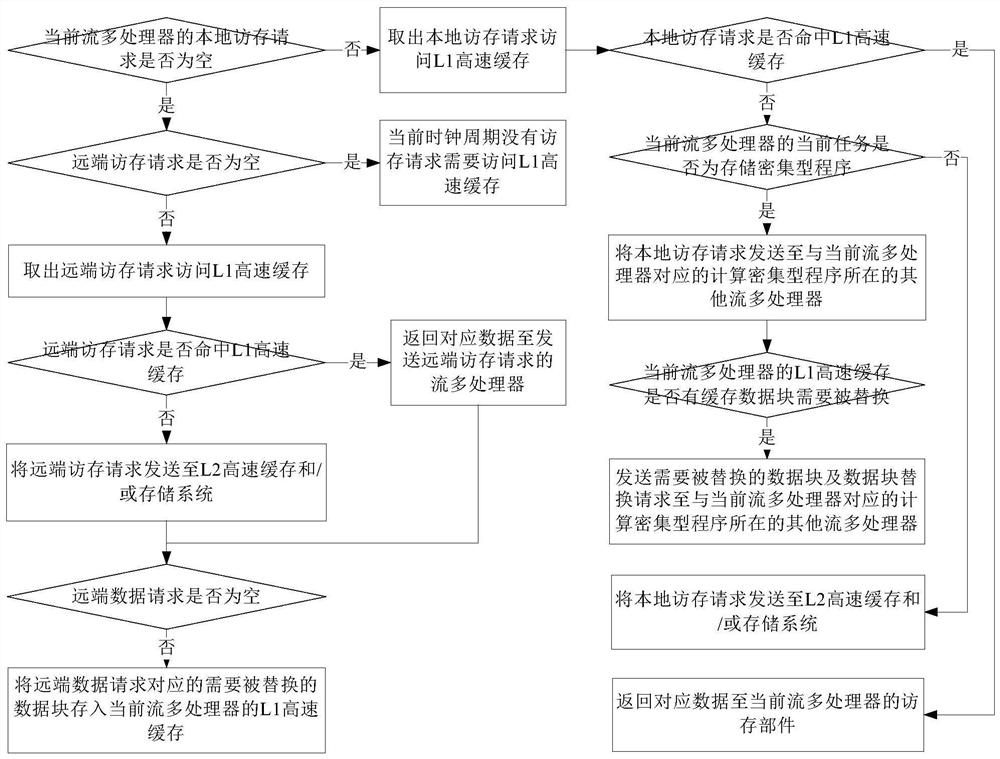

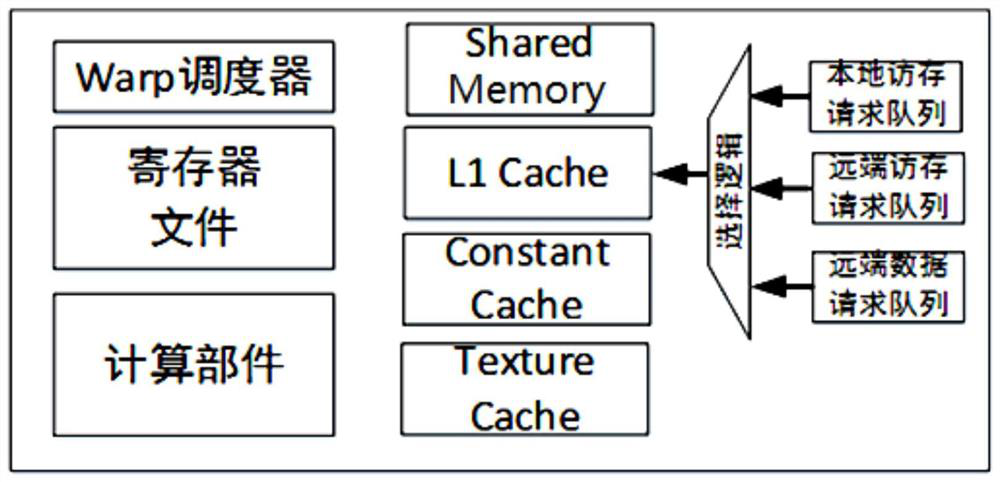

[0035] Referring to Figure 1, an embodiment of the present invention provides a method for sharing the L1 cache of a GPU. The method is used for a spatially multitasking GPU that simultaneously runs a computati...
