Multi-modal emotion recognition method and system

This multi-modal technology, applied in the field of emotion recognition, addresses problems such as ignoring the emotional distinguishability of facial expression images, the low emotional recognizability of expression images, and poor model performance. It achieves effective feature learning, improved performance, and a small amount of computation.


Examples

Embodiment 1

[0057] The purpose of this embodiment is to provide a multi-modal emotion recognition method.

[0058] A method for multimodal emotion recognition, comprising:

[0059] Extract the emotional speech component and the emotional image component in the emotional video, and store them separately;

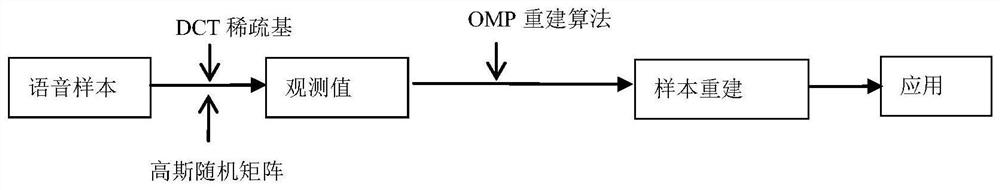

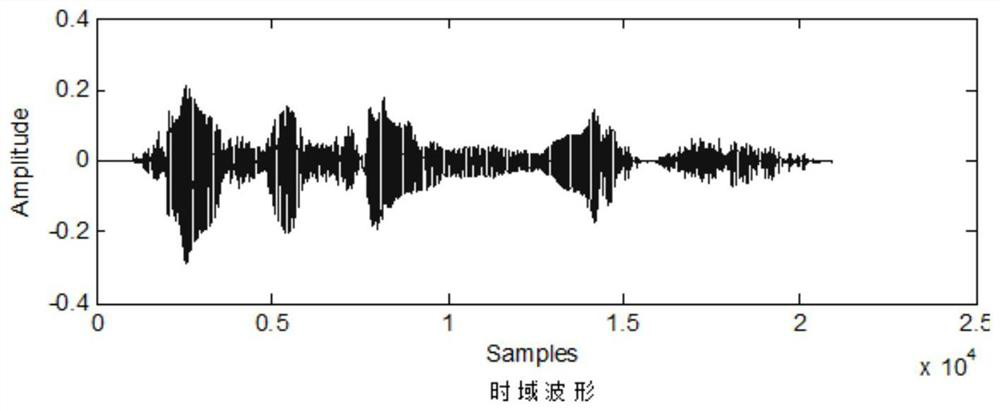

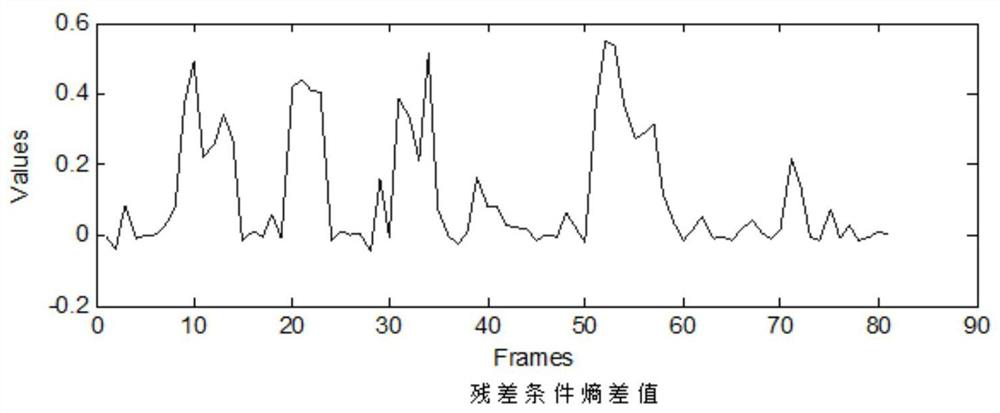

[0060] Perform endpoint detection on the emotional speech component using the emotional speech residual conditional entropy difference endpoint detection method, and obtain the endpoint detection result of each frame of speech;

[0061] Screen the emotional images in the emotional image component based on the endpoint detection result of the emotional speech component, and remove the emotional images that fall within silent segments;

[0062] Perform feature extraction separately on the reconstructed emotional speech component and the filtered emotional image component;

[0063] Fusion is carried out on the features of the emotional speech component and the featur...
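The screening step in [0060] and [0061] can be illustrated with a minimal sketch. It assumes a simple short-time energy threshold as a stand-in endpoint detector; this is not the residual conditional entropy difference method, whose details are not given in this excerpt. The function names, the `threshold` parameter, and the use of image timestamps in seconds are all assumptions made for illustration.

```python
from typing import List, Sequence


def frame_energies(samples: Sequence[float], frame_len: int) -> List[float]:
    """Mean squared amplitude of each non-overlapping frame of frame_len samples."""
    n_frames = len(samples) // frame_len
    return [
        sum(x * x for x in samples[i * frame_len:(i + 1) * frame_len]) / frame_len
        for i in range(n_frames)
    ]


def detect_speech_frames(
    samples: Sequence[float], frame_len: int, threshold: float
) -> List[bool]:
    """Stand-in endpoint detector (assumption): a frame counts as speech when its
    energy exceeds threshold. The patent uses a different, entropy-based method."""
    return [e > threshold for e in frame_energies(samples, frame_len)]


def screen_images(
    image_times: Sequence[float],
    speech_flags: Sequence[bool],
    frame_len: int,
    sample_rate: int,
) -> List[int]:
    """Return the indices of images whose timestamp (seconds) lies in a speech frame.
    Images that map to silent frames, or past the end of the audio, are dropped."""
    kept = []
    for idx, t in enumerate(image_times):
        frame = int(t * sample_rate) // frame_len
        if frame < len(speech_flags) and speech_flags[frame]:
            kept.append(idx)
    return kept


# Toy input: 8 samples per second, 4 samples per frame, so each frame spans 0.5 s.
samples = [0.0] * 4 + [0.5] * 4 + [0.0] * 4
flags = detect_speech_frames(samples, frame_len=4, threshold=0.01)
kept = screen_images([0.1, 0.6, 0.9, 1.2], flags, frame_len=4, sample_rate=8)
print(flags)  # [False, True, False]
print(kept)   # [1, 2]
```

In this toy input only the middle half-second contains energy, so the images at 0.6 s and 0.9 s are kept and those at 0.1 s and 1.2 s are removed as belonging to silent segments.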

Embodiment 2

[0101] The purpose of this embodiment is to provide a multi-modal emotion recognition system.

[0102] A multimodal emotion recognition system, comprising:

[0103] A data acquisition module, which is used to extract the emotional speech component and the emotional image component in the emotional video, and store them separately;

[0104] An endpoint detection module, which is used to perform endpoint detection on the emotional speech component using the emotional speech residual conditional entropy difference endpoint detection method, and obtain the endpoint detection result of each frame of speech;

[0105] An image screening module, which is used to screen the emotional images in the emotional image component based on the endpoint detection result of the emotional speech component, and remove the emotional images that fall within silent segments;

[0106] A feature extraction module, which is used to extract the features of the reconstructed emotional speec...
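One way the four modules named in [0103] to [0106] might be wired together is sketched below. The class name `EmotionRecognitionFrontEnd`, its field names, and the callable signatures are hypothetical and chosen only for illustration; any modules described after the truncated [0106] are not modeled.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple


@dataclass
class EmotionRecognitionFrontEnd:
    """Chains the four modules from [0103]-[0106]. Every callable is a
    hypothetical placeholder supplied by the caller."""
    acquire: Callable[[str], Tuple[Any, List[Any]]]        # video path -> (speech, images)
    detect_endpoints: Callable[[Any], List[bool]]          # speech -> per-frame speech flags
    screen_images: Callable[[List[Any], List[bool]], List[Any]]
    extract_features: Callable[[Any, List[Any]], Tuple[Any, Any]]

    def run(self, video_path: str) -> Tuple[Any, Any]:
        speech, images = self.acquire(video_path)
        flags = self.detect_endpoints(speech)
        kept = self.screen_images(images, flags)
        # The source refers to a "reconstructed" speech component; how it is
        # reconstructed is not shown in this excerpt, so speech is passed unchanged.
        return self.extract_features(speech, kept)


# Usage with trivial stub modules (illustrative values only).
front_end = EmotionRecognitionFrontEnd(
    acquire=lambda path: ([0.0, 0.9, 0.0], ["img0", "img1", "img2"]),
    detect_endpoints=lambda speech: [abs(x) > 0.5 for x in speech],
    screen_images=lambda images, flags: [im for im, f in zip(images, flags) if f],
    extract_features=lambda speech, images: (len(speech), len(images)),
)
result = front_end.run("example.mp4")
print(result)  # (3, 1)
```

Passing each module in as a callable keeps the stages independent, so a different endpoint detector or feature extractor can be substituted without touching the others.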

Embodiment 3

[0110] In this embodiment, a method for detecting the working status of customer service personnel in a call center is provided, and the detection method utilizes the above-mentioned multi-modal emotion recognition method.

[0111] When handling problems, customer service personnel must communicate with customers and answer all kinds of questions without pause. The work is tedious and high-pressure, and customers are sometimes unfriendly. In this working environment, customer service personnel can develop negative emotions. Negative emotions such as disgust or anger seriously affect service quality and are also very detrimental to the mental health of the customer service personnel themselves. The multi-modal emotion recognition method proposed in the present disclosure can be effectively applied to the ...
