Method for predicting resource performance of cloud server based on LSTM-ACO model

This invention relates to cloud server and model prediction technology, with applications in computing models, biological models, neural learning methods, and related fields. It addresses problems such as instability, fluctuating cloud server resource performance, and slow convergence speed.

Embodiment Construction

[0040] The present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

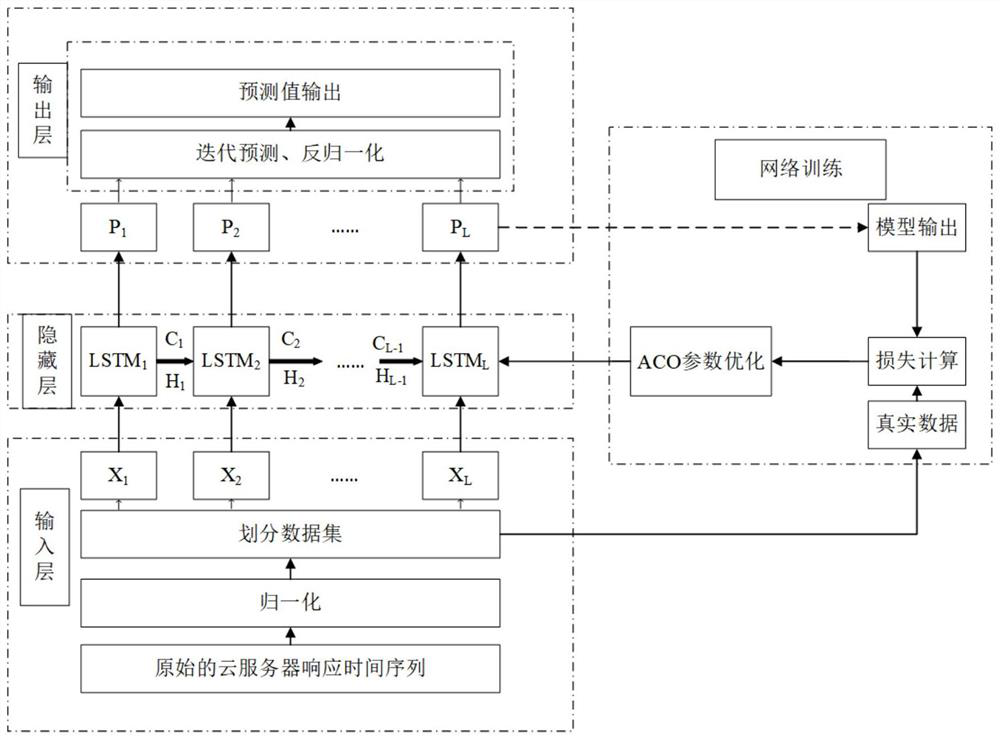

[0041] The method of the present invention for predicting cloud server resource performance based on the LSTM-ACO model comprises the following steps:

[0042] Step 1, collect resource and performance data of the cloud server.

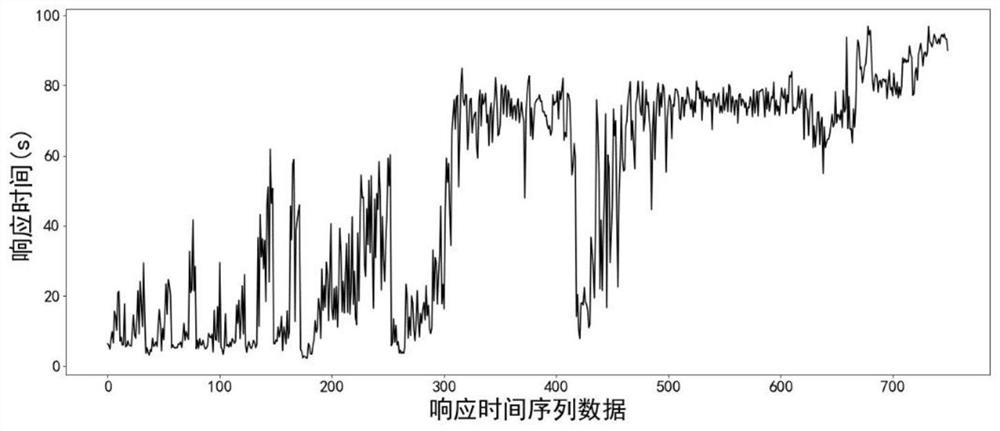

[0043] Step 2, obtain the cloud server resource and performance sequence data, which include: CPU idle rate, available memory, average load, and response time.
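
One way to picture the sequence data of Step 2 is as a time-ordered list of records, each holding the four named metrics. The sketch below is only illustrative: the class name `ServerSample`, the helper `to_matrix`, the units, and the sample values are all assumptions and do not come from the patent text.

```python
from dataclasses import dataclass, astuple
from typing import List


@dataclass(frozen=True)
class ServerSample:
    """One observation of the four metrics named in Step 2 (units are assumed)."""
    cpu_idle_rate: float     # percent of CPU time idle, 0-100 (assumed unit)
    available_memory: float  # megabytes (assumed unit)
    average_load: float      # e.g. a 1-minute load average (assumed)
    response_time: float     # milliseconds (assumed unit)


def to_matrix(samples: List[ServerSample]) -> List[List[float]]:
    """Turn time-ordered samples into rows of [cpu_idle, memory, load, response]."""
    return [list(astuple(s)) for s in samples]


# Made-up values, used only to show the shape of the data.
samples = [
    ServerSample(92.5, 2048.0, 0.35, 120.0),
    ServerSample(88.0, 1990.0, 0.60, 135.0),
    ServerSample(75.5, 1875.0, 1.10, 180.0),
]
matrix = to_matrix(samples)
```

Each row of `matrix` is one time step and each column is one metric, which is the layout the later steps would operate on.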

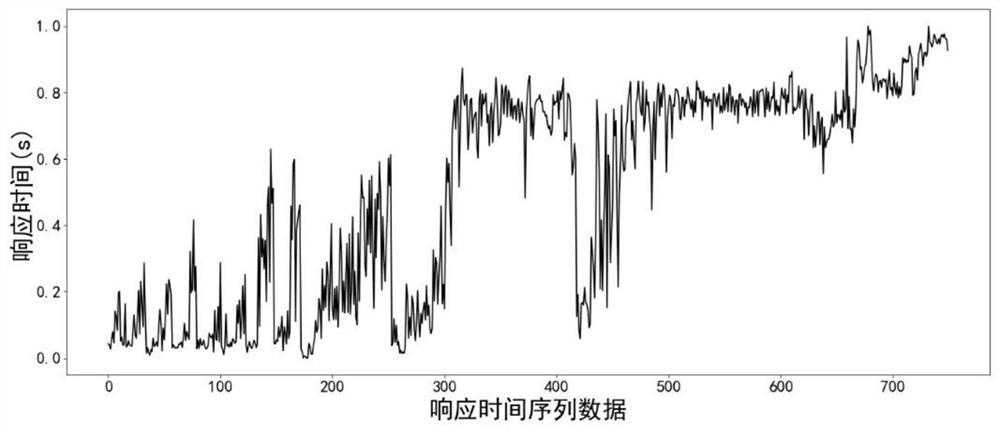

[0044] Step 3, perform preprocessing operations on the sequence data obtained in step 2.
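
The text does not say which preprocessing operations are applied. As a hedged illustration, the sketch below assumes two common choices for time-series prediction: min-max scaling of each metric to the range [0, 1], and slicing the sequence into fixed-length windows with the next response time as the prediction target. The function names, the window length, and the data are hypothetical.

```python
from typing import List, Tuple


def min_max_scale(column: List[float]) -> List[float]:
    """Scale one metric to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]
    return [(v - lo) / (hi - lo) for v in column]


def scale_columns(rows: List[List[float]]) -> List[List[float]]:
    """Apply min-max scaling to every metric (column) independently."""
    scaled_columns = [min_max_scale(list(col)) for col in zip(*rows)]
    return [list(row) for row in zip(*scaled_columns)]


def make_windows(
    rows: List[List[float]], window: int, target_col: int
) -> Tuple[List[List[List[float]]], List[float]]:
    """Pair each run of `window` consecutive rows with the next row's target metric."""
    inputs, targets = [], []
    for start in range(len(rows) - window):
        inputs.append(rows[start:start + window])
        targets.append(rows[start + window][target_col])
    return inputs, targets


# Made-up rows of [cpu_idle, available_memory, average_load, response_time].
raw = [
    [90.0, 2000.0, 0.4, 100.0],
    [80.0, 1900.0, 0.8, 150.0],
    [70.0, 1800.0, 1.2, 200.0],
    [60.0, 1700.0, 1.6, 250.0],
    [50.0, 1600.0, 2.0, 300.0],
]
scaled = scale_columns(raw)
inputs, targets = make_windows(scaled, window=2, target_col=3)
```

With five rows and a window of two, this yields three input windows, each paired with the scaled response time of the following time step.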

[0045] Step 4, use the preprocessed data from step 3 to construct an LSTM model, and use that model to obtain predicted values for the data from step 3.
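
To make the role of the LSTM in Step 4 concrete, the following is a minimal sketch of the forward pass of a single-layer LSTM using the standard gate equations (input, forget, and output gates plus a candidate state). It is not the patent's implementation: the class `TinyLSTM`, the hidden size, the random initialization, and the linear output layer are assumptions, and training (backpropagation through time) is omitted. In practice a deep learning framework would normally be used.

```python
import math
import random
from typing import List


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class TinyLSTM:
    """Untrained single-layer LSTM with a linear output, for illustration only."""

    def __init__(self, n_inputs: int, n_hidden: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.n_hidden = n_hidden
        width = n_inputs + n_hidden  # each gate sees the concatenation [x_t, h_{t-1}]
        # One weight matrix and bias per gate: i=input, f=forget, o=output, g=candidate.
        self.W = {
            gate: [[rng.uniform(-0.1, 0.1) for _ in range(width)] for _ in range(n_hidden)]
            for gate in "ifog"
        }
        self.b = {gate: [0.0] * n_hidden for gate in "ifog"}
        self.w_out = [rng.uniform(-0.1, 0.1) for _ in range(n_hidden)]
        self.b_out = 0.0

    def _gate(self, name: str, z: List[float], activation) -> List[float]:
        """Compute activation(W z + b) for one gate."""
        return [
            activation(sum(w * v for w, v in zip(row, z)) + bias)
            for row, bias in zip(self.W[name], self.b[name])
        ]

    def predict(self, window: List[List[float]]) -> float:
        """Run the window through the cell step by step and map the last h to a scalar."""
        h = [0.0] * self.n_hidden
        c = [0.0] * self.n_hidden
        for x in window:
            z = list(x) + h
            i = self._gate("i", z, sigmoid)
            f = self._gate("f", z, sigmoid)
            o = self._gate("o", z, sigmoid)
            g = self._gate("g", z, math.tanh)
            c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
            h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
        return sum(w * hk for w, hk in zip(self.w_out, h)) + self.b_out


# Two time steps of four scaled metrics (made-up values).
window = [[1.0, 1.0, 0.0, 0.0], [0.75, 0.75, 0.25, 0.25]]
model = TinyLSTM(n_inputs=4, n_hidden=8)
prediction = model.predict(window)
```

Because the weights are random and untrained, `prediction` carries no meaning here; the point is only how the cell state `c` and hidden state `h` are carried across time steps before a value is read out.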

[0046] Step 5, use the ant colony algorithm to optimize the parameters of the LSTM model obtained in step 4, thereby constructing the LSTM-ACO model.
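
The text does not specify which LSTM parameters the ant colony algorithm tunes or how the search is encoded. The sketch below shows one plausible reading under stated assumptions: each ant picks one value per parameter from a discrete candidate list with probability proportional to a pheromone level, pheromone evaporates every iteration, and the best ant of the iteration deposits pheromone on its choices. The parameter names (`hidden_units`, `window`), the function `aco_search`, and the objective `stand_in_loss` are hypothetical; a real objective would train the LSTM and return its validation error, assumed non-negative.

```python
import random
from typing import Callable, Dict, List, Tuple


def aco_search(
    candidates: Dict[str, List[float]],
    objective: Callable[[Dict[str, float]], float],
    n_ants: int = 8,
    n_iterations: int = 20,
    evaporation: float = 0.3,
    seed: int = 0,
) -> Tuple[Dict[str, float], float, List[float]]:
    """Pheromone-guided search over discrete parameter values (objective must be >= 0)."""
    rng = random.Random(seed)
    pheromone = {name: [1.0] * len(values) for name, values in candidates.items()}
    best_choice: Dict[str, float] = {}
    best_loss = float("inf")
    history: List[float] = []

    for _ in range(n_iterations):
        iteration_best = None  # (loss, chosen indices) of the best ant this iteration
        for _ in range(n_ants):
            # Each ant samples one index per parameter, weighted by pheromone.
            picks = {
                name: rng.choices(range(len(values)), weights=pheromone[name])[0]
                for name, values in candidates.items()
            }
            choice = {name: candidates[name][idx] for name, idx in picks.items()}
            loss = objective(choice)
            if iteration_best is None or loss < iteration_best[0]:
                iteration_best = (loss, picks)
            if loss < best_loss:
                best_choice, best_loss = choice, loss

        # Evaporate all trails, then reinforce the iteration-best ant's choices.
        for name in pheromone:
            pheromone[name] = [(1.0 - evaporation) * t for t in pheromone[name]]
        loss, picks = iteration_best
        for name, idx in picks.items():
            pheromone[name][idx] += 1.0 / (1.0 + loss)
        history.append(best_loss)

    return best_choice, best_loss, history


def stand_in_loss(params: Dict[str, float]) -> float:
    """Hypothetical stand-in for 'train the LSTM and return its validation error'."""
    return abs(params["hidden_units"] - 32) / 32 + abs(params["window"] - 10) / 10


candidates = {"hidden_units": [8, 16, 32, 64], "window": [5, 10, 20]}
best, best_loss, history = aco_search(candidates, stand_in_loss)
```

The returned `history` records the best loss seen so far after each iteration, so it never increases. Replacing `stand_in_loss` with a function that trains and evaluates an LSTM would give one possible form of an LSTM-ACO loop, though the patent's actual encoding may differ.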

[0047] Step 6. Use...
