Storage node and system

A storage node and storage system, applied in the field of distributed systems, that reduce data processing delay, avoid movement, and avoid performance bottlenecks.

Examples

Embodiment Construction

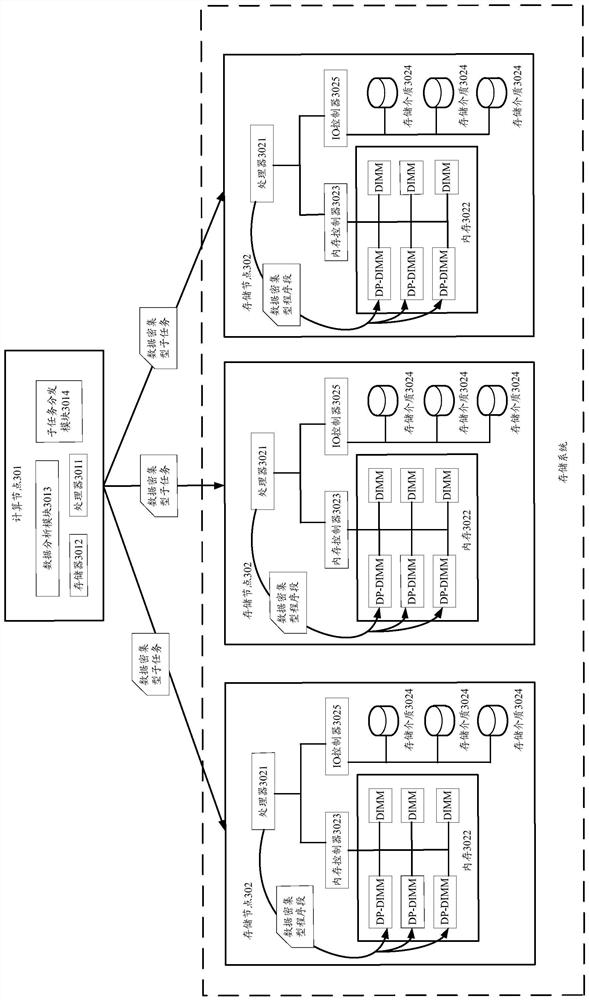

[0030] The terms "first", "second" and "third" in the specification and claims of the present application and the above drawings are used to distinguish different objects, rather than to limit a specific order.

[0031] In the embodiments of the present application, words such as "exemplary" or "for example" are used to indicate an example, illustration, or explanation. Any embodiment or design scheme described as "exemplary" or "for example" in the embodiments of the present application shall not be interpreted as being more preferred or more advantageous than other embodiments or design schemes. Rather, the use of words such as "exemplary" or "for example" is intended to present related concepts in a concrete manner.

[0032] In this application, "at least one" means one or more, and "multiple" means two or more. "And/or" describes the association relationship of associated objects, indicating that three types of relationship are possible. For example, "A and/or B" can mean: A exi...
