Voiceprint recognition model training method, storage medium and computer equipment

A voiceprint recognition model training technology, applied in neural network learning methods, biological neural network models, speech analysis, etc. It addresses problems such as complex cross-channel differences, achieves good learning performance, and improves recall and accuracy.

Detailed Description of Embodiments

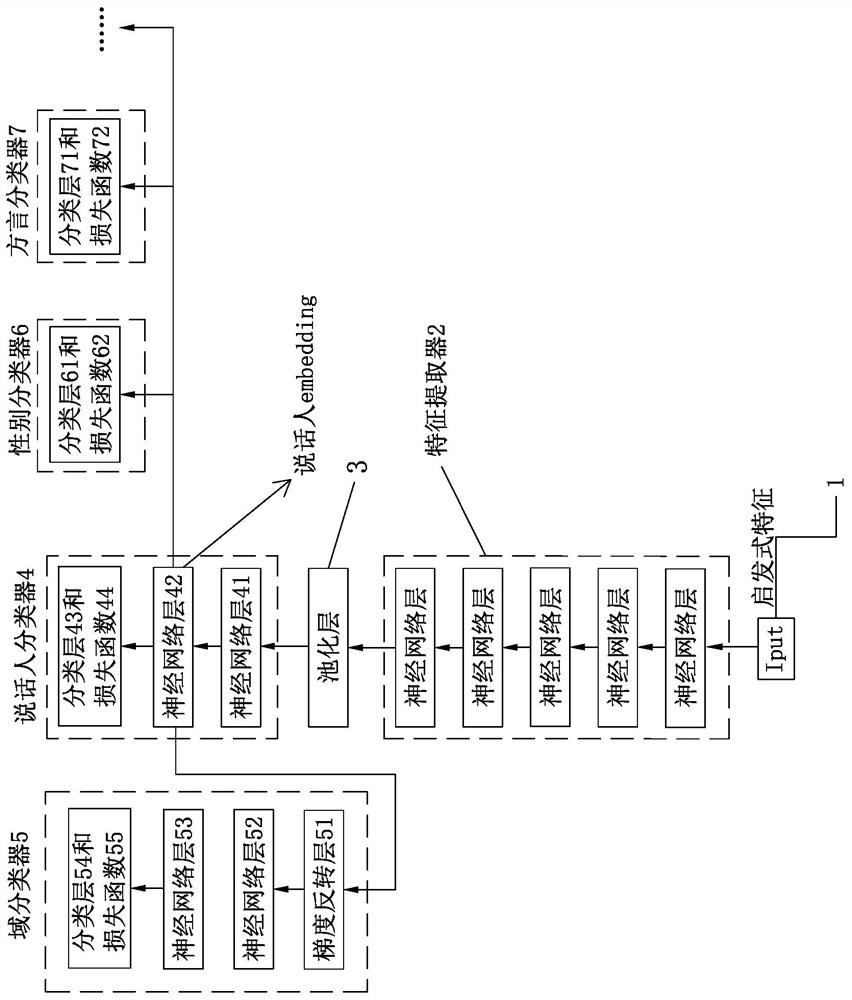

[0041] As shown in Figure 1, the voiceprint recognition model training method of the present invention specifically includes the following steps:

[0042] Step 1. Collect the set of speech samples to be trained

[0043] Collect 100,000 speech samples for each of the two channels to be trained for voiceprint recognition and comparison. On one channel, 1 speech sample is collected per sample object, 100,000 people in total, and each speech sample collected on this channel must be labeled with the sample object's characteristics, such as gender and dialect. On the other channel, 1 speech sample is likewise collected per sample object, 100,000 people in total, and the collected speech samples do not need to carry these labels. When selecting sample objects for the channel that requires labeling, the distribution of gender, dialect and other characteristics should be kept as uniform as possible. For channels that do not need to be labeled, when sel...
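The selection constraint in Step 1 (one sample per sample object, with gender and dialect spread as evenly as possible on the labeled channel) can be sketched as stratified round-robin sampling. This is a minimal illustration under stated assumptions, not the patent's own procedure: the `Speaker` and `Sample` record types, the function names, the round-robin strategy and the `channel/speaker_id.wav` path scheme are all hypothetical. The selection rule for the unlabeled channel is truncated in the source and is not modeled here.

```python
import random
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Speaker:
    """Hypothetical candidate sample object with the attributes to balance."""
    speaker_id: str
    gender: str
    dialect: str


@dataclass
class Sample:
    """Hypothetical speech-sample record; labels is None on the unlabeled channel."""
    speaker_id: str
    channel: str
    audio_path: str
    labels: Optional[Dict[str, str]] = None


def select_balanced(candidates: List[Speaker], n: int, seed: int = 0) -> List[Speaker]:
    """Pick up to n speakers so (gender, dialect) strata are as even as availability allows."""
    rng = random.Random(seed)
    strata: Dict[Tuple[str, str], List[Speaker]] = defaultdict(list)
    for spk in candidates:
        strata[(spk.gender, spk.dialect)].append(spk)
    for group in strata.values():
        rng.shuffle(group)  # random choice within each stratum
    keys = sorted(strata)
    selected: List[Speaker] = []
    # Round-robin: one speaker per non-empty stratum per pass; exhausted
    # strata are skipped, so leftover slots go to the larger strata.
    while len(selected) < n and any(strata[k] for k in keys):
        for k in keys:
            if strata[k] and len(selected) < n:
                selected.append(strata[k].pop())
    return selected


def one_sample_per_speaker(speakers: List[Speaker], channel: str, labeled: bool) -> List[Sample]:
    """Build exactly one sample record per speaker; attach labels only if labeled."""
    return [
        Sample(
            speaker_id=s.speaker_id,
            channel=channel,
            audio_path=f"{channel}/{s.speaker_id}.wav",  # hypothetical path scheme
            labels={"gender": s.gender, "dialect": s.dialect} if labeled else None,
        )
        for s in speakers
    ]


def stratum_counts(speakers: List[Speaker]) -> Dict[Tuple[str, str], int]:
    """Count selected speakers per (gender, dialect) stratum to check uniformity."""
    return dict(Counter((s.gender, s.dialect) for s in speakers))
```

For a pool of candidates, `select_balanced(pool, 100_000)` would return the labeled-channel sample objects, and `stratum_counts` shows how evenly the strata ended up. Round-robin was chosen because it degrades gracefully: when a stratum runs out of candidates, the remaining quota is spread over the strata that still have speakers instead of failing.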