Single-image three-dimensional reconstruction method based on deep learning video supervision

A deep learning and 3D reconstruction technology, applied in the field of 3D reconstruction, that addresses problems such as 3D data being expensive and difficult to obtain, and approaches that are time-consuming, memory-intensive, and sensitive to the input sequence.

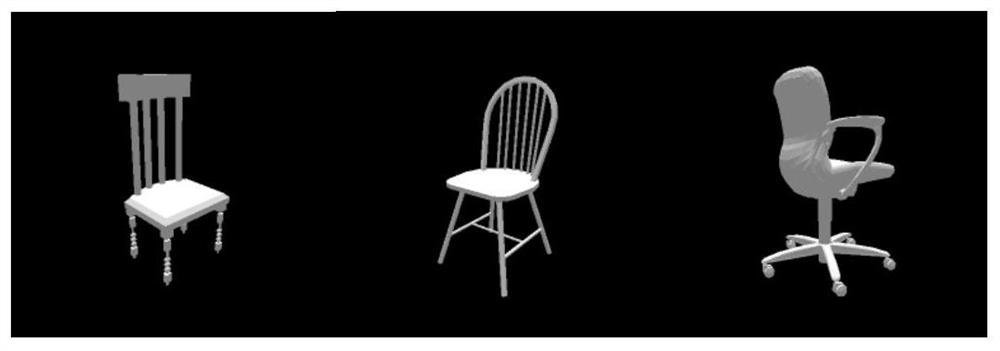

Embodiment Construction

[0059] As shown in Figure 1, the three-dimensional reconstruction method based on deep learning video supervision disclosed by the present invention is implemented according to the following steps:

[0060] 1. Build an object pose prediction module

[0061] Input: a video sequence of the object

[0062] Output: the predicted object pose for each frame
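The source states only that this module maps a video of the object to one predicted pose per frame; it does not say how a pose is parameterized. The sketch below assumes, purely for illustration, that a per-frame predictor returns an azimuth and an elevation in radians, and converts each pair into a 3×3 rotation matrix. The names `predict_video_poses` and `rotation_from_az_el`, the angle parameterization, and the rotation convention are all hypothetical, not taken from the patent.

```python
import math
from typing import Callable, List, Sequence, Tuple

Matrix3 = Tuple[Tuple[float, ...], ...]


def rotation_from_az_el(azimuth: float, elevation: float) -> Matrix3:
    """Hypothetical convention: rotate about y by azimuth, then about x by elevation."""
    ca, sa = math.cos(azimuth), math.sin(azimuth)
    ce, se = math.cos(elevation), math.sin(elevation)
    ry = ((ca, 0.0, sa), (0.0, 1.0, 0.0), (-sa, 0.0, ca))
    rx = ((1.0, 0.0, 0.0), (0.0, ce, -se), (0.0, se, ce))
    # R = Rx @ Ry, written out without external libraries.
    return tuple(
        tuple(sum(rx[i][k] * ry[k][j] for k in range(3)) for j in range(3))
        for i in range(3)
    )


def predict_video_poses(
    frames: Sequence[object],
    predictor: Callable[[object], Tuple[float, float]],
) -> List[Matrix3]:
    """Apply an (assumed) per-frame angle predictor and return one rotation per frame."""
    return [rotation_from_az_el(*predictor(frame)) for frame in frames]
```

Any predictor with the assumed `(azimuth, elevation)` output can be plugged in, e.g. `predict_video_poses(frames, lambda f: (0.0, 0.0))` yields one identity rotation per frame. A rotation matrix is used here only because it is a common, unambiguous pose representation for rendering-based supervision.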

[0063] 1.1 Build the pose prediction network, i.e., construct the object pose prediction network model G

[0064] The object pose prediction network G consists of an encoder and a decoder, and the trainable parameters of the network layers are denoted $\theta_G$. The encoder contains nine 3×3 convolutional layers; each convolutional layer is followed by a batch normalization and pooling layer, with ReLU as the activation function. These are followed by two fully connected layers, also with ReLU activation, which finally produce the encoding of the input. The decoder part contains ...
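The encoder described in [0064] can be traced with a small pure-Python shape and parameter calculator. This is a sketch under stated assumptions, not the patent's implementation: the channel widths, the fully connected sizes, padding of 1 for the 3×3 convolutions, bias-free convolutions, and 2×2 stride-2 pooling after every convolution are all hypothetical, since the source gives none of them. `EncoderSpec` and `summarize_encoder` are illustrative names.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class EncoderSpec:
    """Hypothetical hyperparameters; the source does not specify any of these."""
    in_channels: int = 3
    conv_widths: Tuple[int, ...] = (32, 32, 64, 64, 128, 128, 256, 256, 512)  # nine 3x3 convs
    fc_dims: Tuple[int, ...] = (1024, 1024)  # two fully connected layers


def summarize_encoder(height: int, width: int,
                      spec: Optional[EncoderSpec] = None) -> Tuple[List[str], int, int]:
    """Trace feature shapes through the encoder and count its trainable parameters.

    Returns (layer descriptions, size of the final encoding, parameter count).
    """
    spec = spec or EncoderSpec()
    layers: List[str] = []
    params = 0
    c, h, w = spec.in_channels, height, width
    for out_c in spec.conv_widths:
        # 3x3 conv without bias (batch norm supplies the shift); padding 1 keeps h and w.
        params += c * out_c * 3 * 3
        # Batch norm: one learnable scale (gamma) and shift (beta) per channel.
        params += 2 * out_c
        # Assumed 2x2 pooling with stride 2 halves each spatial side (floor division).
        h, w = h // 2, w // 2
        if h < 1 or w < 1:
            raise ValueError(
                f"input {height}x{width} is too small for {len(spec.conv_widths)} pooling stages")
        layers.append(f"conv3x3 {c}->{out_c} + BN + pool + ReLU -> {out_c}x{h}x{w}")
        c = out_c
    d = c * h * w  # flatten the final feature map before the fully connected layers
    for out_d in spec.fc_dims:
        params += d * out_d + out_d  # weight matrix + bias
        layers.append(f"fc {d}->{out_d} + ReLU")
        d = out_d
    return layers, d, params


if __name__ == "__main__":
    descriptions, code_size, n_params = summarize_encoder(512, 512)
    for line in descriptions:
        print(line)
    print("encoding size:", code_size, "trainable parameters:", n_params)
```

Under these assumptions the nine pooling stages shrink each spatial side by a factor of 2^9 = 512, so a 256×256 frame collapses to zero size before the last block and `summarize_encoder(256, 256)` raises `ValueError`. A real design would pool less often or use larger inputs; the calculator makes that trade-off easy to check.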
