SLAM mapping method and system based on multi-sensor fusion

A technology in the field of multi-sensor fusion and sensing, applied in radio-wave measurement systems, satellite radio-beacon positioning systems, instruments, etc. It addresses the problems of large errors and low precision.


Examples

Embodiment 1

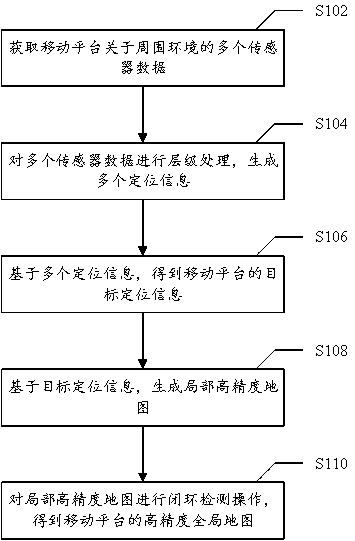

[0027] Figure 1 is a flowchart of a SLAM mapping method based on multi-sensor fusion provided according to an embodiment of the present invention; the method is applied to a server. As shown in Figure 1, the method specifically includes the following steps:

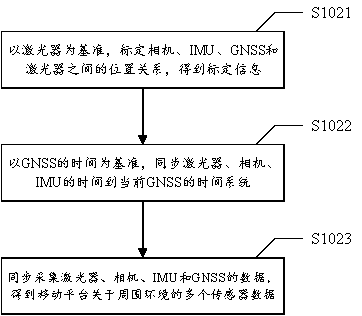

[0028] Step S102: acquire multiple types of sensor data about the environment surrounding the mobile platform; the sensor data include point cloud data, image data, IMU data, and GNSS data.

[0029] Specifically, point cloud information of the surrounding environment is collected by the laser (lidar) to obtain point cloud data; image information is collected by the camera to obtain image data; the angular velocity and acceleration of the mobile platform are measured by the IMU to obtain IMU data; and the absolute latitude and longitude coordinates at each moment are obtained through GNSS to obtain GNSS data.
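The four data streams described in [0029] can be held in simple per-type containers. The following Python sketch is illustrative only: the class and field names (`PointCloudFrame`, `ImageFrame`, `ImuSample`, `GnssFix`, `SensorBundle`, `timestamp`) and the units noted in the comments are assumptions, not taken from the patent. It merely records what each sensor contributes.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class PointCloudFrame:
    """Laser (lidar) scan of the surroundings (hypothetical container)."""
    timestamp: float
    points: List[Tuple[float, float, float]]  # (x, y, z) in the sensor frame


@dataclass
class ImageFrame:
    """Camera image (hypothetical container); pixels kept as a raw byte buffer."""
    timestamp: float
    width: int
    height: int
    data: bytes


@dataclass
class ImuSample:
    """IMU reading; units assumed here: rad/s for angular velocity, m/s^2 for acceleration."""
    timestamp: float
    angular_velocity: Tuple[float, float, float]
    acceleration: Tuple[float, float, float]


@dataclass
class GnssFix:
    """Absolute position at one moment, as latitude/longitude in degrees (assumed)."""
    timestamp: float
    latitude: float
    longitude: float


@dataclass
class SensorBundle:
    """All sensor data acquired in Step S102 (hypothetical grouping)."""
    point_clouds: List[PointCloudFrame] = field(default_factory=list)
    images: List[ImageFrame] = field(default_factory=list)
    imu: List[ImuSample] = field(default_factory=list)
    gnss: List[GnssFix] = field(default_factory=list)

    def is_complete(self) -> bool:
        """True when each of the four sensor types has at least one sample."""
        return all([self.point_clouds, self.images, self.imu, self.gnss])
```

Attaching a timestamp to every sample is a design choice assumed here, since fusing asynchronous sensors generally requires aligning them in time; the excerpt itself does not say how synchronization is handled.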

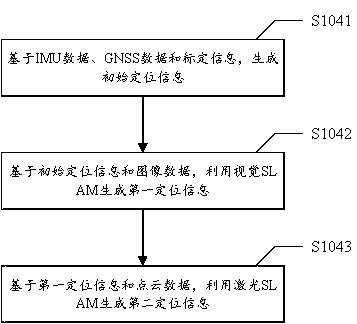

[0030] Step S104: perform hierarchical processing on the multiple sensor data to genera...

Embodiment 2

[0095] Figure 6 is a schematic diagram of a SLAM mapping system based on multi-sensor fusion provided according to an embodiment of the present invention; the system is applied to a server. As shown in Figure 6, the system includes an acquisition module 10, a hierarchical processing module 20, a positioning module 30, a first generation module 40, and a second generation module 50.

[0096] Specifically, the acquisition module 10 is configured to acquire multiple types of sensor data about the environment surrounding the mobile platform; the sensor data include point cloud data, image data, IMU data, and GNSS data.

[0097] The hierarchical processing module 20 is configured to perform hierarchical processing on the multiple sensor data to generate multiple pieces of positioning information, where each type of sensor data corresponds to one piece of positioning information.

[0098] The positioning module 30 is configured to obtain target positioning information of the mobile platform based o...
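The one-to-one correspondence stated in [0097], where each type of sensor data yields exactly one piece of positioning information, can be sketched as follows. This is a minimal Python illustration under stated assumptions: the excerpt does not specify how each layer computes its positioning result, so the per-sensor estimators are hypothetical placeholders, and the names `Pose2D`, `hierarchical_process`, and the sensor keys are invented for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class Pose2D:
    """Hypothetical positioning result: planar position (m) and heading (rad)."""
    x: float
    y: float
    yaw: float


# Hypothetical type: turns one sensor type's data into one positioning result.
Estimator = Callable[[Any], Pose2D]


def hierarchical_process(
    sensor_data: Dict[str, Any], estimators: Dict[str, Estimator]
) -> Dict[str, Pose2D]:
    """Run one estimator per sensor type so each input yields exactly one result."""
    missing = set(sensor_data) - set(estimators)
    if missing:
        raise KeyError(f"no estimator registered for: {sorted(missing)}")
    return {name: estimators[name](data) for name, data in sensor_data.items()}


if __name__ == "__main__":
    def passthrough(d):
        """Stub estimator: treats its input tuple as an already-computed (x, y, yaw)."""
        return Pose2D(*d)

    estimators = {k: passthrough for k in ("point_cloud", "image", "imu", "gnss")}
    data = {
        "point_cloud": (1.0, 2.0, 0.1),
        "image": (1.1, 2.0, 0.1),
        "imu": (0.9, 2.1, 0.1),
        "gnss": (1.0, 1.9, 0.0),
    }
    results = hierarchical_process(data, estimators)
    print(len(results))  # 4: one positioning result per sensor type
```

Passing the estimators in as a dictionary keeps the one-to-one mapping explicit and lets each layer be replaced independently; how the positioning module 30 then combines these results is not covered by this excerpt.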

