Multi-modal label recommendation model construction method and device based on a multi-level attention mechanism

A construction method based on attention technology, applied in neural learning methods, biological neural network models, neural architectures, etc. It addresses poor recommendation performance and insufficient feature mining, as well as the failure of existing methods to consider both the spatial correlation of image features and the time-series (sequential) correlation of text features. It supports rich application scenarios, meets the needs of real production environments, and has a simple structure.

Examples

Embodiment 1

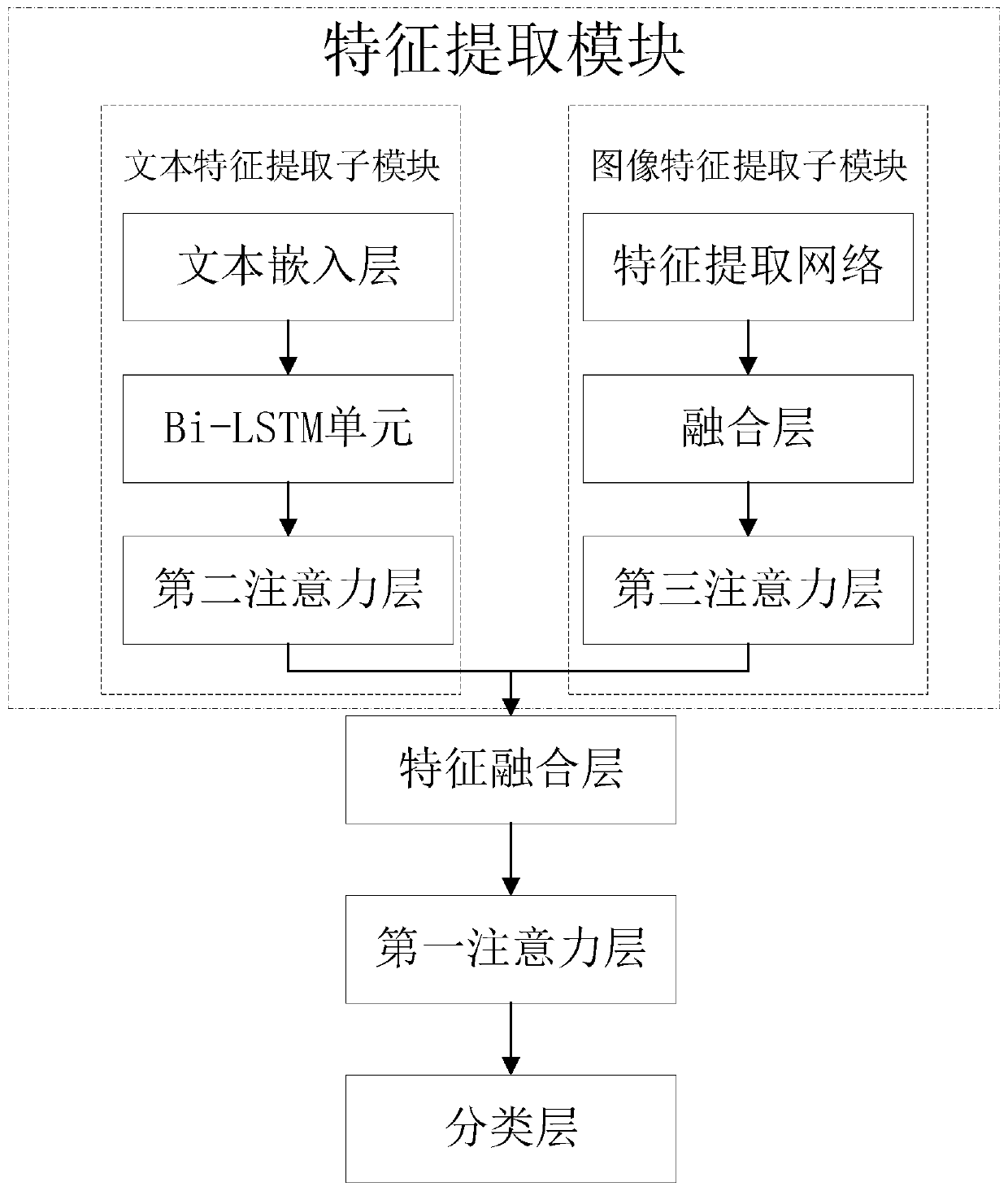

[0058] In this embodiment, a method for constructing a multi-modal label recommendation model based on a multi-level attention mechanism is provided. The multi-level attention model built by this method introduces multi-modal bilinear fusion and a hierarchical attention mechanism. Together these not only fully integrate the multi-modal features but also mine more useful information from them, which improves recommendation performance.
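Bilinear fusion can be illustrated with a minimal sketch. It assumes a plain full bilinear form in which output component k equals x^T W_k y, for an image feature vector x and a text feature vector y; the excerpt does not say which bilinear variant (full, low-rank, or pooled) the model actually uses. The function name `bilinear_fuse` and the toy weights are hypothetical.

```python
from typing import List

Vector = List[float]
Tensor3 = List[List[List[float]]]  # shape (k, m, n)


def bilinear_fuse(x: Vector, y: Vector, weights: Tensor3) -> Vector:
    """Fuse an image vector x (length m) with a text vector y (length n).

    Hypothetical sketch: each output component k is x^T W_k y, so every
    image feature interacts multiplicatively with every text feature.
    """
    fused = []
    for w_k in weights:
        # x^T W_k y = sum over i, j of x_i * W_k[i][j] * y_j
        z_k = sum(x[i] * w_k[i][j] * y[j]
                  for i in range(len(x)) for j in range(len(y)))
        fused.append(z_k)
    return fused


# Toy example: slice 0 is the identity (gives the dot product 1*3 + 2*4),
# slice 1 picks out x_0 * y_1.
W = [[[1, 0], [0, 1]], [[0, 1], [0, 0]]]
print(bilinear_fuse([1, 2], [3, 4], W))  # [11, 4]
```

Unlike concatenation, the bilinear form lets every image dimension interact with every text dimension; the cost is a k x m x n weight tensor, which is why practical systems often factorize it.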

[0059] The recommendation model provided by the present invention contains attention layers at two different levels for computing attention factors. The low-level attention layers (the second and third attention layers) compute attention over the single-modality features of the image and the text, respectively, and the high-level attention layer (the first attention layer) computes attention over the fused multi-modal features. The two-level attention ...
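The attention computation performed at each level can be sketched as soft attention pooling: score each feature vector against a context vector, normalize the scores with softmax, and return the weighted sum. This is a minimal sketch assuming dot-product scoring with a learned context vector; the excerpt does not give the exact scoring function, and `attention_pool` and `context` are hypothetical names. Under this assumption the low-level layers would apply it to image-region features and to word features separately, and the high-level layer would apply the same operation to the fused multi-modal features.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(features: List[Vector], context: Vector) -> Vector:
    """Weight each feature vector by softmax(feature . context) and sum them.

    Hypothetical sketch of one attention layer; `context` stands in for a
    learned parameter vector.
    """
    scores = [sum(f_i * c_i for f_i, c_i in zip(f, context)) for f in features]
    alphas = softmax(scores)  # attention factors, summing to 1
    dim = len(features[0])
    return [sum(a * f[d] for a, f in zip(alphas, features)) for d in range(dim)]


# With a zero context vector every feature gets the same weight (0.5 each).
print(attention_pool([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]))  # [0.5, 0.5]
```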

Embodiment 2

[0126] A multi-modal tag recommendation method based on a multi-level attention mechanism, performed in the following steps:

[0127] Step A: obtain a set of data to be recommended, the data including image data and text data;

[0128] Step B: input the data to be recommended into the multi-modal tag recommendation model obtained by the construction method of Embodiment 1, and obtain the recommendation result.
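Turning the model's output into a recommendation result in Step B can be sketched as ranking tags by predicted score and keeping the top k. This assumes the classification layer emits one score per candidate tag and that the result is a top-k list; the excerpt does not say whether top-k or a score threshold is used. The function name, the tags, and the scores are all hypothetical.

```python
from typing import Dict, List


def recommend_top_k(tag_scores: Dict[str, float], k: int) -> List[str]:
    """Return the k tags with the highest predicted scores, best first.

    Hypothetical post-processing step; tag_scores would come from the
    model's classification layer.
    """
    ranked = sorted(tag_scores.items(), key=lambda item: item[1], reverse=True)
    return [tag for tag, _ in ranked[:k]]


scores = {"#food": 0.91, "#travel": 0.35, "#cat": 0.78}  # made-up scores
print(recommend_top_k(scores, 2))  # ['#food', '#cat']
```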

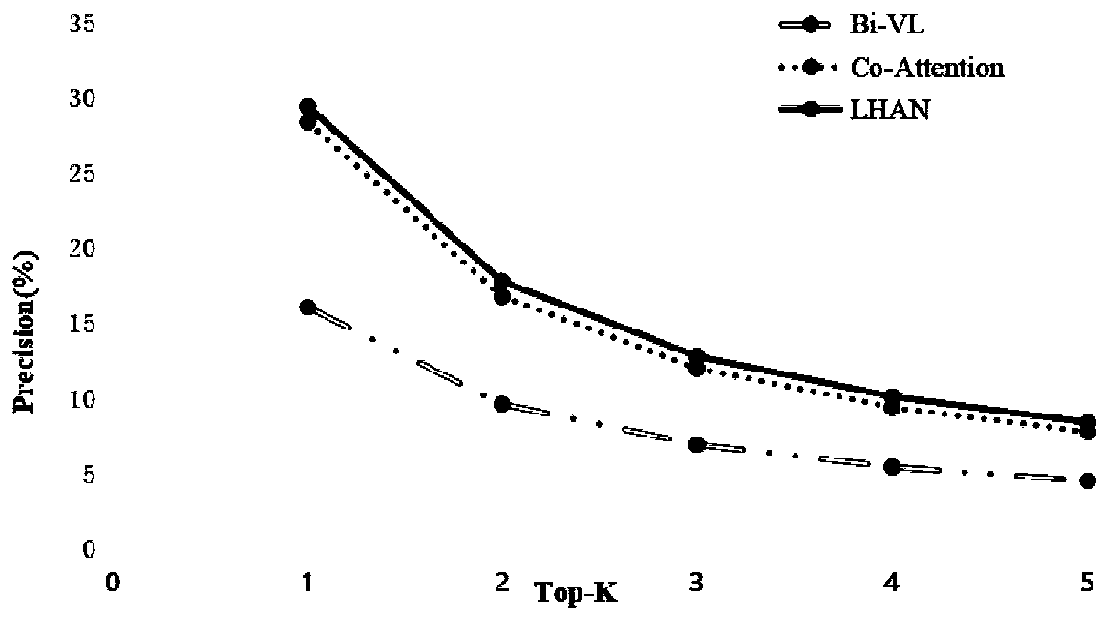

[0129] In this embodiment, the provided label recommendation method is tested. The experiment uses the Linux 7.2.1511 operating system, an Intel(R) Xeon(R) E5-2643 CPU, 251 GB of memory, and two GeForce GTX 1080 Ti graphics cards with 11 GB of video memory each; the deep learning framework is Keras 2.0. The dataset is built from tweets of real users and contains 334,989 pictures and corre...

Embodiment 3

[0146] This embodiment provides a multi-modal label recommendation model construction device based on a multi-level attention mechanism. The device includes a data acquisition module, a data preprocessing module and a network construction module;

[0147] The data acquisition module is used to obtain multiple sets of data and the labels corresponding to each set, yielding a data set and a label set;
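One way to turn the label set into training targets is multi-hot encoding, since a single post can carry several tags. This is a minimal sketch under that assumption; the excerpt does not specify how labels are encoded, and `build_vocab`, `multi_hot`, and the example tags are hypothetical.

```python
from typing import List, Sequence


def build_vocab(label_sets: Sequence[Sequence[str]]) -> List[str]:
    """Collect every distinct label, sorted so indices are deterministic."""
    return sorted({label for labels in label_sets for label in labels})


def multi_hot(labels: Sequence[str], vocab: List[str]) -> List[int]:
    """Encode one sample's labels as a 0/1 vector over the vocabulary."""
    index = {label: i for i, label in enumerate(vocab)}
    vec = [0] * len(vocab)
    for label in labels:
        vec[index[label]] = 1
    return vec


label_sets = [["cat", "pet"], ["beach"]]  # hypothetical labels for two samples
vocab = build_vocab(label_sets)
print(vocab)                         # ['beach', 'cat', 'pet']
print(multi_hot(["cat", "pet"], vocab))  # [0, 1, 1]
```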

[0148] Each set of data includes image data and text data;

[0149] The data preprocessing module is used to preprocess the data set to obtain the preprocessed data set;

[0150] The preprocessing includes unifying the size of the image data;
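Unifying image sizes can be illustrated with nearest-neighbor resizing on a grayscale image stored as nested lists. This is a stdlib-only sketch; the excerpt does not state the target size or interpolation method, and a real pipeline would normally call an image library instead. The function name `resize_nearest` is hypothetical.

```python
from typing import List

Image = List[List[int]]  # rows of grayscale pixel values


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Resize img to out_h x out_w by copying the nearest source pixel.

    Output pixel (r, c) takes source pixel (r * in_h // out_h,
    c * in_w // out_w), so every image ends up with the same shape.
    """
    in_h, in_w = len(img), len(img[0])
    return [[img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


# Upscale a 2x2 image to 4x4: each source pixel becomes a 2x2 block.
for row in resize_nearest([[1, 2], [3, 4]], 4, 4):
    print(row)
# [1, 1, 2, 2]
# [1, 1, 2, 2]
# [3, 3, 4, 4]
# [3, 3, 4, 4]
```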

[0151] The network construction module is used to train the neural network, taking the preprocessed data set as input and the label set as the reference output;
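Training against the label set as reference output needs a loss that compares predictions with the target tags. For multi-label targets, a common choice is mean binary cross-entropy between per-tag probabilities and a multi-hot vector (in Keras 2.0 this corresponds to compiling with `loss='binary_crossentropy'`). The excerpt does not name the loss the authors used, so treat this as an illustrative assumption; `binary_cross_entropy` below is a hypothetical stdlib re-implementation.

```python
import math
from typing import List


def binary_cross_entropy(pred: List[float], target: List[int],
                         eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between probabilities and a 0/1 label vector.

    Hypothetical sketch of a multi-label training loss; predictions are
    clipped to [eps, 1 - eps] so log() never receives 0.
    """
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(pred)


# An uninformative prediction of 0.5 for every tag costs ln 2 per tag.
print(binary_cross_entropy([0.5, 0.5], [1, 0]))  # about 0.693 (ln 2)
```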

[0152] The neural network includes a feature extraction module, a feature fusion layer, a first attention layer, and a classification layer, connected in series;

[0153] The feature extraction m...
