Voice emotion recognition method based on glottal wave signal feature extraction

A speech emotion recognition and signal feature technology, applied in speech analysis, instruments, and related fields. It addresses the curse of dimensionality, multi-dimensionality, and high feature redundancy, and achieves fewer resonance ripples and a better recognition effect.

Embodiment Construction

[0017] The technical solutions of the present invention will be further described below in conjunction with embodiments.

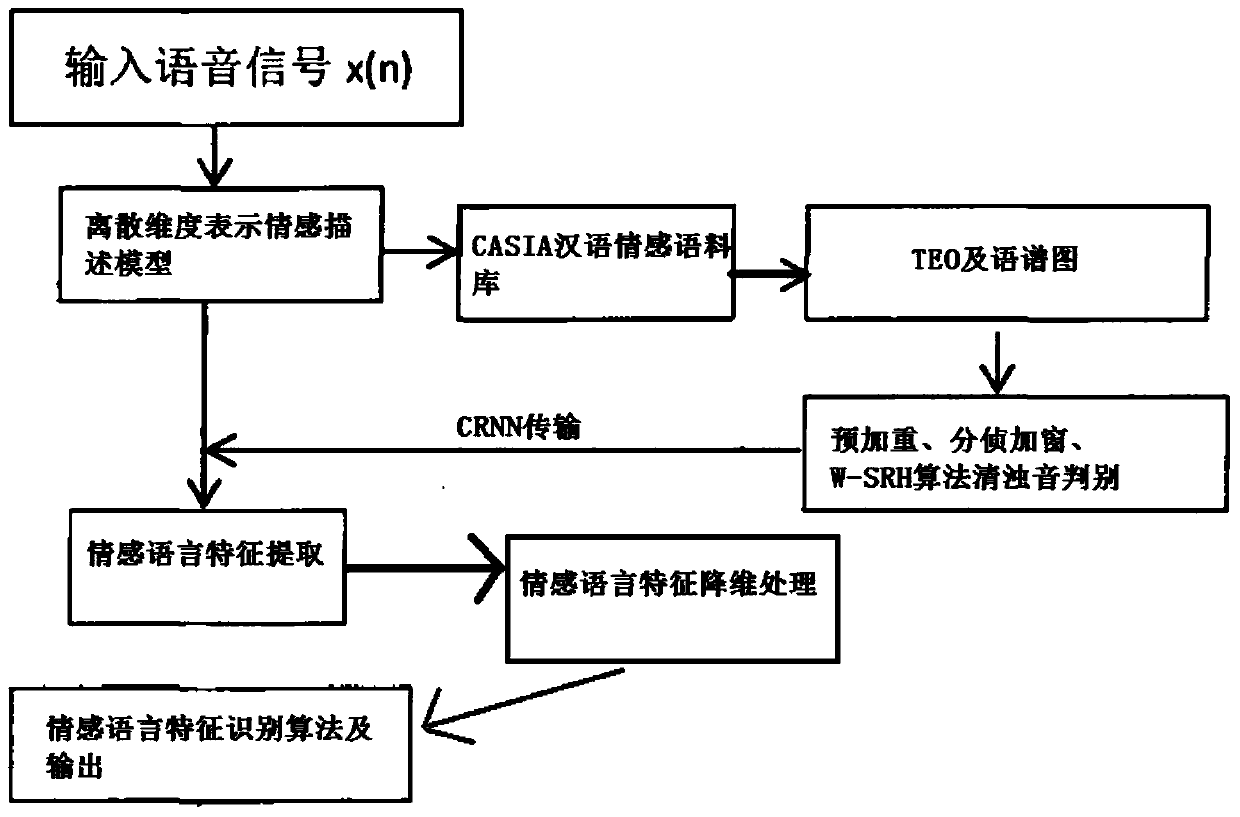

[0018] As shown in Figure 1, the speech emotion recognition method based on glottal wave signal feature extraction in this embodiment of the present invention comprises the following specific steps:

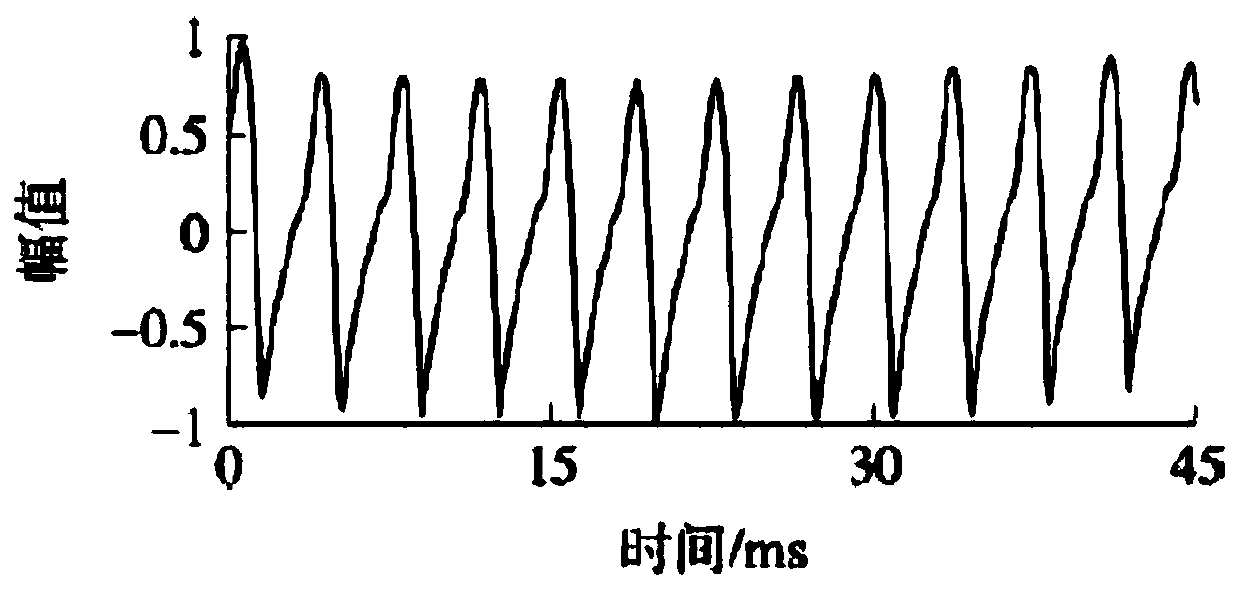

[0019] Step 1: Speech input and front-end processing. After the speech signal is input, emotions are represented with a discrete emotion description model, using the CASIA Chinese emotion corpus. After preliminary front-end processing along the TEO and spectrogram paths, a filter whose transfer function is given by formula 2.1 is applied to pre-emphasize the glottal excitation, and the speech signal is split into data frames of equal length. The frame length is generally 10-50 ms, and the frame overlap is 5-25 ms. The emotional speech signal is then classified into voiced and unvoiced segments using a voiced/unvoiced decision algorithm based on the W-SRH algorithm....
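
The pre-emphasis and framing part of Step 1 can be illustrated with a minimal sketch. Formula 2.1 is not reproduced in this excerpt, so the code assumes the commonly used first-order pre-emphasis filter H(z) = 1 - αz⁻¹, which may differ from the patent's actual filter. The function names, the coefficient α = 0.97, and the 25 ms frame with 10 ms overlap are illustrative choices (the latter two picked from within the stated ranges), not values taken from the patent.

```python
from typing import List


def pre_emphasize(signal: List[float], alpha: float = 0.97) -> List[float]:
    """Apply y[n] = x[n] - alpha * x[n-1].

    Assumption: a standard first-order pre-emphasis filter stands in
    for the patent's formula 2.1, which is not shown in this excerpt.
    """
    if not signal:
        return []
    out = [signal[0]]  # first sample has no predecessor, pass it through
    for n in range(1, len(signal)):
        out.append(signal[n] - alpha * signal[n - 1])
    return out


def split_into_frames(
    signal: List[float],
    sample_rate: int,
    frame_ms: float = 25.0,    # illustrative, within the 10-50 ms range
    overlap_ms: float = 10.0,  # illustrative, within the 5-25 ms range
) -> List[List[float]]:
    """Cut the signal into equal-length, overlapping frames.

    Trailing samples that do not fill a whole frame are dropped.
    """
    frame_len = int(round(sample_rate * frame_ms / 1000.0))
    overlap = int(round(sample_rate * overlap_ms / 1000.0))
    hop = frame_len - overlap  # step between consecutive frame starts
    if frame_len <= 0 or hop <= 0:
        raise ValueError("frame length must be positive and exceed the overlap")
    frames = []
    start = 0
    while start + frame_len <= len(signal):
        frames.append(signal[start:start + frame_len])
        start += hop
    return frames
```

At a 16 kHz sampling rate, these defaults give 400-sample frames that advance by 240 samples. The voiced/unvoiced decision based on W-SRH would then operate on each frame; it is not sketched here because the excerpt does not describe it.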
