A cache memory replacement method and system based on usage heat

A cache memory technology, applied in the fields of memory systems, instruments, and computing, that addresses problems such as the inability to change the replacement strategy, large resource usage, and a low hit rate, with the effects of increased replacement flexibility, lower resource usage, and high generality.

Examples

Embodiment 1

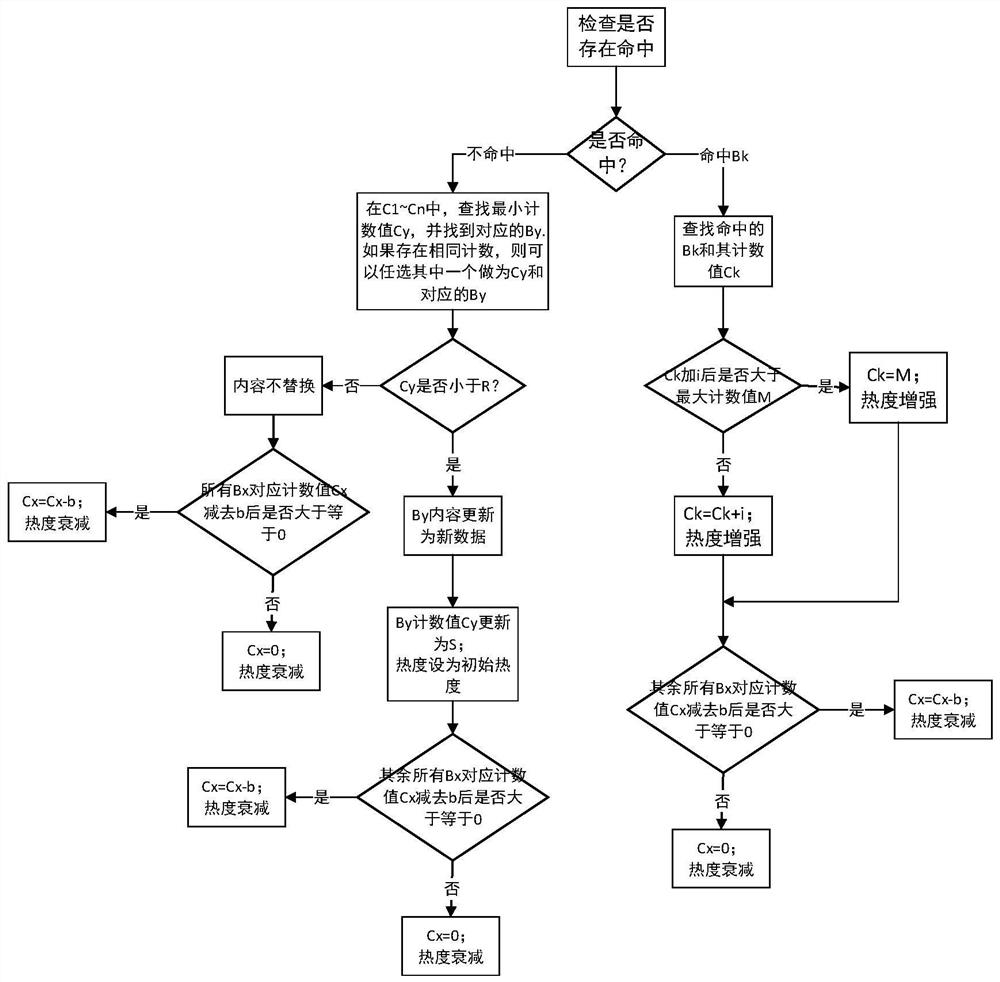

[0040] The present disclosure provides a cache memory replacement method based on usage heat, including:

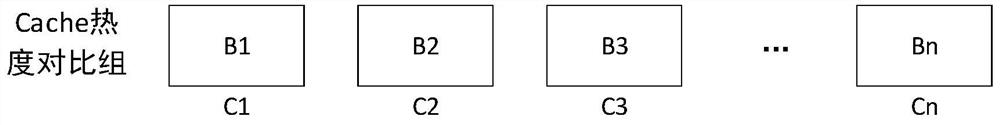

[0041] S1: As shown in Figure 1, the cache memory (Cache) is divided into n Cache blocks, and the n Cache blocks together with their corresponding heat values form a heat comparison group;

[0042] This comparison group contains a total of n blocks for heat comparison, named $B_1, \dots, B_n$, whose corresponding count values are $C_1, \dots, C_n$.
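To make the structure of S1 concrete, here is a minimal Python sketch of a heat comparison group. It assumes each block $B_x$ holds only a data tag and a single integer count $C_x$; the class names `CacheBlock` and `HeatComparisonGroup` are hypothetical, and starting empty blocks at heat 0 is an assumption the source does not state.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class CacheBlock:
    """One Cache block B_x and its heat count C_x (hypothetical layout)."""
    tag: Optional[int] = None  # identifier of the cached data; None = empty
    heat: int = 0              # heat count C_x (starting at 0 is an assumption)


@dataclass
class HeatComparisonGroup:
    """The n Cache blocks B_1..B_n together with their heat values C_1..C_n."""
    n: int
    blocks: List[CacheBlock] = field(init=False)

    def __post_init__(self) -> None:
        if self.n <= 0:
            raise ValueError("n must be positive")
        # Divide the Cache into n blocks, each with its own heat count.
        self.blocks = [CacheBlock() for _ in range(self.n)]

    def heat_values(self) -> List[int]:
        """Return C_1..C_n in block order."""
        return [b.heat for b in self.blocks]


group = HeatComparisonGroup(n=4)
print(group.heat_values())  # [0, 0, 0, 0]
```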

[0043] S2: Define parameter values

[0044] a. Initial heat value S: the initial value given to the count $C_x$ of data block $B_x$ after new data has been placed in $B_x$ by replacement.

[0045] b. Heat decay factor b: the amount by which a block's heat count decreases when the block is not hit; this value can be fixed, or it can vary with the heat value.

[0046] c. Heat enhancement factor i: the amount by which a block's heat count increases when the block is hit; this value can be a fixed value, or it can change with the cha...
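The parameters of S2 can be captured as a small configuration object. The sketch below, in Python, assumes integer heat counts and represents each factor either as a fixed amount or as a function of the current heat value, matching the two options the text allows. The names `HeatParams` and `resolve` are hypothetical, and the `adaptive` rule is purely illustrative, not taken from the source.

```python
from dataclasses import dataclass
from typing import Callable, Union

# A factor is either a fixed step or a function of the block's current heat.
Factor = Union[int, Callable[[int], int]]


def resolve(factor: Factor, heat: int) -> int:
    """Return the step size to apply for the given heat value."""
    return factor(heat) if callable(factor) else factor


@dataclass
class HeatParams:
    initial_heat: int  # S: value assigned to C_x after B_x is refilled
    decay: Factor      # b: subtracted from C_x when B_x is not hit
    enhance: Factor    # i: added to C_x when B_x is hit


# Fixed factors.
fixed = HeatParams(initial_heat=4, decay=1, enhance=2)

# Hypothetical heat-dependent rule: hot blocks decay faster,
# cold blocks gain more on a hit.
adaptive = HeatParams(
    initial_heat=4,
    decay=lambda h: 2 if h >= 8 else 1,
    enhance=lambda h: 3 if h < 4 else 1,
)

print(resolve(fixed.decay, 10), resolve(adaptive.decay, 10))    # 1 2
print(resolve(fixed.enhance, 2), resolve(adaptive.enhance, 2))  # 2 3
```

Making the factors heat-dependent is one way the "replacement flexibility" claimed in the summary could be realized, since the same mechanism can be retuned without changing the replacement logic.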

Embodiment 2

[0059] The present disclosure provides a cache memory replacement system based on usage heat, comprising:

[0060] A block module, used to divide the cache memory (Cache) into n Cache blocks, with the n Cache blocks and their corresponding heat values forming a heat comparison group;

[0061] A data reading module, used to determine, according to a received CPU data read request, whether the requested CPU data exists in the Cache. On a hit, the module finds the hit Cache block and its heat value in the heat comparison group, increases that heat value by the preset heat enhancement factor, attenuates the heat values of the remaining (missed) Cache blocks by the heat decay factor, and reads the CPU data from the hit Cache block;
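The hit path of the data reading module can be sketched in Python as follows. This is a minimal illustration assuming fixed integer factors and parallel lists `tags`/`heat` standing in for $B_x$/$C_x$; the function name `lookup` is hypothetical. Flooring heat at 0 and leaving heat untouched on a miss are assumptions, since the source does not say how counts behave at the bottom of the range or what happens to them on a miss.

```python
from typing import List, Optional


def lookup(tags: List[Optional[int]], heat: List[int], addr_tag: int,
           enhance: int, decay: int) -> Optional[int]:
    """Hit path of the data reading module (sketch).

    tags[x] and heat[x] model block B_x and its count C_x. On a hit, the
    hit block's heat rises by `enhance` (i) and every other block's heat
    falls by `decay` (b), floored at 0 (the floor is an assumption).
    Returns the hit block's index, or None on a miss, in which case heat
    is left unchanged here and the replacement module takes over.
    """
    try:
        hit = tags.index(addr_tag)
    except ValueError:
        return None  # miss
    for x in range(len(heat)):
        if x == hit:
            heat[x] += enhance                   # C_hit += i
        else:
            heat[x] = max(0, heat[x] - decay)    # C_other -= b, not below 0
    return hit


tags = [10, 20, 30, 40]
heat = [4, 4, 1, 0]
print(lookup(tags, heat, 20, enhance=2, decay=1), heat)  # 1 [3, 6, 0, 0]
```

Because every hit both rewards one block and decays all others, a block that stops being accessed steadily loses heat and eventually becomes a replacement candidate.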

[0062] A replacement module, used, on a miss, to find in the heat comparison group the Cache block whose heat value is the smallest and is less than or equal to the replacement threshold, and replace the CPU data to be re...
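The miss path of the replacement module can be sketched in Python under several assumptions, because the source text is truncated at this point. The sketch assumes that the selected block receives the new data and has its heat reset to the initial heat value S, that ties between equally cold blocks go to the lowest index, and that when no block's heat is at or below the threshold nothing is replaced (the data is simply not cached). The function name `replace_on_miss` is hypothetical.

```python
from typing import List, Optional


def replace_on_miss(tags: List[Optional[int]], heat: List[int], addr_tag: int,
                    threshold: int, initial_heat: int) -> Optional[int]:
    """Miss path of the replacement module (sketch).

    Selects the block with the smallest heat value, provided that value is
    <= threshold; min() returns the first minimal index, so ties go to the
    lowest index (an assumption). The new data's tag is stored there and
    its heat is reset to the initial heat S (assumed). Returns the victim
    index, or None if no block qualifies (assumed: data is not cached).
    """
    victim = min(range(len(heat)), key=lambda x: heat[x])
    if heat[victim] > threshold:
        return None  # every block is still "hot": keep the Cache as is
    tags[victim] = addr_tag
    heat[victim] = initial_heat
    return victim


tags = [10, 20, 30, 40]
heat = [3, 6, 0, 0]
print(replace_on_miss(tags, heat, 50, threshold=1, initial_heat=4))  # 2
print(tags, heat)  # [10, 20, 50, 40] [3, 6, 4, 0]
print(replace_on_miss([1, 2, 3, 4], [5, 6, 7, 8], 60, threshold=1, initial_heat=4))  # None
```

The threshold is what distinguishes this from plain least-frequently-used eviction: a cold block is only evicted once its heat has actually decayed to the threshold, so a burst of misses cannot flush blocks that are still in active use.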

Embodiment 3

[0064] The present disclosure provides an electronic device comprising a memory, a processor, and computer instructions stored in the memory and executable on the processor. When the computer instructions are executed by the processor, the steps of the cache memory replacement method are carried out.
