Network training method, image processing method, network, terminal device and medium

A network training method and related network technology, applied in the field of image processing. It addresses problems such as a mask that cannot accurately represent the contour edge of the target object, inaccurate segmentation of the target object, and the resulting poor effect when replacing the image background.

Examples

Embodiment 1

[0043] The following describes the training method for the image segmentation network provided by Embodiment 1 of the present application. Referring to Figure 2, the training method includes:

[0044] In step S101, sample images each containing the target object, a sample mask corresponding to each sample image, and sample edge information corresponding to each sample mask are obtained, wherein each sample mask indicates the image area where the target object is located in the corresponding sample image, and each piece of sample edge information indicates the contour edge of the image area, indicated by the corresponding sample mask, where the target object is located;

[0045] In this embodiment of the present application, a subset of sample images can first be obtained from a data set, and the number of sample images used to train the image segmentation network can then be expanded by mirror inversion, scaling and/or gamma changes, etc., s...
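The expansion step above can be illustrated with a minimal sketch. It assumes images are `H x W x 3` `uint8` NumPy arrays and masks are `H x W` arrays; the function names (`mirror`, `scale_nearest`, `gamma_change`, `augment`) and the default `factor` and `gamma` values are hypothetical and do not come from the application. Mirroring and scaling are applied to the image and its mask together so that the two stay aligned, while the gamma change touches only the image.

```python
import numpy as np

def mirror(image, mask):
    """Flip image and mask left-right together so they stay aligned."""
    return image[:, ::-1].copy(), mask[:, ::-1].copy()

def scale_nearest(image, mask, factor):
    """Resize image and mask by the same factor using nearest-neighbour sampling."""
    h, w = mask.shape
    new_h, new_w = max(1, round(h * factor)), max(1, round(w * factor))
    # Map each output row/column back to its source index.
    rows = np.minimum((np.arange(new_h) / factor).astype(int), h - 1)
    cols = np.minimum((np.arange(new_w) / factor).astype(int), w - 1)
    return image[rows][:, cols], mask[rows][:, cols]

def gamma_change(image, gamma):
    """Apply a gamma curve to the image only; the mask is unaffected."""
    normalized = image.astype(np.float64) / 255.0
    return np.clip(np.round(255.0 * normalized ** gamma), 0, 255).astype(np.uint8)

def augment(image, mask, factor=0.5, gamma=0.8):
    """Return the original pair plus mirrored, scaled and gamma-adjusted pairs."""
    return [
        (image, mask),
        mirror(image, mask),
        scale_nearest(image, mask, factor),
        (gamma_change(image, gamma), mask),
    ]
```

Each augmented mask would still need its own edge information, since mirroring and scaling move the contour.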

Embodiment 2

[0092] The following describes another image segmentation network training method, provided by Embodiment 2 of the present application. Compared with the training method described in Embodiment 1, this training method additionally includes a training process for the edge neural network. Referring to Figure 7, the training method includes:

[0093] In step S301, sample images each containing the target object, a sample mask corresponding to each sample image, and sample edge information corresponding to each sample mask are acquired, wherein each sample mask indicates the image area where the target object is located in the corresponding sample image, and each piece of sample edge information indicates the contour edge of the image area, indicated by the corresponding sample mask, where the target object is located;

[0094] For the specific implementation of step S301, refer to the description of step S101 in Embodiment 1, which is not repeated here. ...
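As an illustration of what "sample edge information corresponding to a sample mask" might look like, the following sketch derives a binary contour map from a binary mask. The excerpt does not state how the application obtains the edge information, so the rule used here (a mask pixel is an edge pixel if any of its four direct neighbours is background) and the function name `mask_to_edge` are assumptions chosen for illustration only.

```python
import numpy as np

def mask_to_edge(mask):
    """Return a binary map marking mask pixels that touch the background.

    Assumption: 4-neighbourhood inner boundary; pixels outside the image
    count as background.
    """
    fg = mask.astype(bool)
    padded = np.pad(fg, 1, mode="constant", constant_values=False)
    # A pixel is interior when it and all four direct neighbours are foreground.
    interior = (
        padded[1:-1, 1:-1]
        & padded[:-2, 1:-1]   # neighbour above
        & padded[2:, 1:-1]    # neighbour below
        & padded[1:-1, :-2]   # neighbour to the left
        & padded[1:-1, 2:]    # neighbour to the right
    )
    return (fg & ~interior).astype(np.uint8)
```

For a solid 3 x 3 square in a 5 x 5 mask, this marks the eight outer pixels of the square and leaves its centre unmarked.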

Embodiment 3

[0125] Embodiment 3 of the present application provides an image processing method. Referring to Figure 9, the image processing method includes:

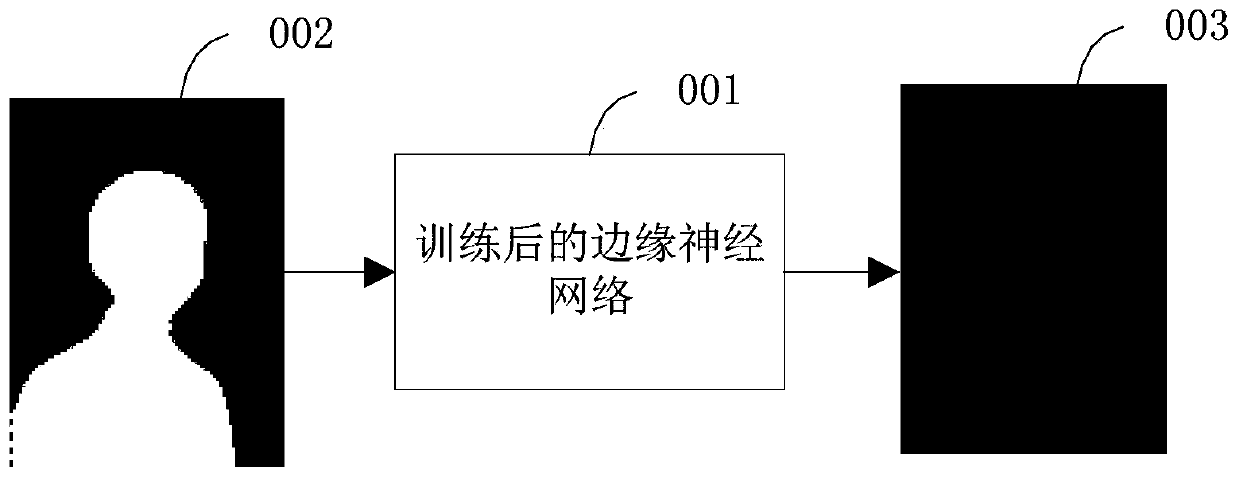

[0126] In step S401, the image to be processed is obtained and input into the trained image segmentation network to obtain the mask corresponding to the image to be processed, wherein the trained image segmentation network is obtained by training with a trained edge neural network, and the trained edge neural network is used to output, from an input mask, the contour edge of the region where the target object indicated by that mask is located;

[0127] Specifically, the trained edge neural network described in step S401 is a neural network trained by the method described in Embodiment 1 or Embodiment 2 above;

[0128] In step S402, the target object included in the image to be processed is segmented based on the mask corresponding to the image to be processed.
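Step S402, together with the background-replacement use case mentioned at the top of this document, can be sketched as follows. The sketch assumes the mask has already been produced by the segmentation network and is an `H x W` float array with values in `[0, 1]`; the function names, the `threshold` parameter and the alpha-blending formula are illustrative assumptions, not details taken from the application.

```python
import numpy as np

def segment_target(image, mask, threshold=0.5):
    """Keep pixels where the mask exceeds the threshold; zero out the rest."""
    keep = mask > threshold
    return np.where(keep[..., None], image, 0).astype(image.dtype)

def replace_background(image, mask, background):
    """Blend the target over a new background, using the mask as per-pixel alpha."""
    alpha = np.clip(mask.astype(np.float64), 0.0, 1.0)[..., None]
    blended = alpha * image + (1.0 - alpha) * background
    return np.round(blended).astype(image.dtype)
```

With this kind of compositing, errors in the mask along the contour show up directly as halos or missing slivers around the target, which is why the accuracy of the mask edge matters for background replacement.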

[0129]...
