Unmanned ship perception fusion algorithm based on deep learning

A perception fusion technique that applies deep learning to unmanned ships. It targets detection-range problems, such as limited or short detection distance, in order to reduce detection cost and enhance robustness.

Embodiment Construction

[0051] The technical solutions of the present invention are described clearly and completely below with reference to the accompanying drawings.

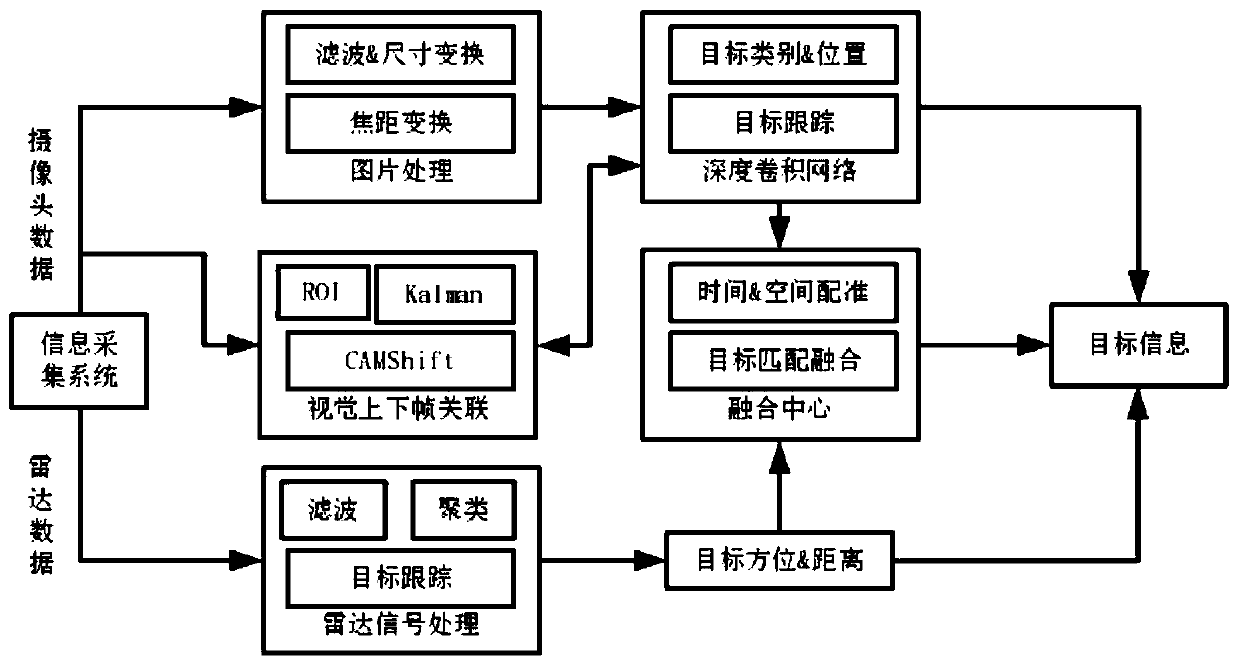

[0052] Figure 1 shows the data flow of the fusion system used by the deep learning-based unmanned ship perception fusion algorithm of the present invention. The function of each module is as follows:

[0053] ① Image processing module: acquires the camera data and, through filtering, resizing, and similar operations, converts each image to a size suitable for the subsequent modules.
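The filtering and size conversion described for the image processing module can be illustrated with a small dependency-free sketch. The patent text does not say which filter or interpolation is used, so the mean (box) filter, the nearest-neighbour resize, and all function names below are assumptions chosen only for illustration.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def box_filter(img: Image, radius: int = 1) -> Image:
    """Smooth with a mean filter (assumed filter type); borders use available pixels."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ys = range(max(0, y - radius), min(h, y + radius + 1))
            xs = range(max(0, x - radius), min(w, x + radius + 1))
            vals = [img[j][i] for j in ys for i in xs]
            out[y][x] = sum(vals) / len(vals)
    return out


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Resize with nearest-neighbour sampling (assumed interpolation)."""
    h, w = len(img), len(img[0])
    return [
        [img[y * h // out_h][x * w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def preprocess(img: Image, out_h: int, out_w: int) -> Image:
    """Filter first, then resize to the input size the detector expects."""
    return resize_nearest(box_filter(img), out_h, out_w)
```

For example, `preprocess(frame, 3, 4)` returns a smoothed 3-row by 4-column image regardless of the size of `frame`. A real system would more likely use an image library for these steps; the sketch only shows the order of operations.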

[0054] ② Deep convolutional network module: takes the output of the image processing module as input and uses a pre-trained model to detect targets.

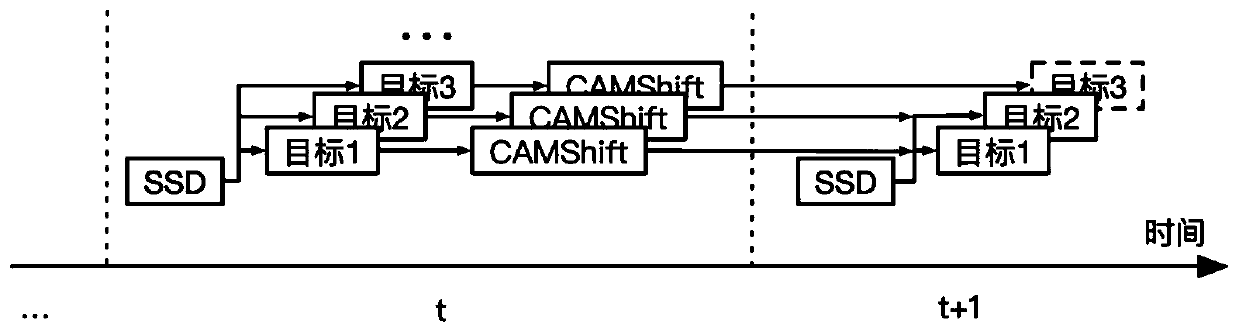

[0055] ③ Visual inter-frame association module: mainly uses CAMShift to help the deep convolutional network track targets across consecutive frames, addressing the problem that the lightweight network tends to lose ...
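To give a rough idea of how a CAMShift-style tracker follows a target between frames, the sketch below runs mean-shift on a probability map and then rescales the window from the mass it found. It is a simplified illustration, not the patent's implementation: the probability map (typically a colour-histogram back-projection), the square-window sizing heuristic, the omission of orientation estimation, and every name in the code are assumptions.

```python
import math
from typing import List, Tuple

Window = Tuple[int, int, int, int]  # x, y, width, height (in pixels)


def camshift_step(prob: List[List[float]], win: Window, max_iter: int = 10) -> Window:
    """Shift `win` to the local centroid of `prob`, then resize it from the mass found."""
    rows, cols = len(prob), len(prob[0])
    x, y, w, h = win
    m00 = cx = cy = 0.0
    for _ in range(max_iter):
        m00 = m10 = m01 = 0.0
        # Accumulate zeroth and first moments over the part of the window inside the map.
        for j in range(max(0, y), min(rows, y + h)):
            for i in range(max(0, x), min(cols, x + w)):
                p = prob[j][i]
                m00 += p
                m10 += p * i
                m01 += p * j
        if m00 == 0.0:
            return win  # no target evidence inside the window; leave it unchanged
        cx, cy = m10 / m00, m01 / m00
        # Re-centre the window on the centroid (mean-shift update).
        nx, ny = round(cx - (w - 1) / 2), round(cy - (h - 1) / 2)
        if (nx, ny) == (x, y):
            break  # converged
        x, y = nx, ny
    # Adapt the window size from the mass (assumed heuristic: side = 2 * sqrt(m00)).
    side = max(1, round(2 * math.sqrt(m00)))
    return (round(cx - (side - 1) / 2), round(cy - (side - 1) / 2), side, side)
```

In a pipeline like the one described, the window could be seeded from the last box reported by the detection network and updated with `camshift_step` on frames where the network reports no detection; that hand-off logic is an assumption here, since the source text is truncated at this point.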
