Sequence labeling method and device and training method of sequence labeling model

A sequence labeling and model training technology, applied in the fields of neural learning methods, biological neural network models, and special data processing applications, which addresses problems such as the inability to improve model labeling performance.


Embodiment approach

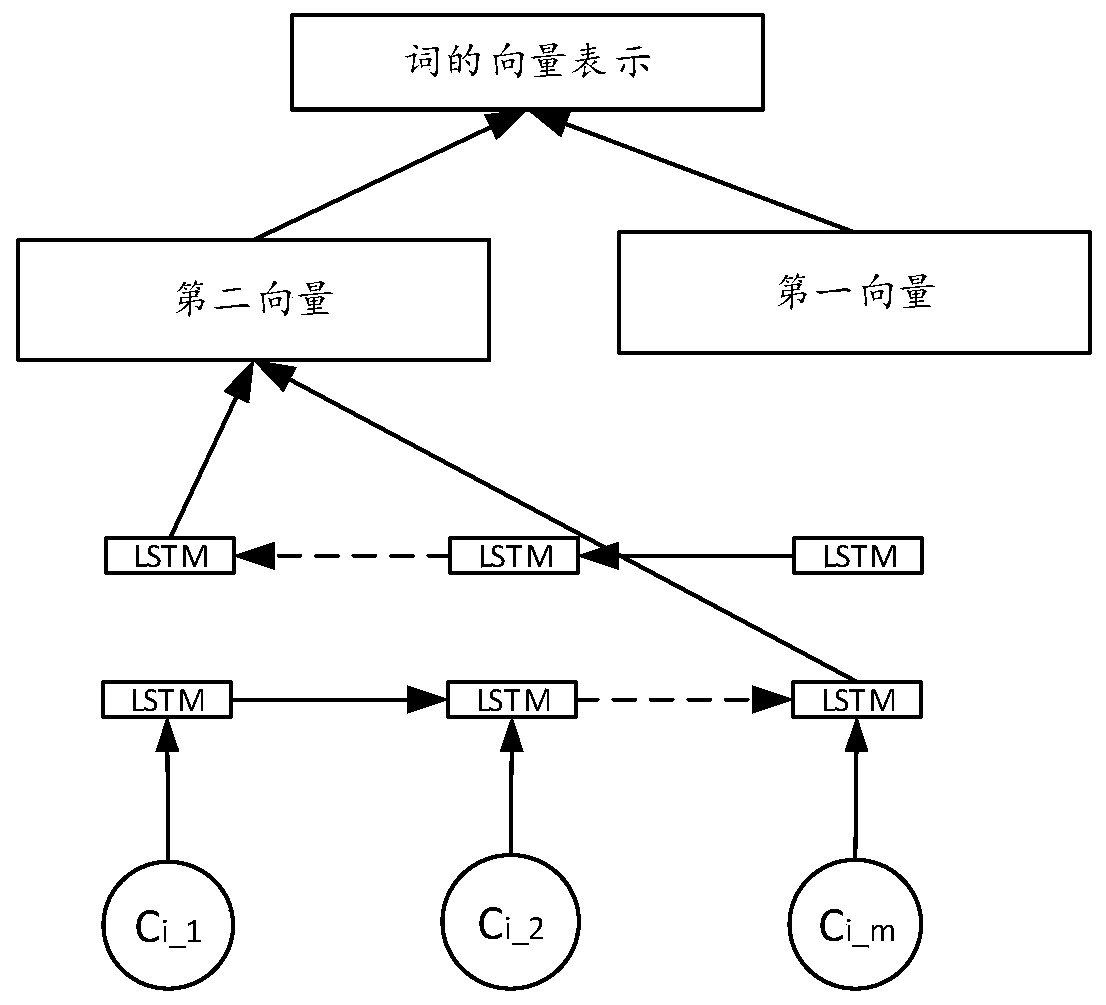

[0052] The following introduces Embodiment 1 of the sequence labeling device provided by the present application; Embodiment 1 includes a sequence labeling model. In this embodiment, a deep neural network is used as the sequence labeling model to avoid the shortcomings of traditional models based on feature engineering, such as a cumbersome feature extraction process and the difficulty of ensuring that feature templates are reasonable. As a specific implementation, this embodiment selects BiLSTM (Bidirectional Long Short-Term Memory) as the base model.
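To illustrate what a BiLSTM computes, the following is a minimal sketch in plain Python, not the patent's implementation. It runs one LSTM left to right and another right to left, then concatenates the two hidden states for each word. The class `LSTMCell`, the helpers `run_lstm` and `bilstm`, the dimensions, and the random untrained weights are all assumptions made for illustration.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class LSTMCell:
    """One LSTM cell with randomly initialised (untrained) weights."""

    def __init__(self, input_size, hidden_size, rng):
        self.hidden_size = hidden_size
        # Four gates (input, forget, candidate, output), one weight row per gate unit.
        self.W = [[rng.uniform(-0.1, 0.1) for _ in range(input_size + hidden_size)]
                  for _ in range(4 * hidden_size)]
        self.b = [0.0] * (4 * hidden_size)

    def step(self, x, h, c):
        z = x + h  # concatenate the input vector and the previous hidden state
        pre = [sum(w * v for w, v in zip(row, z)) + b
               for row, b in zip(self.W, self.b)]
        n = self.hidden_size
        i = [sigmoid(p) for p in pre[0:n]]
        f = [sigmoid(p) for p in pre[n:2 * n]]
        g = [math.tanh(p) for p in pre[2 * n:3 * n]]
        o = [sigmoid(p) for p in pre[3 * n:4 * n]]
        c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
        return h_new, c_new


def run_lstm(cell, inputs):
    """Run the cell over the sequence and return the hidden state at each step."""
    h = [0.0] * cell.hidden_size
    c = [0.0] * cell.hidden_size
    outputs = []
    for x in inputs:
        h, c = cell.step(x, h, c)
        outputs.append(h)
    return outputs


def bilstm(fwd_cell, bwd_cell, inputs):
    """Concatenate forward and backward hidden states for every position."""
    fwd = run_lstm(fwd_cell, inputs)
    bwd = run_lstm(bwd_cell, inputs[::-1])[::-1]  # re-align to the original order
    return [f + b for f, b in zip(fwd, bwd)]


rng = random.Random(0)
# Hypothetical input: 5 words, each already mapped to a 4-dimensional embedding.
embeddings = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(5)]
fwd_cell = LSTMCell(4, 3, rng)
bwd_cell = LSTMCell(4, 3, rng)
states = bilstm(fwd_cell, bwd_cell, embeddings)
```

Each entry of `states` has length 6: the first three values summarise the words up to and including that position, and the last three summarise the words from that position to the end of the sentence.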

[0053] Referring to Figure 1, the sequence labeling model specifically includes:

[0054] Input layer 101: for obtaining the text to be labeled;

[0055] Representation layer 102: for determining the vector representation of each word of the text to be labeled, and sending the vector representation to the first scoring layer 103 and to each of the multiple second scoring layers 104;

[0056] In this embo...
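The data flow between layers 101 to 104 can be sketched as follows. This is only an illustration under stated assumptions: the excerpt does not say what the scoring layers compute or how their outputs are combined, so each scoring layer here is a hypothetical linear map from a word vector to one score per label, and the outputs are simply returned side by side. The representation layer is replaced by a plain embedding lookup for brevity (the embodiment uses BiLSTM), and the class names, whitespace tokenisation, and label sets are all invented for the example.

```python
import random


class ScoringLayer:
    """Hypothetical scoring layer: linear map from a word vector to per-label scores."""

    def __init__(self, dim, labels, rng):
        self.labels = labels
        self.weights = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in labels]

    def score(self, vectors):
        return [[sum(w * v for w, v in zip(row, vec)) for row in self.weights]
                for vec in vectors]


class SequenceLabelingModel:
    def __init__(self, dim, first_labels, second_label_sets, seed=0):
        self.dim = dim
        self.rng = random.Random(seed)
        self.table = {}  # word -> vector, filled lazily
        self.first = ScoringLayer(dim, first_labels, self.rng)       # layer 103
        self.seconds = [ScoringLayer(dim, labels, self.rng)          # layers 104
                        for labels in second_label_sets]

    def input_layer(self, text):
        # Layer 101: obtain the text to be labeled (assumed whitespace-tokenised).
        return text.split()

    def representation_layer(self, words):
        # Layer 102: one vector per word. Stand-in embedding lookup, not a BiLSTM.
        for w in words:
            if w not in self.table:
                self.table[w] = [self.rng.uniform(-1, 1) for _ in range(self.dim)]
        return [self.table[w] for w in words]

    def forward(self, text):
        words = self.input_layer(text)
        vectors = self.representation_layer(words)
        # The same vectors are sent to the first and to every second scoring layer.
        first_scores = self.first.score(vectors)
        second_scores = [layer.score(vectors) for layer in self.seconds]
        return words, first_scores, second_scores


model = SequenceLabelingModel(
    dim=8,
    first_labels=["B", "I", "O"],                         # hypothetical label set
    second_label_sets=[["B", "I", "O"], ["N", "V", "X"]],  # hypothetical label sets
)
words, first_scores, second_scores = model.forward("the cat sat")
```

The point of the sketch is the fan-out: the representation is computed once per word and shared by all scoring layers, so adding another second scoring layer does not require re-encoding the text.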
