Visual emotion label distribution prediction method based on automatic estimation

A visual emotion label distribution prediction technology, applied in the field of deep convolutional neural networks, that addresses problems such as ignoring the connections between labels and achieves a better-performing model.


Embodiment Construction

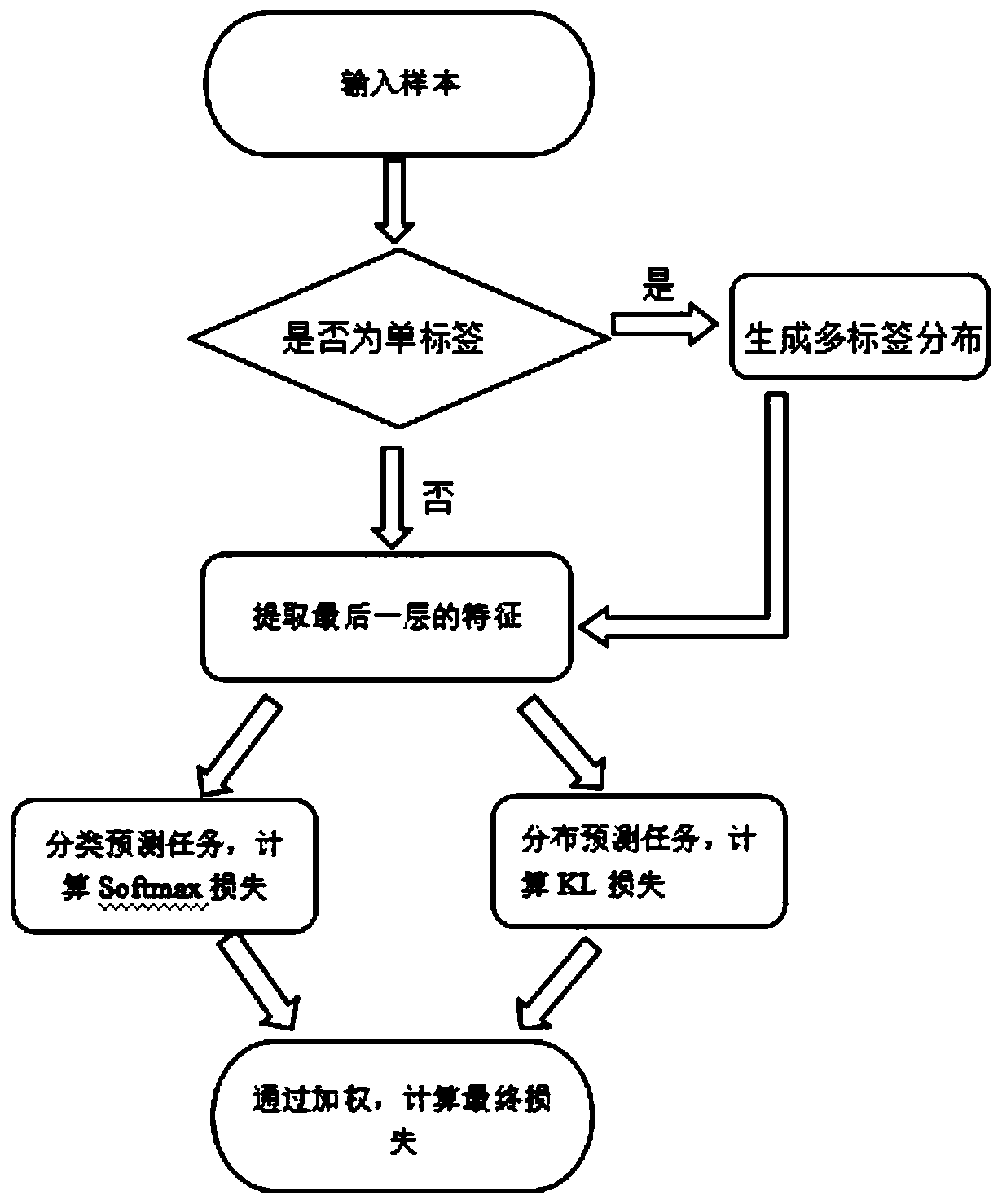

[0031] Refer to Figure 1, which shows the flow chart of the method for jointly learning visual emotion classification and label distribution with a deep convolutional neural network. The steps shown in the figure are:

[0032] a. The pictures are resized, augmented, and otherwise preprocessed before being sent to the model; the original model is pre-trained on the large-scale ImageNet dataset.

[0033] b. For single-label training data, two kinds of weak prior knowledge are used to generate a multi-label distribution. The two kinds of prior knowledge and their calculation principles are:

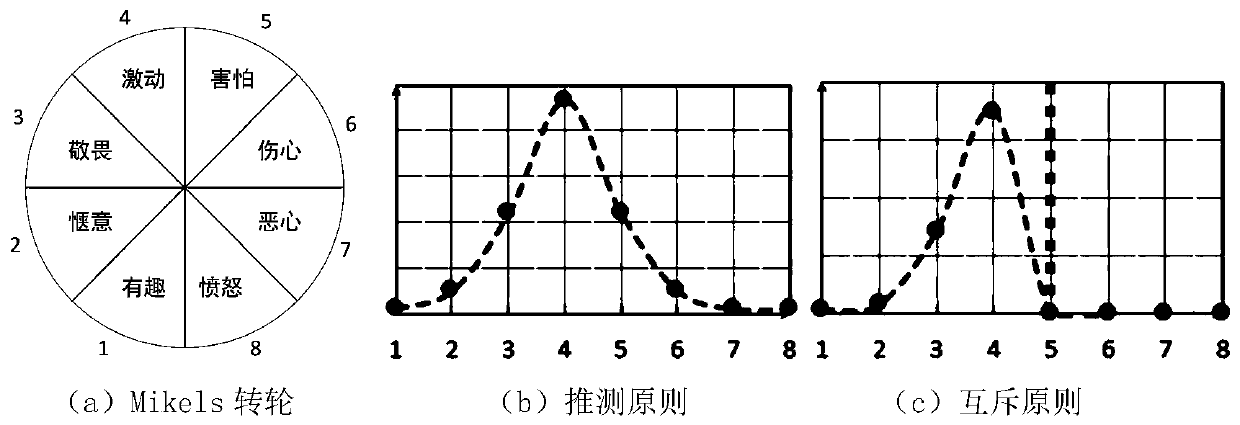

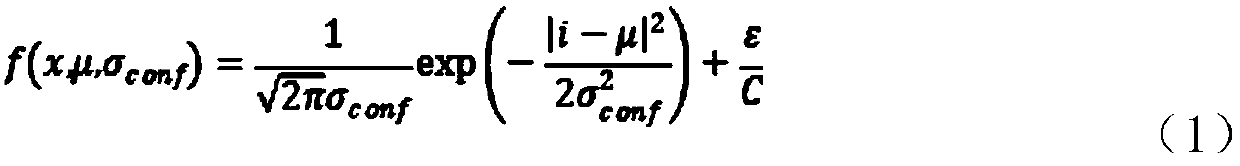

[0034] (1) Inference principle: the distance between two emotions can be measured on Mikels' wheel, and the probability of each related category is calculated with a Gaussian function. A category closer to the original label receives a larger probability and a more distant one a smaller probability, which yields the multi-label distribution of the picture;

[0035](2) The principle of mutual ex...
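The inference principle (1) can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration and is not stated in the text: the ordering of the eight categories in `WHEEL`, the use of a circular step count as the distance, the width parameter `sigma`, and the function names. The sketch only shows how a Gaussian of the wheel distance, normalized to sum to one, turns a single label into a distribution in which closer categories receive larger probabilities.

```python
import math

# Hypothetical wheel ordering, for illustration only; the text does not list it.
WHEEL = ["amusement", "contentment", "awe", "excitement",
         "fear", "sadness", "disgust", "anger"]


def wheel_distance(i, j, n=len(WHEEL)):
    """Circular step count between positions i and j on a wheel of n slots."""
    d = abs(i - j) % n
    return min(d, n - d)


def gaussian_label_distribution(label, sigma=1.0):
    """Spread a single label into a probability distribution over WHEEL.

    sigma is an assumed width parameter: smaller values concentrate the
    probability mass on the original label.
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    k = WHEEL.index(label)
    # Unnormalized Gaussian weight of each category's distance to the label.
    weights = [math.exp(-wheel_distance(k, j) ** 2 / (2.0 * sigma ** 2))
               for j in range(len(WHEEL))]
    total = sum(weights)
    # Normalize so the probabilities sum to one.
    return {name: w / total for name, w in zip(WHEEL, weights)}


if __name__ == "__main__":
    for name, p in gaussian_label_distribution("awe", sigma=1.0).items():
        print(f"{name:12s} {p:.4f}")
```

Under these assumptions the original label always receives the largest probability, and two categories at the same wheel distance receive equal probability. The second principle, which is truncated in the text, is not covered by this sketch.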
