Semantic mapping system based on simultaneous localization and mapping and three-dimensional semantic segmentation

A semantic segmentation and semantic mapping technology in the field of computer vision. It addresses problems such as reduced efficiency and weakened system performance, improving both efficiency and system performance while remaining highly robust.


Embodiment Construction

[0039] The present invention is described in further detail below with reference to the accompanying drawings and specific embodiments:

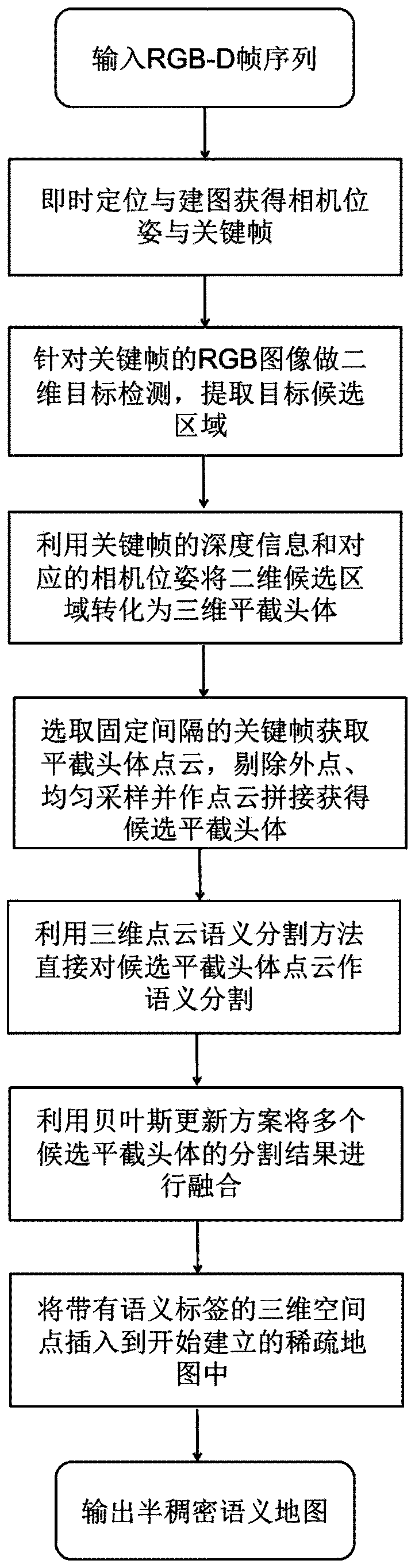

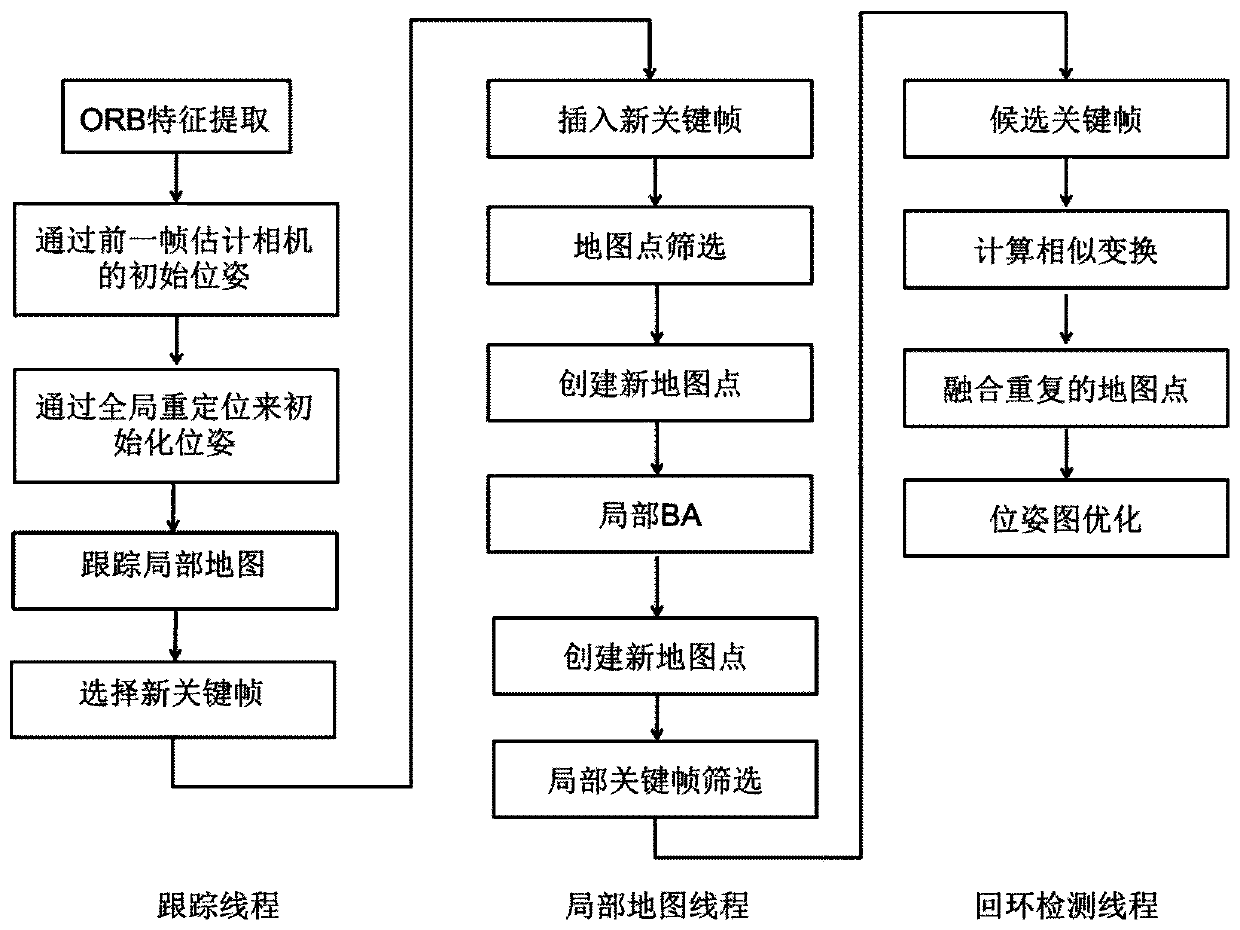

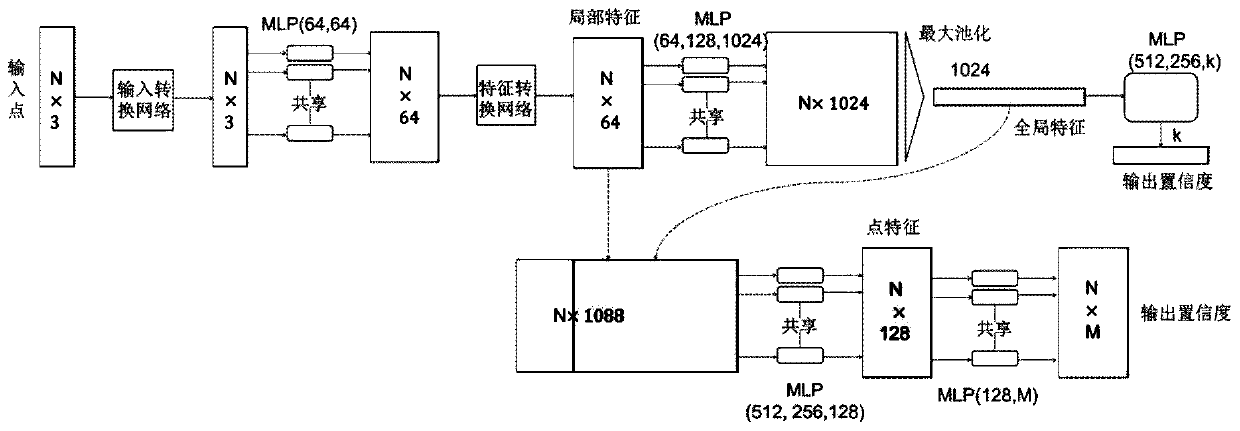

[0040] The present invention provides a semantic mapping system based on simultaneous localization and mapping (SLAM) and three-dimensional semantic segmentation. A feature-point-based sparse mapping system extracts key frames and camera poses. For each key frame, a mature two-dimensional object detection method first extracts regions of interest; inter-frame information (the camera pose) and spatial information (the image depth) are then used to obtain candidate frustums. Each frustum is segmented with a point cloud semantic segmentation method, and a Bayesian update scheme fuses the segmentation results from different frames. The invention aims to make full use of inter-frame and spatial information to improve system performance.
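Two steps of the pipeline in [0040] can be illustrated with a minimal sketch: lifting the pixels of a 2D region of interest into a world-frame frustum point set, and fusing per-frame class probabilities for a map point. The source does not give the exact formulas, so everything here is an assumption made for illustration: a pinhole camera model with intrinsics `fx`, `fy`, `cx`, `cy`, a camera-to-world pose given as `rotation` and `translation`, and a generic recursive Bayes update that treats per-frame predictions as independent. The function names `backproject_roi` and `bayes_fuse` are hypothetical and do not come from the patent.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def backproject_roi(
    depth: Sequence[Sequence[float]],
    box: Tuple[int, int, int, int],
    fx: float, fy: float, cx: float, cy: float,
    rotation: Sequence[Sequence[float]],
    translation: Sequence[float],
) -> List[Point]:
    """Lift pixels inside a 2D detection box into world-frame 3D points.

    Assumes a pinhole camera and a camera-to-world pose (p_w = R p_c + t).
    `box` is (u_min, v_min, u_max, v_max) with exclusive upper bounds.
    """
    u_min, v_min, u_max, v_max = box
    points: List[Point] = []
    for v in range(v_min, v_max):
        for u in range(u_min, u_max):
            z = depth[v][u]
            if z <= 0.0:  # skip pixels with missing or invalid depth
                continue
            # Pinhole back-projection into the camera frame.
            pc = ((u - cx) * z / fx, (v - cy) * z / fy, z)
            # Transform into the world frame using the key frame pose.
            pw = tuple(
                sum(rotation[i][j] * pc[j] for j in range(3)) + translation[i]
                for i in range(3)
            )
            points.append(pw)
    return points


def bayes_fuse(prior: Sequence[float], likelihood: Sequence[float]) -> List[float]:
    """One recursive Bayes step: posterior is proportional to prior * likelihood.

    `prior` is the class distribution stored for a map point; `likelihood`
    is the class distribution predicted for that point in a new key frame.
    """
    unnormalized = [p * l for p, l in zip(prior, likelihood)]
    total = sum(unnormalized)
    if total == 0.0:  # contradictory evidence: keep the previous belief
        return list(prior)
    return [x / total for x in unnormalized]


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    depth_map = [[2.0, 0.0], [2.0, 2.0]]  # one pixel has no depth
    frustum = backproject_roi(depth_map, (0, 0, 2, 2), 1.0, 1.0, 0.0, 0.0,
                              identity, (1.0, 0.0, 0.0))
    print(len(frustum), frustum[0])

    belief = [0.5, 0.5]  # uniform prior over two classes
    for frame_prediction in ([0.8, 0.2], [0.8, 0.2]):
        belief = bayes_fuse(belief, frame_prediction)
    print(belief)
```

Under these assumptions, repeated agreeing observations sharpen the stored label distribution, while a single conflicting frame only shifts it partially. This is one reason a Bayesian fusion can be more robust than overwriting labels frame by frame.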

[0041] The following is based on Ubuntu16.04 and N...
