TOF camera-based hair segmentation method

A TOF camera-based hair segmentation technique, applied in the field of 3D imaging, that achieves high-quality, high-precision segmentation.

Embodiment Construction

[0020] The present invention is further described below with reference to the accompanying drawings.

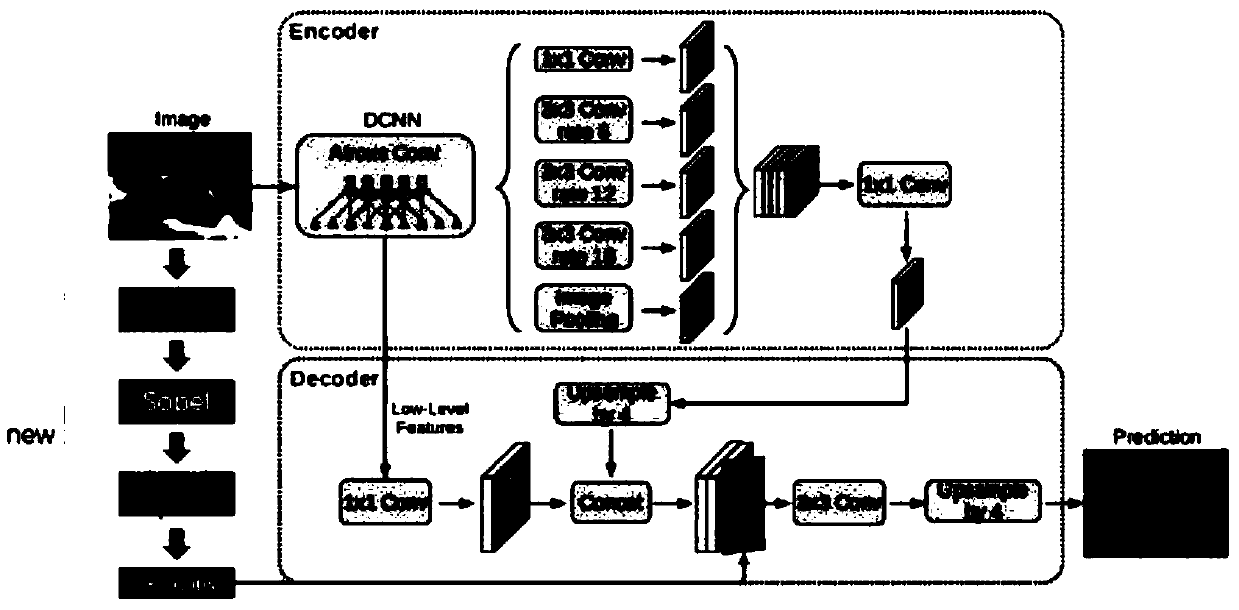

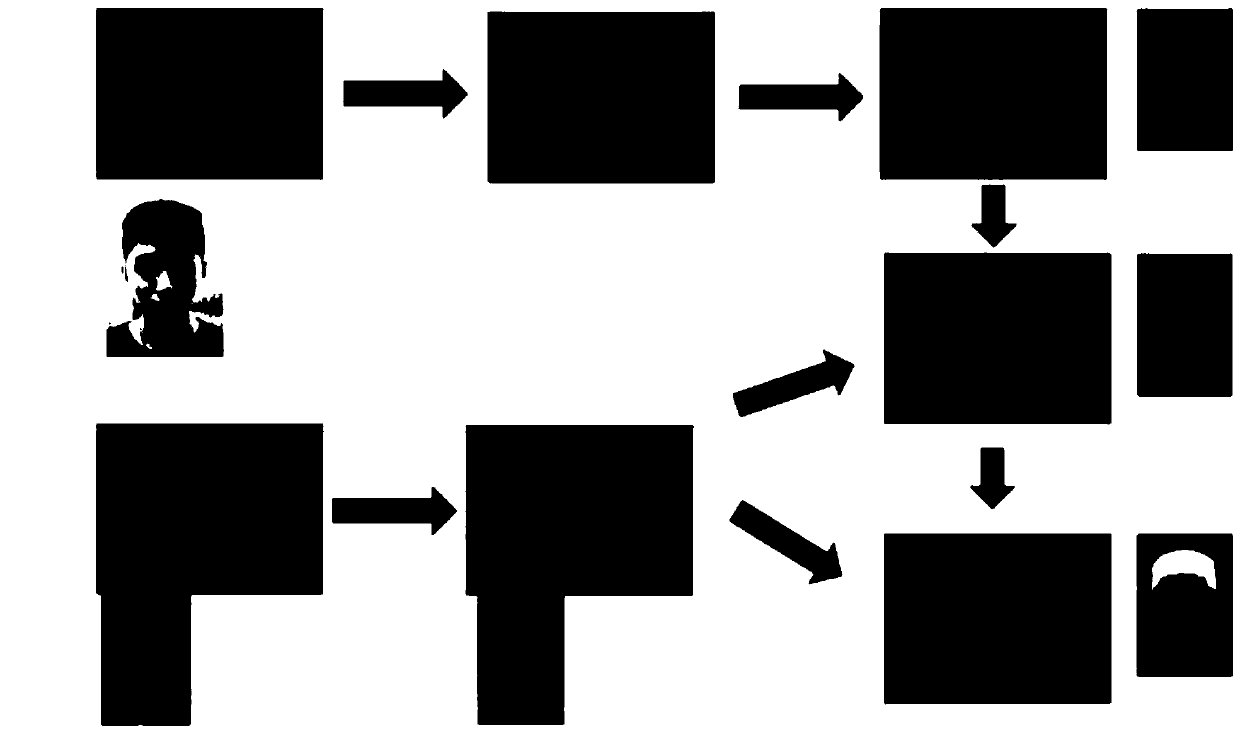

[0021] Figures 1 and 2 show an embodiment of the present invention. This embodiment uses a TOF camera to segment human hair with higher precision. It turns a characteristic of time-of-flight sensing to its advantage: the measurement produces a certain degree of noise on hair, and that noise is exploited to segment the hair more precisely.
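The idea of treating depth noise as a cue can be illustrated with a minimal sketch. The source does not state how the noise is measured, so the local-variance measure, the window size `k`, the `threshold` value, and the function names below are all assumptions made for illustration, not the method of the invention.

```python
import numpy as np


def local_depth_variance(depth: np.ndarray, k: int = 3) -> np.ndarray:
    """Per-pixel variance of depth over a k x k window (k odd, edges replicated)."""
    pad = k // 2
    padded = np.pad(depth.astype(np.float64), pad, mode="edge")
    # View of shape (H, W, k, k): one k x k window per original pixel.
    windows = np.lib.stride_tricks.sliding_window_view(padded, (k, k))
    return windows.var(axis=(-2, -1))


def noisy_region_mask(depth: np.ndarray, k: int = 3, threshold: float = 1e-4) -> np.ndarray:
    """Boolean map of pixels whose local depth variance exceeds a threshold.

    The threshold is a hypothetical value; it would need tuning for a real sensor.
    """
    return local_depth_variance(depth, k) > threshold
```

Pixels flagged by such a map could serve as candidate hair regions to refine a preliminary segmentation, under the assumption that hair produces noisier depth readings than skin or background.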

[0022] First, a preliminary segmentation of the hair is obtained by deep learning.

[0023] Specifically, an existing small, coarsely annotated data set of hair color images with masks is used to build a deep learning network for hairstyles. Hair gradient information, which is an important cue, is integrated into the training to obtain a preliminary hair segmentation map.
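One plausible way to integrate gradient information is to feed it to the network as an extra input channel. The sketch below assumes this approach; the source does not say how the gradient is integrated, and the function names, the luma weights used for grayscale conversion, and the four-channel layout are illustrative assumptions.

```python
import numpy as np


def gradient_magnitude(gray: np.ndarray) -> np.ndarray:
    """Gradient magnitude of a grayscale image, scaled to [0, 1]."""
    # np.gradient returns derivatives along axis 0 (rows) then axis 1 (columns).
    gy, gx = np.gradient(gray.astype(np.float32))
    mag = np.hypot(gx, gy)
    peak = mag.max()
    return mag / peak if peak > 0 else mag


def stack_rgb_and_gradient(rgb: np.ndarray) -> np.ndarray:
    """Build an (H, W, 4) network input: RGB in [0, 1] plus a gradient channel."""
    rgb = rgb.astype(np.float32) / 255.0
    # Standard luma weights, assumed here for the grayscale conversion.
    gray = rgb @ np.array([0.299, 0.587, 0.114], dtype=np.float32)
    grad = gradient_magnitude(gray)
    return np.concatenate([rgb, grad[..., None]], axis=-1)
```

A segmentation network trained on such input would have its first convolution accept four channels instead of three. Other options, such as a gradient-weighted loss, would also fit the description.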

[0024] Referring to figure 1, this embodiment uses the Figaro 1K hair data set, which contains 1050 hair images. Data augmentation is applied to the data set: add noise to hair, change br...
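The noise step of this augmentation might look like the following sketch. It assumes zero-mean Gaussian noise applied only inside the hair mask; the noise type, the `sigma` value, and the function name are assumptions, since the source names only the operation.

```python
import numpy as np


def add_hair_noise(image: np.ndarray, mask: np.ndarray,
                   sigma: float = 10.0, rng=None) -> np.ndarray:
    """Add Gaussian noise to the pixels of an (H, W, 3) uint8 image where mask > 0."""
    rng = np.random.default_rng() if rng is None else rng
    noise = rng.normal(0.0, sigma, size=image.shape)
    # Broadcast the (H, W) mask over the color channels so only hair pixels change.
    noisy = image.astype(np.float32) + noise * (mask[..., None] > 0)
    return np.clip(noisy, 0, 255).astype(np.uint8)
```

Passing a seeded generator, for example `np.random.default_rng(0)`, makes the augmentation reproducible across runs.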
