A pedestrian re-identification method based on deep learning and overlapped image inter-block measurement

A technology based on overlapping images and deep learning, applied in character and pattern recognition, instruments, biological neural network models, etc. It addresses problems such as interference, limited recognition performance, and factors that strongly affect recognition performance. Its effects are an improved recognition rate, improved recognition performance, and good robustness.

Embodiment

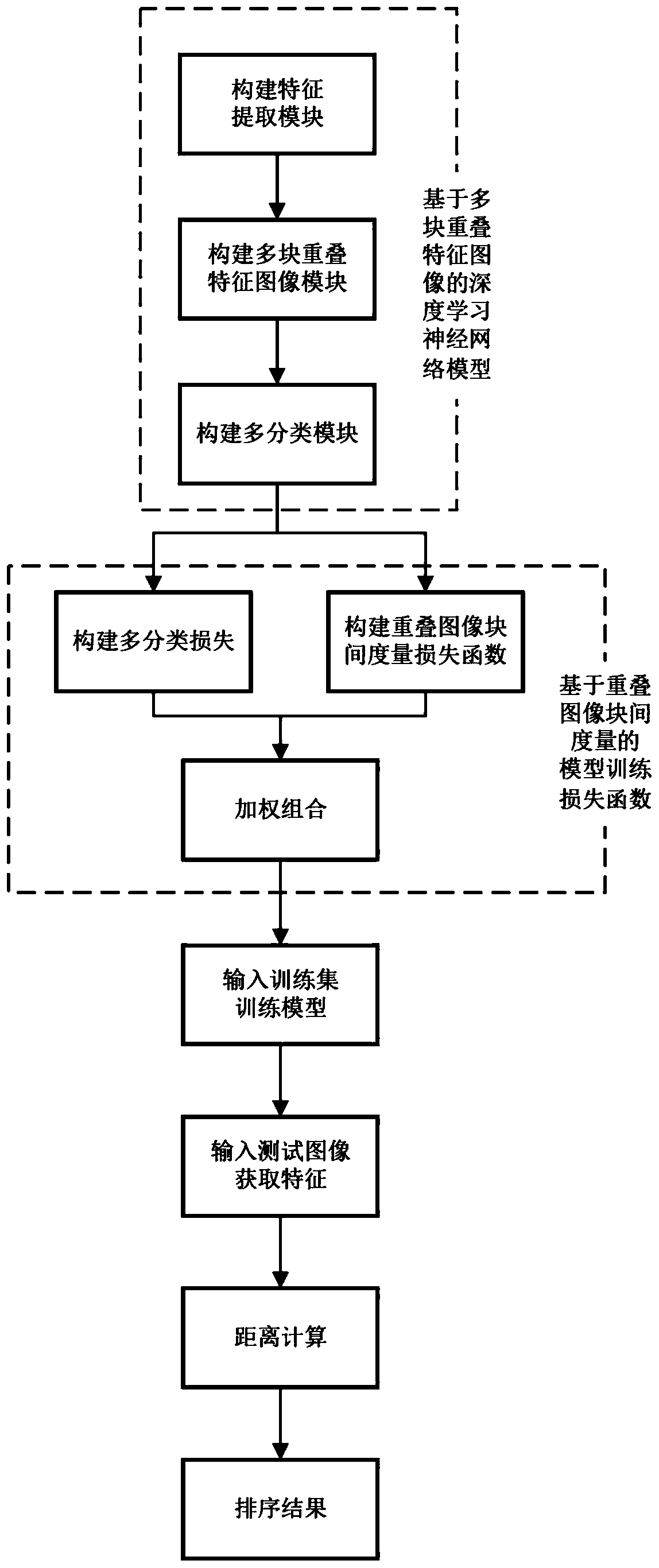

[0063] To make the objectives, technical solutions, and advantages of the present invention clearer, the present invention is further described in detail below with reference to the embodiments and the algorithm flow chart shown in Figure 1. It should be understood that the specific embodiments described here are only used to explain the present invention and are not intended to limit it.

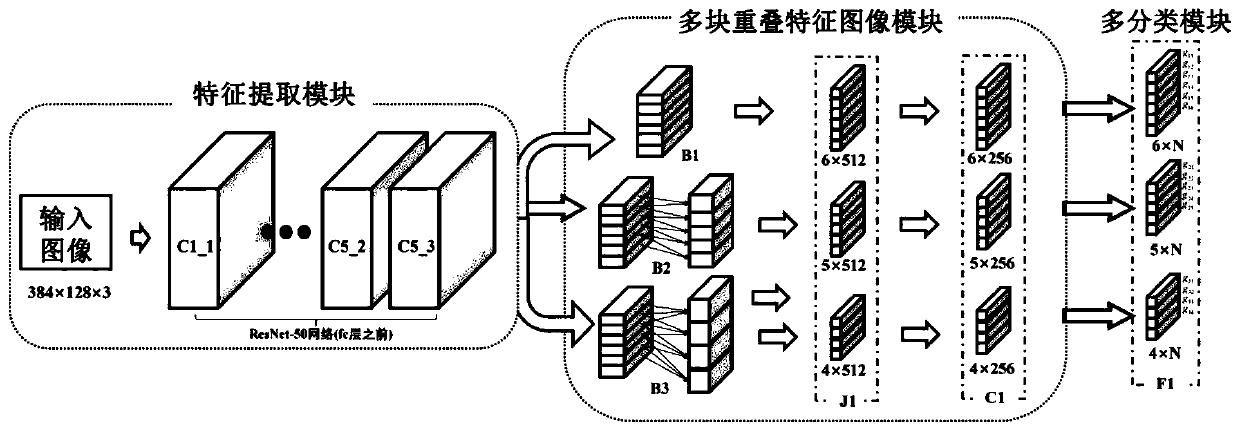

[0064] Step 1: Construct a deep learning neural network model based on multi-block overlapping feature images, as follows. The model is formed by sequentially connecting a feature extraction module, a multi-block overlapping feature image module, and a multi-classification module, and is mainly used to extract robust and highly discriminative features from pedestrian images. The deep learning neural network model based on multi-block overlapping feature images adds a multi-bl...
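The excerpt names the multi-block overlapping feature image module but is truncated before it says how the blocks are formed. Horizontal stripe partitioning of the backbone feature map is a common part-based approach in pedestrian re-identification, so the minimal sketch below assumes the blocks are horizontal stripes that overlap by a fixed number of rows and are average-pooled into one vector each. The block count, overlap size, pooling choice, and the helper names `overlapping_blocks` and `block_features` are all hypothetical and do not come from the patent.

```python
from typing import List, Sequence, Tuple

# Hypothetical helpers: the excerpt does not specify block count, overlap size,
# or pooling, so all three are assumptions made for illustration.

def overlapping_blocks(height: int, num_blocks: int, overlap: int) -> List[Tuple[int, int]]:
    """Split rows [0, height) into num_blocks horizontal stripes in which each
    pair of consecutive stripes shares `overlap` rows. Returns half-open ranges."""
    if num_blocks < 1 or overlap < 0:
        raise ValueError("num_blocks must be >= 1 and overlap must be >= 0")
    # K stripes of size (stride + overlap) spaced `stride` apart cover
    # K * stride + overlap rows, so the stride must divide (height - overlap).
    stride, remainder = divmod(height - overlap, num_blocks)
    if stride <= 0 or remainder != 0:
        raise ValueError("height - overlap must be a positive multiple of num_blocks")
    size = stride + overlap
    return [(i * stride, i * stride + size) for i in range(num_blocks)]


def block_features(fmap: Sequence[Sequence[Sequence[float]]],
                   blocks: List[Tuple[int, int]]) -> List[List[float]]:
    """Average-pool an H x W x C feature map (nested lists) over each row range,
    yielding one C-dimensional feature vector per block."""
    channels = len(fmap[0][0])
    feats = []
    for start, end in blocks:
        total = [0.0] * channels
        count = 0
        for row in fmap[start:end]:
            for cell in row:
                for c in range(channels):
                    total[c] += cell[c]
                count += 1
        feats.append([t / count for t in total])
    return feats


if __name__ == "__main__":
    # Assumed 24 x 8 feature map (e.g. a 384 x 128 input at an overall stride of 16).
    blocks = overlapping_blocks(height=24, num_blocks=4, overlap=4)
    print(blocks)  # [(0, 9), (5, 14), (10, 19), (15, 24)]
```

Each stripe shares its last four rows with the next one, so features near a block boundary contribute to two blocks instead of being cut off by a hard partition.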

Specific Embodiments

[0119] Figure 1 is the flow chart of the OBM algorithm implementation of the present invention. The specific implementation is as follows:

[0120] 1. Build a feature extraction module;

[0121] 2. Build the multi-block overlapping feature image module;

[0122] 3. Build the multi-classification module;

[0123] 4. Construct a multi-classification loss function ClassificationLoss;

[0124] 5. Construct a metric loss function OverlapBlocksLoss between overlapping image blocks;

[0125] 6. Weight and sum the ClassificationLoss and OverlapBlocksLoss loss functions to obtain the final model training loss function;

[0126] 7. Resize all training-set images to 384 × 128;

[0127] 8. Set the training batch size (Batch Size) to 64 and the number of training epochs (Epoch) to 80;

[0128] 9. Repeatedly feed training images into the model for training, compute the loss value with the model training loss function based on the metric between overlapping image blocks, and use the stochastic...
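Steps 4 to 8 above can be sketched as a loss computation plus training constants. The excerpt names ClassificationLoss and OverlapBlocksLoss and says they are weighted, but gives neither formula nor weights. The minimal sketch below therefore assumes ClassificationLoss is mean softmax cross-entropy over one classifier head per block, and substitutes a plainly hypothetical OverlapBlocksLoss (mean squared Euclidean distance between adjacent block features); the weights `w_cls` and `w_obm` are placeholders. Only the 384 × 128 size, batch size 64, and 80 epochs come from the source.

```python
import math
from typing import List, Sequence

# Training settings taken from steps 7 and 8; reading 384*128 as height x width is an assumption.
INPUT_SIZE = (384, 128)
BATCH_SIZE = 64
EPOCHS = 80


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Softmax cross-entropy for one sample, using log-sum-exp for stability."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def classification_loss(block_logits: List[Sequence[float]], label: int) -> float:
    """Hypothetical ClassificationLoss: mean cross-entropy over one classifier head per block."""
    return sum(cross_entropy(z, label) for z in block_logits) / len(block_logits)


def overlap_blocks_loss(block_feats: List[Sequence[float]]) -> float:
    """Hypothetical OverlapBlocksLoss: mean squared Euclidean distance between the
    features of adjacent (overlapping) blocks. Not the patent's published formula."""
    if len(block_feats) < 2:
        return 0.0
    dists = [sum((a - b) ** 2 for a, b in zip(f, g))
             for f, g in zip(block_feats, block_feats[1:])]
    return sum(dists) / len(dists)


def total_loss(block_logits: List[Sequence[float]], block_feats: List[Sequence[float]],
               label: int, w_cls: float = 1.0, w_obm: float = 0.5) -> float:
    """Step 6: weighted sum of the two losses. Both weights are placeholders."""
    return (w_cls * classification_loss(block_logits, label)
            + w_obm * overlap_blocks_loss(block_feats))


if __name__ == "__main__":
    logits = [[2.0, 0.5, -1.0], [1.5, 0.2, 0.1]]  # two blocks, three identities
    feats = [[0.1, 0.3], [0.2, 0.2]]               # one feature vector per block
    print(total_loss(logits, feats, label=0))
```

This pure-Python version only evaluates the loss for a single image; in practice the per-image values would be averaged over a batch of `BATCH_SIZE` images and minimized with a deep learning framework that supplies the gradients.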

© 2025 PatSnap. All rights reserved.