Remote sensing image description method based on joint latent semantic embedding

A remote sensing image description method based on joint latent semantic embedding, applied in the fields of instruments, computing, and electrical digital data processing. It addresses the problems that existing approaches cannot be applied effectively to complex scenes and do not make full use of remote sensing images, and it achieves a fuller description of the image.



Embodiment Construction

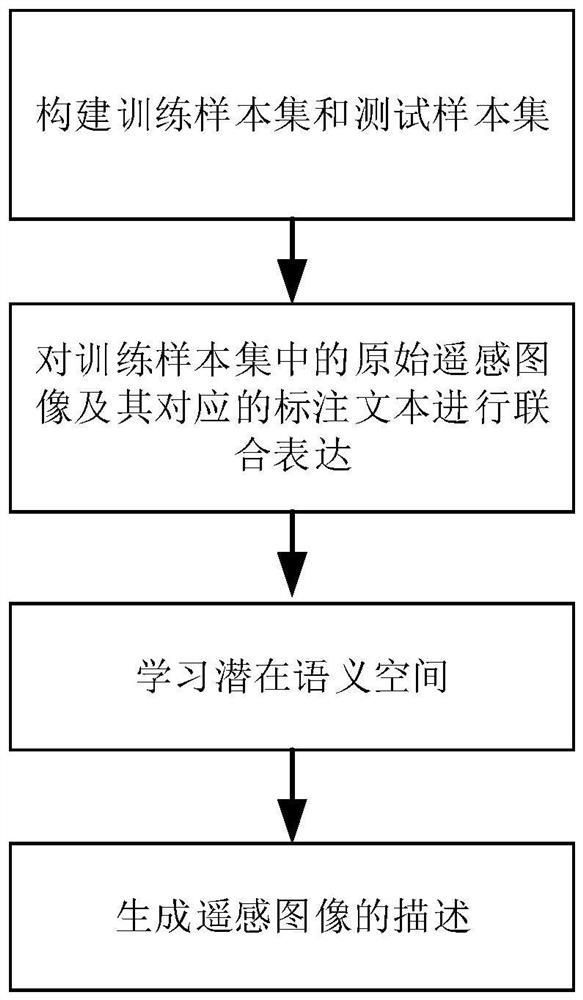

[0055] Referring to Figure 1, the steps of the present invention are as follows:

[0056] Step 1) Construct the training sample set and the test sample set:

[0057] Divide the original remote sensing images and their corresponding annotations in the database (UCM-captions, Sydney-captions or RSICD) into two sets. Preferably, 90% of the original remote sensing images and their corresponding annotations are assigned to the training sample set and the other 10% to the test sample set; the remote sensing images to be retrieved are also assigned to the test sample set.
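The 90%/10% division in Step 1 can be sketched as follows. This is only an illustrative sketch: the patent text does not say how samples are selected, so the random shuffle, the fixed seed, the function name `split_dataset`, and the placeholder file names and captions are all assumptions made for the example. The split is done per image so that every annotation stays with its image.

```python
import random


def split_dataset(samples, train_fraction=0.9, seed=0):
    """Shuffle (image, captions) pairs and split them into train and test lists.

    Assumption: a seeded random shuffle; the source only states the 90/10 ratio.
    """
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must be strictly between 0 and 1")
    shuffled = list(samples)  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(shuffled)
    n_train = int(round(len(shuffled) * train_fraction))
    return shuffled[:n_train], shuffled[n_train:]


# Hypothetical placeholder data: these file names and captions are made up
# and do not come from UCM-captions, Sydney-captions or RSICD.
samples = [(f"image_{i:04d}.tif", [f"caption for image {i}"]) for i in range(100)]

train_set, test_set = split_dataset(samples)
print(len(train_set), len(test_set))
```

Fixing the seed keeps the split reproducible between runs, which matters when the test sample set is later used for evaluation.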

[0058] Step 2) Jointly represent each original remote sensing image in the training sample set and its corresponding annotation text:

[0059] Step 2.1) Use a pre-trained deep neural network to extract the image features of each original remote sensing image;

[0060] Step 2.2) Use the pre-trained word vectors to extract the...
