Question answering method and system based on brain-inspired semantic hierarchical temporal memory reasoning model

A Semantic, Temporal Technique Applied to the Field of Cognitive Neuroscience

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

[0072] The application will be further described in detail below in conjunction with the accompanying drawings and embodiments. It should be understood that the specific embodiments described here are only used to explain related inventions, not to limit the invention. It should also be noted that, for the convenience of description, only the parts related to the related invention are shown in the drawings.

[0073] It should be noted that, in the case of no conflict, the embodiments in the present application and the features in the embodiments can be combined with each other. The present application will be described in detail below with reference to the accompanying drawings and embodiments.

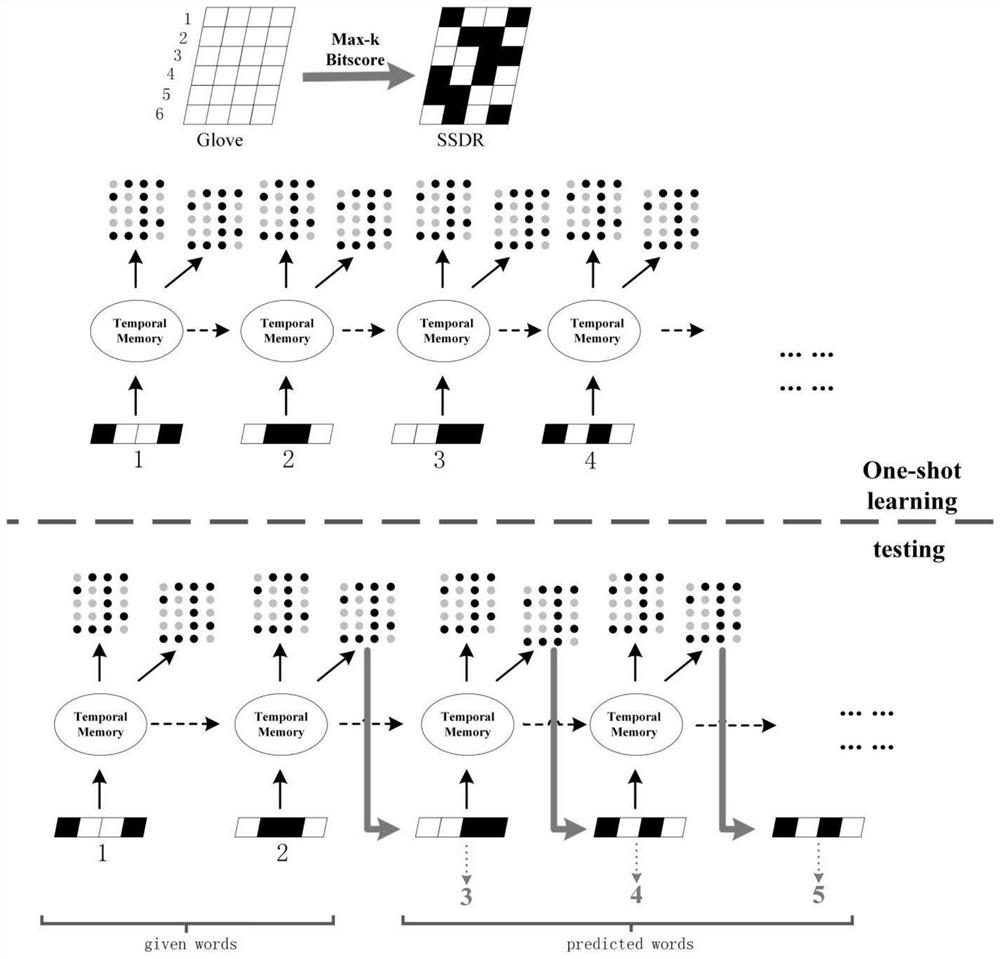

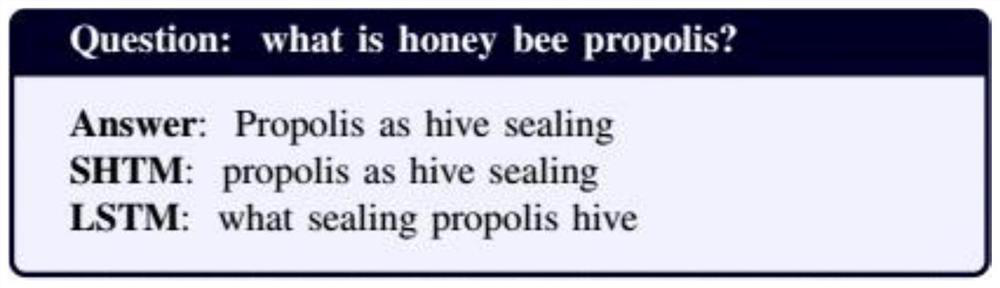

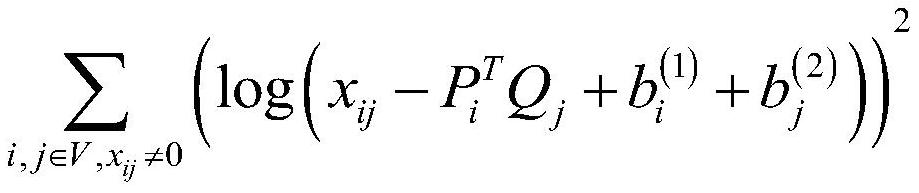

[0074] Traditional neural networks, contrary to the process of human learning knowledge, require a large amount of data and continuously optimize the model, while the HTM model (Hierarchical Temporal Memory, semantic hierarchical temporal memory model) is rarely used in natural langu...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com