Grabbing point acquisition method of robot stable grasping object based on monocular vision

A technology of monocular vision and acquisition method, which is applied in the direction of instruments, computer components, character and pattern recognition, etc., can solve the problem of low capture success rate, achieve the improvement of capture success rate, fast and stable capture, and execution efficiency high effect

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

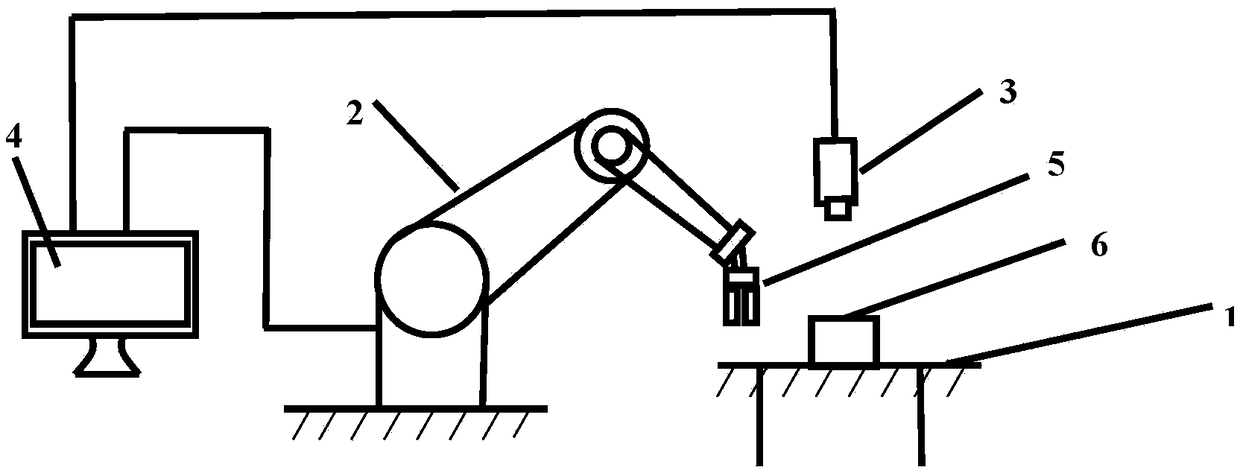

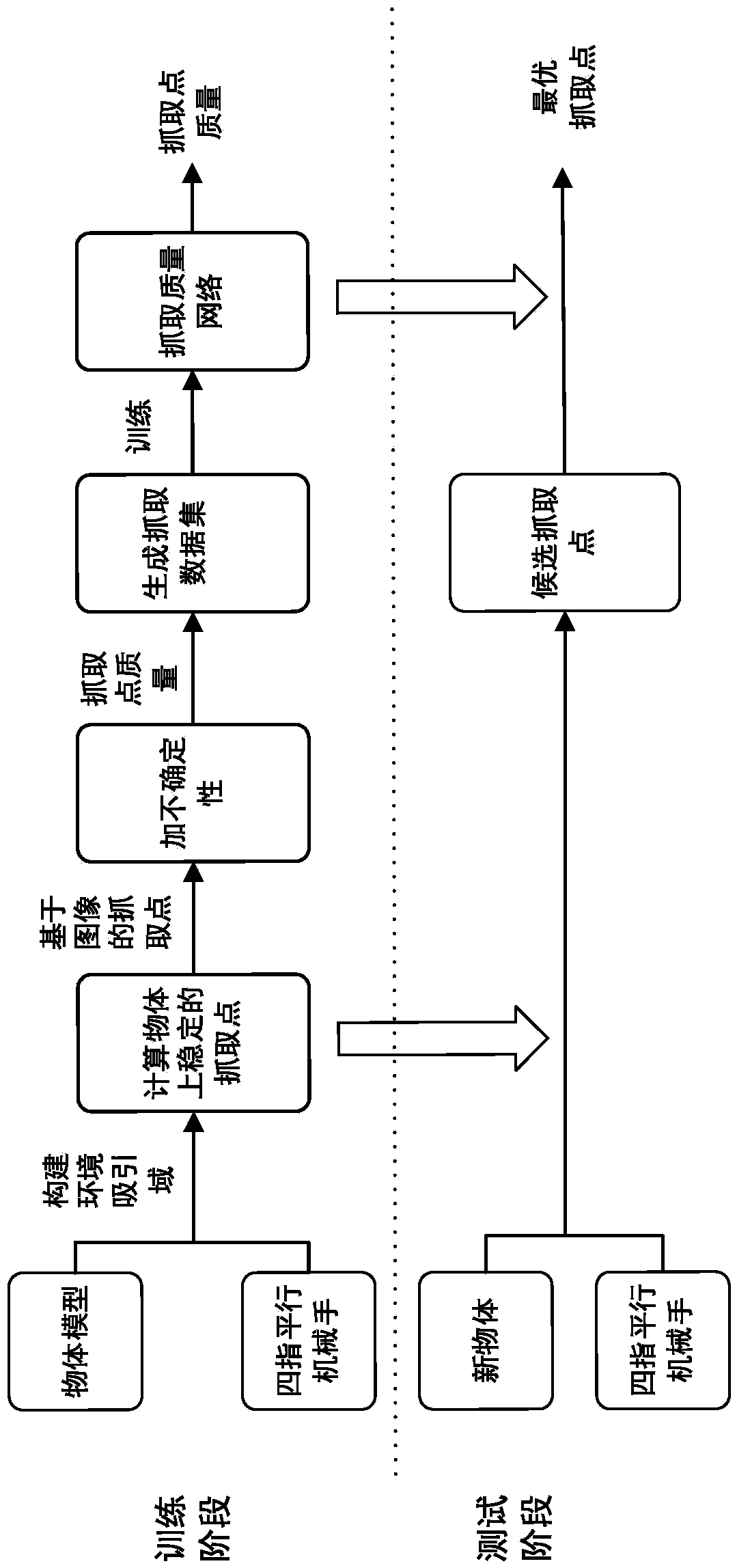

[0045] In order to make the purpose, technical solutions and advantages of the present invention clearer, the technical solutions in the embodiments of the present invention will be clearly and completely described below in conjunction with the accompanying drawings. Obviously, the described embodiments are part of the embodiments of the present invention, rather than Full examples. Based on the embodiments of the present invention, all other embodiments obtained by persons of ordinary skill in the art without making creative efforts belong to the protection scope of the present invention.

[0046] The application will be further described in detail below in conjunction with the accompanying drawings and embodiments. It should be understood that the specific embodiments described here are only used to explain related inventions, rather than to limit the invention. It should also be noted that, for the convenience of description, only the parts related to the related invention...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D Engineer

- R&D Manager

- IP Professional

- Industry Leading Data Capabilities

- Powerful AI technology

- Patent DNA Extraction

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2024 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com